AI Governance Framework: Compliance, Ethics & Audit Trails | 2025-26 Guide

The Silent Crisis in AI Adoption: An Introduction

Your business just deployed a machine learning model to screen job applications. Two weeks later, you discover that it rejects qualified women at twice the rate of men. Your executive team is in a panic. Your lawyers want answers. Regulators are asking questions. And there is no audit trail showing how the algorithm made its choices, so you can’t prove what went wrong or why.

This scenario is not far-fetched. Companies across industries are facing a reckoning: they built AI systems without the right safeguards. They moved fast and deployed widely, and now they’re trying to figure out what their own algorithms actually do.

The technology isn’t the problem. It’s the lack of rules.

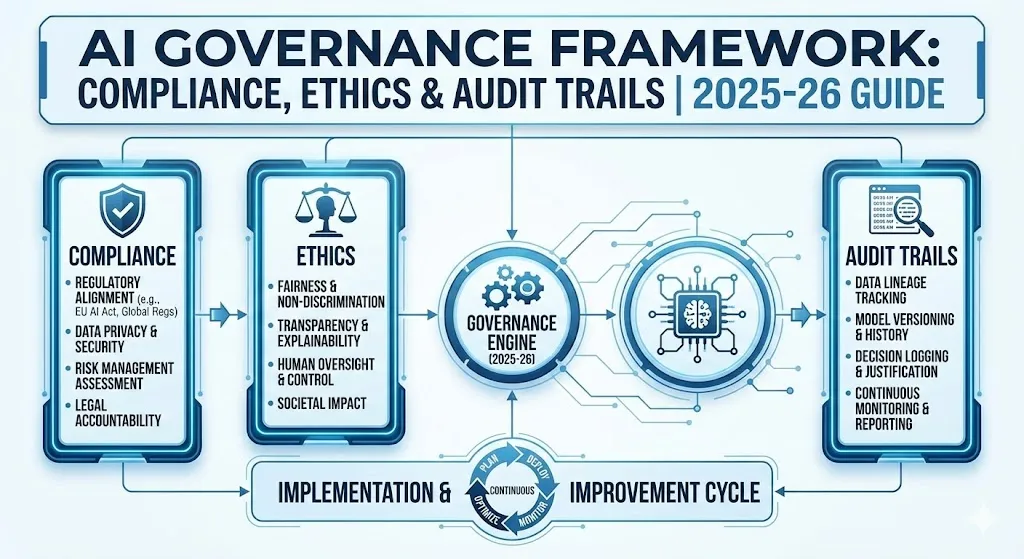

If you’re building or using AI in your business, you’re probably thinking about model accuracy, deployment speed, and cost. There’s one more thing you should be thinking about, even if you aren’t yet: governance. AI governance isn’t bureaucracy for its own sake. It’s the difference between innovation that builds trust and innovation that breaks it. It’s the infrastructure that keeps your AI ethical, compliant, and accountable.

In this post, we’ll cover what AI governance really means, why it matters more than you think, and how to build it into your business without slowing down innovation. You’ll learn about the main frameworks practitioners use, such as the NIST AI Risk Management Framework, the EU AI Act, and India’s new guidelines. You’ll also learn how to spot bias before it hurts real people, how to keep the audit trails regulators expect, and, most importantly, why many AI governance efforts fail quietly inside organizations and how to avoid that trap.

By the end, you’ll have practical ideas for putting AI governance into practice in your own setting, whether you’re a startup, a large enterprise, or a government agency.

1. What is AI governance, and why is it more important than you might think?

Because “AI governance” is used in different ways, let’s start with the basics.

AI governance is the set of rules, policies, and structures that guide how your company builds, deploys, and oversees AI systems. Its goal is to make sure AI operates ethically, transparently, and in compliance with the law, while still leaving room for innovation. Think of it as the guardrails on a highway: they don’t stop you from driving fast; they keep you from driving off a cliff.

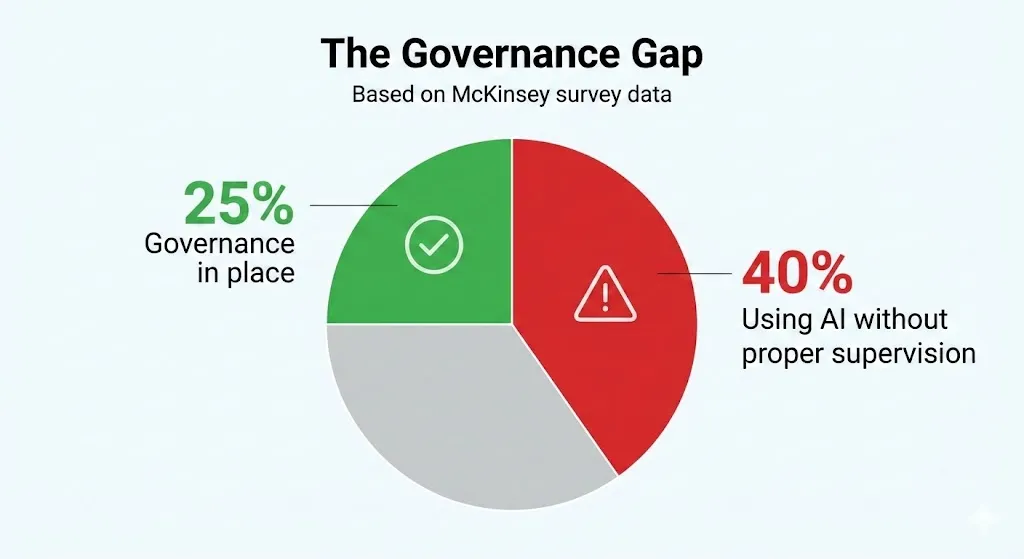

The stakes are high. According to a McKinsey survey, 65% of businesses use AI for at least one important task, yet only 25% have actually put AI governance frameworks in place. If those figures hold, roughly 40% of businesses are using AI without proper oversight. They’re operating in a governance vacuum and may not even realize how dangerous it is.

This matters for three reasons:

Regulatory pressure is rising. The EU AI Act requires high-risk AI systems to keep automatic logs, in effect audit trails, of how they operate. The SEC is looking closely at how financial firms use AI to make decisions. India has just published detailed AI governance guidelines meant to protect people while encouraging innovation. If you can’t show that you have controls in place, your business could face significant fines.

When governance fails, trust erodes. Unfair hiring algorithms, facial recognition systems that perform poorly on people with darker skin, and biased credit-scoring systems are not isolated incidents; they form a pattern. Each time an AI system behaves unethically, it damages public trust in the technology as a whole. That makes it harder for you to recruit talent, win customers, and run your business without constant regulatory firefighting.

Governance is also a competitive edge. Companies with mature AI governance can move faster because the right systems are already in place. They catch problems early. They don’t panic when regulations change. And they retain good employees, who trust the company’s commitment to doing the right thing.

The bottom line: AI governance isn’t just a compliance checkbox. It’s how you build AI that lasts and that benefits both your business and society.

2. The Five Pillars of AI Ethics: Laying the Groundwork for AI That Is Responsible

Ethics is the foundation; governance is the structure built on top of it. Without ethics, governance has nothing to stand on.

There are five main parts to responsible AI, and they all depend on each other.

Accountability

Someone has to take responsibility for the outcome. If your AI model makes a choice that hurts someone, regulators won’t accept “the algorithm decided.” They’ll want to know who gave the model the go-ahead. Who is keeping an eye on it? Who is to blame if it doesn’t work?

Accountability means everyone in your organization knows their role and responsibilities. It means someone has the authority to reject a model that doesn’t meet your ethical standards. And it means documenting decisions so that, when questions come up (and they will), you can trace who decided what.

Transparency

AI systems are often described as “black boxes”: data goes in, a decision comes out, and no one knows why.

Transparency changes that. It means your AI systems can explain why they did what they did. A lending system states why a loan application was declined. A hiring algorithm records why it flagged a candidate. Openness makes it easier for users and stakeholders to trust you.

This is where explainable AI (XAI) comes in: a set of methods that help people understand how AI systems reach their decisions. You’re moving from a “black box” to a “glass box.”
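As a minimal sketch of the glass-box idea, assume a hypothetical linear credit-scoring model. Because its score is a weighted sum, each feature’s contribution (weight × value) is an exact explanation of the decision. The feature names, weights, and threshold below are invented for illustration; more complex models usually need post-hoc explanation methods such as SHAP or LIME instead.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical linear model: score = bias + sum(weight * value)."""
    weights: dict[str, float]
    bias: float
    threshold: float

    def explain(self, applicant: dict[str, float]) -> tuple[bool, float, list[tuple[str, float]]]:
        # For a linear model, each feature's contribution is exact, not an approximation.
        contributions = {name: w * applicant[name] for name, w in self.weights.items()}
        score = self.bias + sum(contributions.values())
        approved = score >= self.threshold
        # Rank features by absolute impact so the biggest drivers are listed first.
        ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return approved, score, ranked


model = LinearScorer(
    weights={"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5},
    bias=0.5,
    threshold=1.0,
)
approved, score, reasons = model.explain({"income_k": 50, "debt_ratio": 0.6, "late_payments": 2})
print(f"approved={approved} score={score:.2f}")
for feature, impact in reasons:
    print(f"  {feature}: {impact:+.2f}")
```

The ranked list is what a declined applicant or an auditor would see: income pushed the score up, while the debt ratio and late payments pulled it below the threshold.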

Fairness and Non-Discrimination

Fairness means treating people equitably regardless of race, gender, age, or other protected characteristics. That sounds easy, but AI systems trained on biased data can learn, and perpetuate, past discrimination.

Fairness requires careful data curation, diverse and representative datasets, and continuous monitoring. To make sure your models work fairly for everyone, test them across demographic groups. This is not a one-time check; it is ongoing.
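A minimal sketch of one such group test: compute each group’s selection rate and compare it with the most-favored group’s rate. The 0.8 cutoff is the “four-fifths rule” of thumb from the US Uniform Guidelines on Employee Selection Procedures; it is a screening heuristic, not a legal determination. The sample data is invented to mirror the opening scenario.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected: bool). Returns {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    ratios = {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Invented data: men rejected 40% of the time, women 80% (twice the rate).
sample = ([("men", True)] * 60 + [("men", False)] * 40
          + [("women", True)] * 20 + [("women", False)] * 80)
ratios, flagged = adverse_impact(sample)
print(ratios)
print(flagged)
```

Here women are selected at one third of the men’s rate, well under 0.8, so the group is flagged for investigation.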

Reliability

Can people count on your AI to perform consistently? Or does its performance swing depending on the situation?

Reliability means your AI system produces consistent, predictable results. It means monitoring performance over time and catching drift, the gradual loss of accuracy that happens when real-world data shifts away from the data the model was trained on. Unreliable AI can cause real harm in healthcare, finance, and law enforcement.
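One common way to catch drift is the Population Stability Index (PSI), which compares how a feature, or the model’s scores, is distributed in production against a reference window such as the training data. This is a minimal sketch assuming fixed, pre-chosen bin edges; the 0.1 and 0.25 alert bands are widely used rules of thumb rather than a standard, and should be tuned per system.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Share of values in each bin defined by sorted interior `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    score = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score


def drift_status(score):
    # Rule-of-thumb bands; adjust to your own risk tolerance.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift: investigate"
    return "significant drift: review or retrain"


edges = [20, 40, 60, 80]
training = list(range(0, 100))                          # roughly uniform reference
production = [v for v in range(0, 100) if v >= 40] * 2  # mass shifted to upper bins
score = psi(training, production, edges)
print(round(score, 3), drift_status(score))
```

Running the same check on a schedule, per feature and on the model’s output scores, turns “catching drift” into an alert someone can act on.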

Privacy and Security

Your AI system may handle confidential business data, sensitive customer data, or employee information, and you have to protect it. Privacy means collecting and using only the data you need, obtaining consent to do so, and encrypting data at rest and in transit. Security means defending against attacks, preventing unauthorized access, and protecting your training data from tampering.

These five pillars are interconnected and reinforce one another. You can’t hold people accountable without transparency into what they did. You can’t credibly claim fairness without addressing data quality and privacy. Together, they form a coherent ethical framework for your AI systems.
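Data minimization and pseudonymization can be sketched with Python’s standard library: keep only the fields a model is approved to use, and replace direct identifiers with a keyed hash (HMAC-SHA256) so records can still be joined without exposing the raw ID. The field names and allow-list are hypothetical, and a real key would come from a secrets manager, not source code. Note that pseudonymized data generally still counts as personal data under laws such as the GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this model is approved to use.
ALLOWED_FIELDS = {"years_experience", "certifications", "skills_score"}


def pseudonymize(identifier: str, key: bytes) -> str:
    """Keyed hash: stable for joins, not reversible without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Drop every field not on the allow-list and pseudonymize the subject ID."""
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["subject_id"] = pseudonymize(record["email"], key)
    return slim


key = b"load-me-from-a-secrets-manager"  # placeholder only; never hard-code real keys
raw = {
    "email": "a.candidate@example.com",
    "name": "A. Candidate",
    "date_of_birth": "1990-01-01",
    "years_experience": 7,
    "certifications": 2,
    "skills_score": 0.82,
}
print(minimize(raw, key))
```

Using a keyed hash rather than a plain hash matters: without the key, an attacker cannot rebuild the mapping by hashing a list of known email addresses.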

3. Audit Trails: The Key to Being Responsible and Following the Rules

Regulators are now asking this question: Can you show how your AI came to that conclusion?

You have a problem if you can’t.

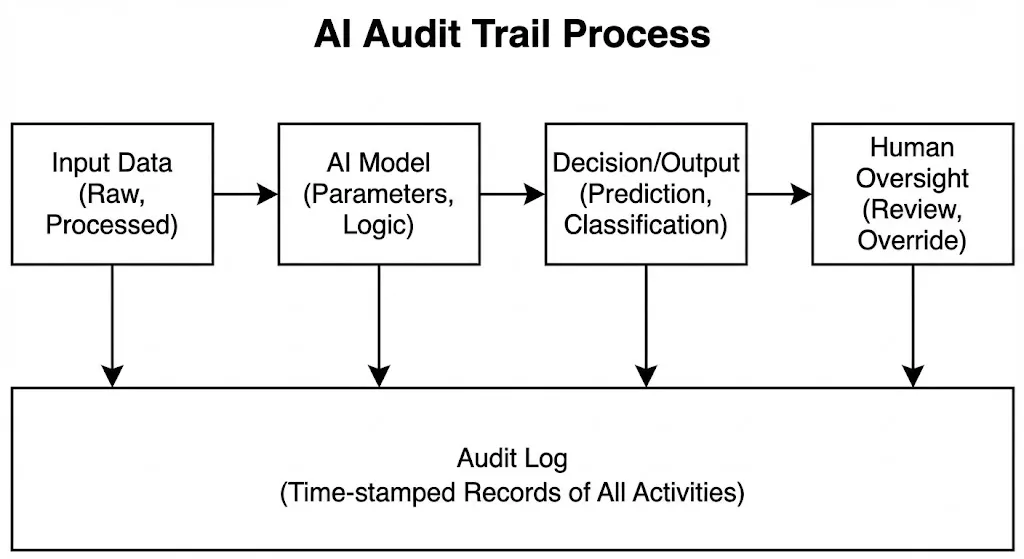

An AI audit trail is a complete record of what happened while an AI system was running: its inputs and outputs, how the model behaved, the logic behind each decision, who used the system, when it was changed, and what resulted.

It’s like the black box on a plane. If something goes wrong, investigators can find out what happened, when it happened, and why it happened. Regulators, auditors, and your own teams can all do the same thing with AI audit trails.

What does a complete audit trail contain?

Audit trails document the full chain of custody for decisions and data. They record:

Input data: What information went into the model?

Data transformations: How was the data cleaned, processed, and prepared?

Model parameters: Which model version and settings were used?

Decision logic: What rules or weights produced each prediction?

Outputs: What decision was made, and why?

Human oversight: Who reviewed or overrode the AI’s decision?

Performance metrics: Did the model behave as expected?
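The checklist above maps naturally onto a structured record written for every decision. This is a minimal sketch with hypothetical field and model names; a real schema depends on your system and on what your regulators expect.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    """One decision's audit entry; fields mirror the checklist (names are illustrative)."""
    model_id: str
    model_version: str                      # model parameters: which trained artifact ran
    inputs: dict                            # input data as received
    transformations: list                   # data preparation steps applied
    output: str                             # the decision made
    reasons: list                           # decision logic: top factors or rules fired
    reviewer: Optional[str] = None          # human oversight: who reviewed it
    override: Optional[str] = None          # the human's final decision, if different
    metrics: dict = field(default_factory=dict)  # monitoring snapshot at decision time
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = AuditRecord(
    model_id="resume-screener",
    model_version="2.3.1",
    inputs={"years_experience": 7, "skills_score": 0.82},
    transformations=["drop_pii", "scale_skills_score"],
    output="advance",
    reasons=["skills_score above cutoff"],
    reviewer="hr.reviewer@example.com",
)
print(record.to_json())
```

Serializing to JSON with sorted keys keeps records stable and easy to ship to whatever log store you use.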

The value is substantial. Audit trails help you detect bias and anomalies: a detailed trail shows exactly when a model started making unfair decisions and what changed in the system. They support compliance, because you can show auditors and regulators the full record when they ask. And they make post-incident investigation possible, so you can determine what went wrong in production instead of guessing.

The regulatory mandate is real.

The EU AI Act requires high-risk AI systems to support automatic logging of events over their lifetime. The SEC has signaled closer scrutiny of how firms use AI in financial decisions. The U.S. FTC is examining whether algorithmic decision-making is unfair. In regulated industries such as finance, healthcare, and insurance, comprehensive audit trails are moving from “nice to have” to “legally required.”

And here’s the thing: it’s far easier to build audit trails in from the beginning than to retrofit them. Companies that wait until they’re audited or sued will wish they had designed their systems for auditability from the start.
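Building audit features in from the start can be as simple as wrapping every prediction call. The sketch below, with hypothetical names throughout, appends each call to a log in which every entry stores the SHA-256 hash of the previous one, so any later edit or deletion breaks the chain and can be detected. A production system would write to durable, access-controlled storage rather than an in-memory list.

```python
import functools
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log (in memory here for illustration)."""

    def __init__(self):
        self.entries = []

    def append(self, payload: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"prev_hash": prev_hash, **payload}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; False means an entry was altered or removed."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def audited(log: AuditLog, model_version: str):
    """Decorator that records inputs, output, model version, and time for each call."""
    def wrap(predict):
        @functools.wraps(predict)
        def inner(features: dict):
            result = predict(features)
            log.append({
                "time": datetime.now(timezone.utc).isoformat(),
                "model_version": model_version,
                "inputs": dict(features),  # copy so later caller edits don't alter the log
                "output": result,
            })
            return result
        return inner
    return wrap


log = AuditLog()


@audited(log, model_version="1.0.0")
def screen(features: dict) -> str:
    # Stand-in for a real model call.
    return "advance" if features["skills_score"] >= 0.7 else "reject"


screen({"skills_score": 0.82})
screen({"skills_score": 0.41})
print(log.verify())                  # True: chain intact
log.entries[0]["output"] = "reject"  # simulate tampering
print(log.verify())                  # False: tampering detected
```

The design choice is that the model code stays unchanged; auditability lives in one reusable wrapper that every prediction path goes through.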

4. AI Governance Frameworks: Which One Should You Use?

Your company needs guidance. The good news is that several established frameworks exist. The challenge is that each one has a different focus. Let’s go over the most important ones.

Table: Quick Comparison of Frameworks

| Framework | Focus Area | Best For | Status |
| --- | --- | --- | --- |
| NIST AI RMF | Risk Management & Trustworthiness | US Organizations, Startups to Enterprises | Voluntary |
| EU AI Act | Regulation & Public Safety | Companies doing business in EU | Mandatory Law |
| ISO/IEC 42001 | Management Systems (Process) | Certification seekers | Global Standard |
| OECD Principles | High-level Values | Policy alignment | International Goal |
| India AI Guidelines | Innovation & Safety Balance | Companies in/targeting India | Advisory/Future Law |

The NIST AI Risk Management Framework

The National Institute of Standards and Technology (NIST) developed the AI Risk Management Framework (AI RMF), a voluntary framework for identifying and managing AI risks. Released as version 1.0 in January 2023, it emphasizes trustworthiness and organizes the work into four functions: Govern, Map, Measure, and Manage. It encourages businesses to assess potential AI risks regularly and decide how to address them.

The NIST framework is not a rigid checklist; it is designed to be adapted. The idea is that organizations should understand how their AI systems work, identify the risks those systems pose, and take mitigation steps that make sense for their context. That flexibility makes it useful for organizations of every size, from small startups to large corporations.
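To make “identify the risks those systems pose” concrete, here is a minimal risk-register sketch. The likelihood × impact scoring on 1–5 scales is a generic risk-management convention, not something the NIST AI RMF prescribes, and the systems, risks, scores, owners, and tolerance below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)
    owner: str
    mitigation: str = ""

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def needs_action(register, tolerance=10):
    """Risks above tolerance, highest score first, so the largest exposures are handled first."""
    over = [r for r in register if r.score > tolerance]
    return sorted(over, key=lambda r: r.score, reverse=True)


register = [
    Risk("resume-screener", "Disparate rejection rates by gender", 4, 5, "VP of AI"),
    Risk("resume-screener", "Input drift after job-board change", 3, 3, "ML lead"),
    Risk("chat-assistant", "Leaks personal data in responses", 2, 5, "CISO"),
]
for r in needs_action(register):
    print(r.score, r.system, r.description, "->", r.owner)
```

Each entry carries a named owner, which ties risk identification back to accountability.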

The EU AI Act

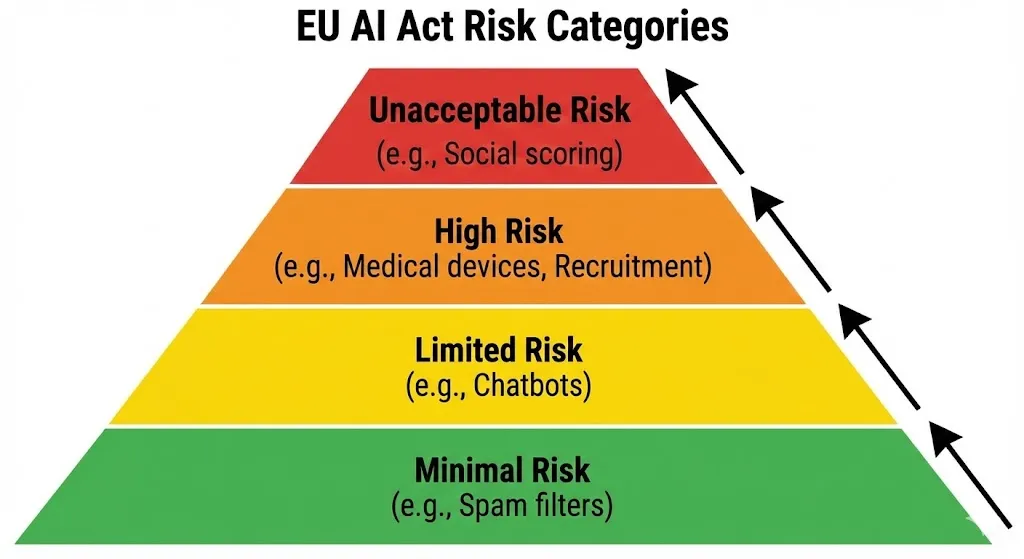

The EU AI Act is different. It is a binding regulation that applies directly in every EU member state. It classifies AI systems by risk level and imposes stricter requirements on high-risk systems, such as those used in hiring, credit decisions, or law enforcement.

For high-risk AI, the Act requires extensive documentation, conformity assessments, human oversight, and robust audit trails. Where NIST says, “Here’s a framework to think with,” the EU AI Act says, “These are the rules you must follow.” If you place AI systems on the EU market, or their outputs are used in the EU, the Act can apply to you even if your company is based elsewhere.

ISO/IEC 42001: AI Management Systems

ISO/IEC 42001, published in December 2023, is an international standard for establishing, maintaining, and continually improving an AI management system (AIMS) within an organization. It follows the Plan-Do-Check-Act cycle that many companies already use for quality management.

The standard covers organizational context, leadership, planning, support, operation, performance evaluation, and improvement. It is certifiable, meaning external auditors can verify that you’ve implemented it correctly. That makes it attractive to organizations that want independent assurance of their AI governance.

OECD AI Principles

The OECD brought together governments and stakeholders from many countries to develop international principles for trustworthy AI, first adopted in 2019. The principles emphasize fairness, transparency, accountability, and human-centered values. They are aspirational goals rather than binding rules, but they matter because they reflect broad agreement among governments and industry leaders.

India’s AI Governance Guidelines

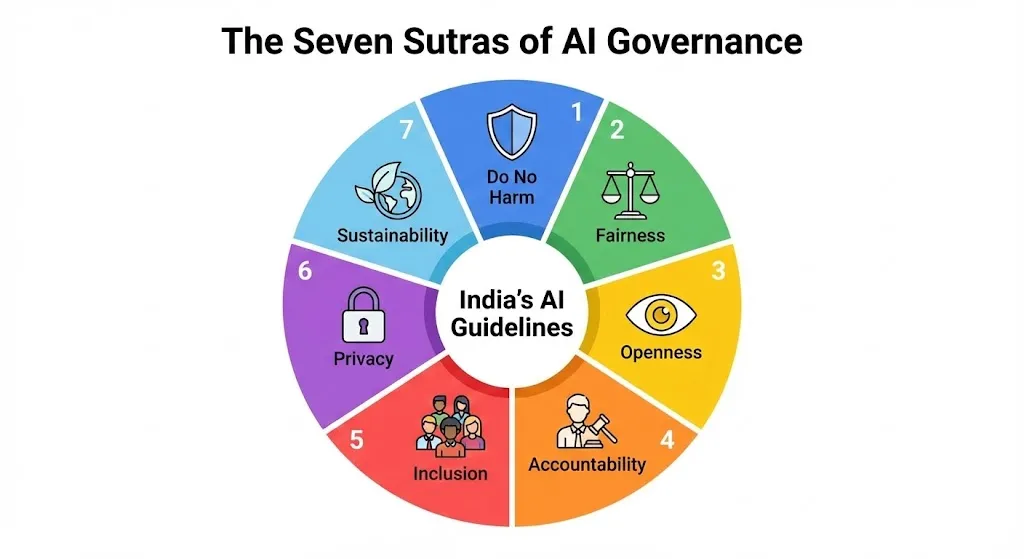

India released detailed AI governance guidelines in November 2025 that balance encouraging innovation with reducing risk. Their “Seven Sutras,” or guiding principles, are: Do No Harm, Fairness, Openness, Accountability, Inclusion, Privacy, and Sustainability.

The framework has three parts: enablement (building infrastructure and capacity), regulation (setting rules and mitigating risk), and oversight (holding institutions accountable). What makes India’s approach stand out is its emphasis on flexible, adaptive governance. The guidelines recommend regulatory “sandboxes” where companies can test AI in controlled settings while keeping risks to a minimum.

Which framework should you use?

Honestly? Probably more than one.

If you operate in or sell into the EU, the EU AI Act is mandatory, and its strict requirements often set the baseline for multinationals.

If you want an internationally recognized, certifiable standard, ISO/IEC 42001 is gaining popularity.

If you want a flexible, risk-focused way of thinking, NIST is a strong choice.

If you operate in India or do business there, the India AI guidelines are increasingly important.

The OECD principles provide a common language for organizations and governments.

Smart companies comply with the strictest rules that apply to them (such as the EU AI Act if they have European customers) and then map their practices to other frameworks to close any gaps.

5. The Hidden Cost of Split Ownership: Why AI Governance Efforts Fail Quietly

Here’s a sobering forecast: by 2027, 60% of AI projects are predicted to miss their value goals, with poor governance as the main cause. By the same year, 30% of enterprise generative AI projects are expected to be abandoned because of weak governance and inadequate risk management.

The problem usually isn’t a lack of intent. Businesses genuinely want to use AI responsibly. The issue is organizational structure. When too many people share responsibility for AI governance, a predictable failure occurs: no one is really in charge.

Here’s an illustrative example of what I mean.

Your business starts using an AI tool for hiring. The data science team built it. The HR team will use it. The legal team has to approve it. The compliance team needs to monitor it. The IT team manages the infrastructure. The risk team assesses it. That’s six teams across multiple departments, and no single person in charge.

A month in, the model starts showing bias. Someone notices. But who is responsible for fixing it? The data science team says, “We built what HR asked for.” HR says, “Data science promised us it was fair.” Legal wants documentation that was never created. Compliance never set up monitoring dashboards. The blame game continues while the fix is delayed.

This is the problem with fragmentation.

Spreading governance responsibilities across too many roles tends to produce three significant failures:

Accountability gaps: When many people are “responsible,” no one really is. Decisions stall. Problems don’t get fixed. Responsibility becomes diffuse, and diffuse systems move slowly.

Inconsistent standards: Different teams apply different rules. Data science and legal define “fair” differently, and risk management has a third definition. Mismatched standards lead to inconsistency, rework, and finger-pointing.

Slow decision-making: Consensus-based governance requires everyone to align, and getting six teams to agree on anything takes time. Critical decisions come late, if at all. Meanwhile, the AI system keeps running and the risks remain.

The answer isn’t “one person does all of AI governance.”

You genuinely need cross-functional teams. Data science needs feedback on bias. Legal needs input on compliance. HR needs support with deployment. But within that collaboration, everyone must know who owns what.

The best companies give a senior executive, such as a Chief AI Officer or VP of AI, the authority and resources to make governance decisions. That person leads a cross-functional team but remains ultimately accountable. They decide when to move forward and when to hold back. They escalate problems to the board. The responsibility is theirs.

IBM’s research found that 60% of executives with clear AI governance champions say their companies outperform those without one. Structure matters.
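One way to make “who owns what” checkable is a RACI matrix (Responsible, Accountable, Consulted, Informed) with one rule: every lifecycle stage has exactly one Accountable owner. The sketch below uses hypothetical stages and role assignments and flags stages with no accountable owner or with several, which is exactly the split-ownership failure in the hiring example.

```python
# Hypothetical RACI matrix: lifecycle stage -> {role: RACI letter}
RACI = {
    "data sourcing":  {"Data Science": "R", "Legal": "C", "VP of AI": "A"},
    "model approval": {"Data Science": "R", "Legal": "A", "Compliance": "A"},
    "deployment":     {"IT": "R", "HR": "C"},
    "monitoring":     {"Compliance": "R", "Risk": "C", "VP of AI": "A", "HR": "I"},
}


def ownership_problems(raci):
    """Return {stage: issue} for every stage without exactly one Accountable role."""
    problems = {}
    for stage, roles in raci.items():
        accountable = [role for role, letter in roles.items() if letter == "A"]
        if not accountable:
            problems[stage] = "no accountable owner"
        elif len(accountable) > 1:
            problems[stage] = "split ownership: " + ", ".join(sorted(accountable))
    return problems


for stage, issue in ownership_problems(RACI).items():
    print(f"{stage}: {issue}")
```

Running a check like this whenever the matrix changes catches ownership gaps before a biased model exposes them.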

6. Case study from the real world: Canada’s Department of Fisheries and Oceans (DFO)

Let’s see how one group put AI governance into practice and what they learned the hard way.

Canada’s Department of Fisheries and Oceans wanted to use AI to improve its operations. It launched several AI projects at once, moving quickly and focusing on innovation. Well-intentioned, right?

They hit a wall.

Projects succeeded at the proof-of-concept stage but stalled when moved into real-world production. The team realized what was missing: governance infrastructure. They had no documented processes for approving models, checking data quality, assessing risk, or handing work off from development to production.

Here’s what DFO did that was different:

First, they defined what AI governance actually means for them. Not a vague commitment, but a clear, written definition of what their AI governance covers.

Second, they assessed their current state. They evaluated their capabilities in data quality, risk management, model documentation, and human oversight, and found gaps everywhere. Even their data management, a relative strength, fell short of where they wanted it to be.

Third, they deliberately chose a lower-risk starting point. Instead of trying to fix everything at once, they picked data quality use cases: problems that used non-sensitive data but still delivered value. This gave them a safe space to develop governance without the pressure of high-risk compliance requirements.

Fourth, they built the governance infrastructure in parallel with model development. They created role matrices showing who was responsible for what at each stage of the AI lifecycle. They defined responsible AI principles and put them into practice through playbooks and self-assessment questionnaires. They designed an AI governance operating model that made clear how projects move from development to testing to production. And they set up a process for bringing new AI projects into the governance framework.

The result: by the time DFO was ready to build models, the governance structures were already in place. They avoided the costly failures of earlier projects and showed that structured governance speeds up deployment by preventing problems before they happen.

The lesson: governance isn’t something you bolt on after innovating. It belongs in your innovation plans from the start. Done right, it doesn’t slow you down; it speeds you up by sparing you rework and avoidable risk.
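The development-to-testing-to-production flow can be enforced as a stage gate: a project advances only when the artifacts required for the next stage exist. The stages and artifact names below are hypothetical, loosely echoing the artifacts described above; they are not DFO’s actual requirements.

```python
# Hypothetical artifacts required before a project may enter each stage.
REQUIRED = {
    "testing": {"intake_form", "data_quality_report", "risk_assessment"},
    "production": {"intake_form", "data_quality_report", "risk_assessment",
                   "bias_test_results", "self_assessment", "named_owner_signoff"},
}
ORDER = ["development", "testing", "production"]


def can_promote(current: str, artifacts: set) -> tuple[bool, set]:
    """Return (allowed, missing artifacts) for moving to the next stage."""
    idx = ORDER.index(current)
    if idx == len(ORDER) - 1:
        return False, set()  # already in production; nothing to promote to
    missing = REQUIRED[ORDER[idx + 1]] - artifacts
    return not missing, missing


ok, missing = can_promote("testing", {"intake_form", "data_quality_report",
                                      "risk_assessment", "bias_test_results"})
print(ok, sorted(missing))
```

Because the gate reports exactly what is missing, it doubles as a to-do list for the project team rather than a bare rejection.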

7. How to Build Your AI Governance Team: Why Different Skills Are Important

Who should be on your AI governance team?

If you’re thinking “data scientists and compliance people,” you’re halfway there. But you’re missing important perspectives.

IBM calls the group needed for effective AI governance a “Dream Team”: a cross-disciplinary group that can spot problems homogeneous teams miss.

Who should be at the table:

Data scientists and engineers: understand how models work, where data bias comes from, and what is technically feasible. Without them, governance loses touch with reality.

Compliance and legal experts: understand regulations, decision documentation, and liability. They turn principles into enforceable rules.

Ethicists: consider societal impact, fairness, and long-term consequences. This perspective is hard to find but extremely valuable.

Business leaders: understand your company’s strategy and keep AI governance aligned with business goals. Governance that ignores how the business actually works will fail.

HR and organizational development: if you use AI to hire or manage employees, you need their input. They understand how decisions affect people.

Domain experts: in healthcare, you need doctors or nurses; in finance, you need people who understand financial systems. They know how AI plays out in the real world.

Risk management experts: think about second- and third-order effects, failure scenarios, and long-term organizational risk.

IBM’s research found that companies with cross-disciplinary AI governance teams identify problems 40% faster than those with single-discipline teams, and they develop better solutions.

The challenge is coordination. How do you involve this many perspectives without grinding everything to a halt?

The answer is clear ownership with broad input. One senior leader owns governance. They consult the cross-disciplinary team, make the decision, and communicate it back. That’s faster than pure consensus, and more robust than deciding alone.

8. The Seven Sutras: A Look at India’s AI Governance Philosophy

India’s new AI governance guidelines, released in November 2025, take a distinctive approach worth studying even if you don’t operate in India. They reflect a way of thinking that is spreading globally.

The “Seven Sutras” are the seven main ideas that make up the guidelines.

Do No Harm: The most important rule. Before using any AI, ask yourself, “What harm could this do?” Who’s at risk? What can we do to lessen it?

Fairness: AI systems should treat everyone fairly. Regular audits should check for unfair outcomes across demographic groups. Datasets should be diverse and representative.

Openness: Users should know how AI affects them. Decisions should be explainable, and processes should be documented. The goal isn’t perfect interpretability of every model; it’s meaningful visibility for stakeholders.

Accountability: Organizations need to make it clear who is in charge of AI systems. Someone should be responsible for the results. There should be ways for people who are affected to appeal decisions or report harm.

Inclusion: AI development and governance should involve a broad range of people. Development teams shouldn’t be homogeneous. Civil society, affected communities, and marginalized groups should all have a role in the governance process. When a team lacks key perspectives, those blind spots can become biases in the system.

Privacy: Personal data must be protected. Organizations should collect only what they need, use it only for the purposes they state, and keep it secure. Compliance with data protection laws such as the GDPR and India’s Digital Personal Data Protection Act, 2023 is expected.

Sustainability: AI systems should be built and run in an environmentally responsible way. That includes computational efficiency (lower energy costs and environmental impact), long-term maintainability, and alignment with society’s sustainability goals.

What makes India’s approach interesting is its balance. The guidelines emphasize innovation and “sandboxes” where businesses can test AI in safe settings, but they also require that risks be mitigated. You can be flexible, but not at the expense of safety.
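Computational efficiency becomes manageable once it is measured. A common back-of-the-envelope estimate multiplies hardware power draw by runtime and by the data center’s power usage effectiveness (PUE), then multiplies the resulting energy by the grid’s carbon intensity. Every number below is an illustrative assumption, not a measurement; real figures vary widely with hardware, utilization, and region.

```python
def training_footprint(gpus: int, watts_per_gpu: float, hours: float,
                       pue: float = 1.2, kg_co2_per_kwh: float = 0.4):
    """Rough energy (kWh) and emissions (kg CO2e) for a compute job.

    Assumes every GPU draws `watts_per_gpu` for the whole run; PUE scales
    IT power up to total facility power. Defaults are illustrative only.
    """
    kwh = gpus * watts_per_gpu * hours / 1000 * pue
    return kwh, kwh * kg_co2_per_kwh


kwh, co2 = training_footprint(gpus=8, watts_per_gpu=400, hours=24)
print(f"{kwh:.1f} kWh, {co2:.1f} kg CO2e")
```

With these assumed inputs the estimate prints 92.2 kWh and 36.9 kg CO2e. Even a rough figure like this lets teams compare design choices, such as a smaller model or a lower-carbon region, on a common scale.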

9. The Compliance Reality: The EU AI Act, New Rules, and What’s Next

AI compliance is no longer a buzzword. It’s a business necessity.

The EU AI Act is the most comprehensive AI regulation in force today. It sorts AI systems into tiers based on risk:

Prohibited AI: Certain uses, such as social scoring systems and some uses of emotion recognition, are banned outright. You can’t deploy them in the EU.

High-risk AI: Subject to extensive obligations, including detailed documentation, conformity assessments, human oversight, robust audit trails, and performance monitoring. Examples include AI used in hiring, credit decisions, law enforcement, immigration, and critical infrastructure.

Limited-risk AI: Subject to transparency requirements. People must be told when they are interacting with an AI system. Some documentation is required.

Minimal-risk AI: Requires little or no additional oversight.

The compliance burden for high-risk systems is substantial. You’ll need to document training data, maintain audit trails, ensure human review, and demonstrate that your system doesn’t discriminate. And this isn’t a one-time compliance check; it’s continuous monitoring and improvement.

The United States is moving in the same direction, but sector by sector.

The SEC has issued guidance on AI in financial decision-making. The FTC is investigating algorithmic bias. Congress has considered comprehensive AI legislation, but no federal law has passed yet, so the result is a patchwork of state laws and agency rules.
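A first-pass triage of your AI inventory against these tiers can be sketched as a lookup, provided anything unrecognized is routed to legal review rather than guessed. The use-case labels and tier assignments below are a simplified, hypothetical mapping drawn from the examples in this section; actual classification depends on the Act’s text and annexes and requires legal analysis.

```python
from enum import Enum


class Tier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"
    UNKNOWN = "needs legal review"


# Simplified, illustrative mapping; not a substitute for legal classification.
USE_CASE_TIERS = {
    "social_scoring": Tier.PROHIBITED,
    "hiring": Tier.HIGH,
    "credit_decision": Tier.HIGH,
    "law_enforcement": Tier.HIGH,
    "immigration": Tier.HIGH,
    "critical_infrastructure": Tier.HIGH,
    "customer_chatbot": Tier.LIMITED,
    "spam_filter": Tier.MINIMAL,
}


def triage(inventory: dict) -> dict:
    """Map each system to a tier; unrecognized use cases default to legal review."""
    return {system: USE_CASE_TIERS.get(use_case, Tier.UNKNOWN)
            for system, use_case in inventory.items()}


inventory = {
    "resume-screener": "hiring",
    "support-bot": "customer_chatbot",
    "fleet-optimizer": "route_planning",
}
for system, tier in triage(inventory).items():
    print(f"{system}: {tier.value}")
```

Defaulting to “needs legal review” is deliberate: a triage tool that silently labels unknown systems as minimal-risk would hide exactly the cases that need attention.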

It’s clear that rules about AI will keep getting stricter.

Companies that build governance infrastructure now won’t have to scramble later. They won’t be retrofitting audit trails onto systems never designed to be audited. They’ll already have the documentation regulators want, and they’ll be ready for whatever comes next.

10. Detecting, auditing, and stopping bias before it hurts

Bias in AI isn’t a technical bug you can fix once and forget. It’s a systemic problem that must be managed continuously.

There are many places where bias can come from:

Biased training data: If your training data reflects past discrimination, your model will learn it and may amplify it. If most of your hiring data comes from decisions made by biased reviewers, your AI will pick up their biases.

Data imbalance: If your dataset doesn’t have enough people from certain demographic groups, your model won’t work as well for those groups. A facial recognition system that mostly learns from light-skinned faces will not work as well on darker-skinned faces.

Flawed algorithms: Sometimes the algorithm itself is biased because of how it was made or what it gives priority to.

Bias in data collection: How was the data gathered? Who was a part of it? If the collection process left out some groups, bias is already there.

This is how to find and stop bias in a practical way:

Be proactive: Don’t wait for bias to hurt someone. Define bias metrics before deployment. Build dashboards that show how the model performs for different groups. Compare accuracy, precision, recall, and false positive and false negative rates across protected characteristics such as race, gender, and age. Do this continuously, not just once at launch.

Audit regularly: Regular audits catch problems as they emerge. A model that performs well at first can develop bias as the data distribution shifts. Schedule formal audits every three, six, or twelve months, depending on the level of risk. Red-team your systems: deliberately probe for ways they could discriminate.

Test across contexts: Bias isn’t always obvious. Test your model across demographic groups, geographic regions, and situations. A hiring algorithm can be fair overall but unfair to older workers. A credit model can work well for mortgages but not for auto loans.

Include diverse perspectives: Homogeneous teams often miss obvious biases. Bring people from affected communities into the decision-making process. Ask them: What could go wrong? What concerns you? This catches things data alone can’t.

Fix the data: When bias is found, the data is often the root cause. Curate training data more carefully. Rebalance it so all demographic groups are fairly represented. Where appropriate, use synthetic data to supplement underrepresented groups. Remove proxies for protected characteristics.

Red-line certain features: Some features should never be used in certain contexts. Don’t train a hiring algorithm on candidate names, which can act as a proxy for race. If zip code acts as a proxy for race, keep it out of your credit model.

Build in explainability: Knowing why a model made a choice lets you check that choice for bias. Use explainability methods to show which factors drove each decision; this makes bias easier to spot.

Monitor continuously: Bias doesn’t only arise during training. If your model’s predictions drive real-world decisions, and those outcomes feed back into the next round of training data, you can create feedback loops that amplify bias. Track decision outcomes continuously, and investigate if a particular group is consistently treated unfairly.
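The per-group metrics described above can be computed with a few lines of standard-library Python. This sketch assumes binary labels and predictions with one group attribute per record (the records are invented for illustration). It reports each group’s accuracy, false positive rate, and false negative rate, plus the largest gap between groups; which gap matters most depends on the harm at stake.

```python
from collections import defaultdict


def group_metrics(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        # "t"/"f" = prediction correct or not; "p"/"n" = predicted positive or negative.
        key = ("t" if y_true == y_pred else "f") + ("p" if y_pred else "n")
        counts[group][key] += 1
    metrics = {}
    for group, c in counts.items():
        total = sum(c.values())
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        metrics[group] = {
            "accuracy": (c["tp"] + c["tn"]) / total,
            "fpr": c["fp"] / negatives if negatives else 0.0,
            "fnr": c["fn"] / positives if positives else 0.0,
        }
    return metrics


def max_gap(metrics, name):
    """Largest difference in one metric between any two groups."""
    values = [m[name] for m in metrics.values()]
    return max(values) - min(values)


records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0),
]
m = group_metrics(records)
for group, vals in sorted(m.items()):
    print(group, {k: round(v, 2) for k, v in vals.items()})
print("FNR gap:", round(max_gap(m, "fnr"), 2))
```

In a hiring screen where 1 means “qualified,” group B’s higher false negative rate means qualified candidates from that group are rejected more often, which is the kind of pattern a dashboard should surface.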

11. The Human-in-the-Loop: Why You Can’t Make Accountability Automatic

Here’s a tempting thought: make an AI governance system that runs itself. Let the algorithms keep an eye on each other.

Don’t do that.

Automation is useful for monitoring, alerting, and initial screening. But final accountability decisions must rest with people. This is called “human-in-the-loop” governance.

Why?

Because some decisions require judgment. An AI model flags a loan applicant as high-risk based on patterns in the data. Is that prediction sound, or biased? A machine can estimate risk; a person has to decide whether the outcome is fair. A monitoring system reports that a hiring algorithm rejects more women than men for a particular role. Is that discrimination, or an accurate reflection of who works in that field? Someone needs to investigate and decide.

The best organizations build processes with these properties:

Human override is possible and encouraged. People can appeal an AI decision they disagree with, and they can escalate when they see a pattern that worries them.

Explanation is required. AI outputs come with explanations people can understand. Not “the algorithm said so,” but “here’s the reasoning, here’s the data that drove it, and here’s what we did to check for bias.”

Diverse perspectives review high-stakes decisions. Before an AI system is used for important decisions about hiring, credit, healthcare, or similar areas, a diverse group reviews it. They ask tough questions, test assumptions, and make sure oversight is more than a single person’s sign-off.

Escalation paths are clear. If an AI system starts behaving strangely, people know how to escalate it. If a pattern of bias emerges, there is a clear route to the decision-makers who can pause or change the system.

People build AI literacy. Your decision-makers and governance team need to understand how AI systems work, where their limits are, and where bias and other problems can creep in. They don’t need to be data scientists. They need to know enough to ask good questions.
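Here is one way to wire override and escalation into software. This minimal Python sketch rests on a few assumptions. The model returns a prediction with a confidence score, and each case is tagged as high-stakes or not. Only confident, low-stakes cases are finalized automatically. Everything else goes to a human review queue, and a reviewer who overrides the model must give a written reason. The class names, fields, and the 0.9 confidence floor are hypothetical illustrations, not a standard.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelOutput:
    case_id: str
    prediction: str    # e.g. "approve" / "deny" (hypothetical labels)
    confidence: float  # model's probability for its own prediction
    high_stakes: bool  # e.g. hiring, credit, or healthcare decisions


@dataclass
class FinalDecision:
    case_id: str
    outcome: str
    decided_by: str              # "model" or a reviewer ID
    overridden: bool = False     # True if a human changed the model's answer
    reason: Optional[str] = None


@dataclass
class ReviewRouter:
    confidence_floor: float = 0.9  # hypothetical threshold; tune per system
    review_queue: List[ModelOutput] = field(default_factory=list)

    def route(self, out: ModelOutput) -> Optional[FinalDecision]:
        """Auto-finalize only confident, low-stakes cases; queue the rest."""
        if out.high_stakes or out.confidence < self.confidence_floor:
            self.review_queue.append(out)
            return None
        return FinalDecision(out.case_id, out.prediction, decided_by="model")

    def human_decide(self, out: ModelOutput, reviewer: str, outcome: str,
                     reason: Optional[str] = None) -> FinalDecision:
        """Record a reviewer's decision on a queued case.
        Overriding the model requires a written reason."""
        overridden = outcome != out.prediction
        if overridden and not reason:
            raise ValueError("An override must include a written reason")
        self.review_queue.remove(out)  # assumes the case was queued by route()
        return FinalDecision(out.case_id, outcome, decided_by=reviewer,
                             overridden=overridden, reason=reason)
```

The design choice is that high-stakes cases always go to a person, however confident the model is. Every human decision, including its reason, is captured in a `FinalDecision` record that can feed the audit trail.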

IBM’s research found that companies with strong human-in-the-loop governance achieve better ethical outcomes than companies that try to automate governance. The human element isn’t a bug; it’s a feature.

12. How to Put AI Governance into Action in Your Business

Theory matters, but implementation is what changes outcomes. Here is a step-by-step plan for setting up AI governance in your business.

Step 1: Align your leadership (Weeks 1–2)

Make sure your C-suite is aligned. AI governance only works if top leadership owns it: the CEO and the board need to care. Without that, people will treat governance as an IT cost, and it will be watered down whenever the pressure to innovate rises. To do this, set up a meeting with your leadership team and share the key facts: the finding that 60% of AI projects fail because of poor governance, the EU AI Act’s compliance requirements, and broader regulatory trends. Make the case that good governance is both a legal requirement and a competitive advantage.

Step 2: Define what governance means for your business (Weeks 2–4)

Don’t copy another company’s governance framework; build your own. Which frameworks fit the regulations that apply to you? Most businesses should combine the NIST AI Risk Management Framework, ISO/IEC 42001, and any applicable regulations (such as the EU AI Act if you have European customers, or sector-specific rules in finance, healthcare, and so on). Assemble your governance team and review the frameworks. Then write a definition of AI governance that fits your business, and capture it in a one-page statement that everyone can read and remember.

Step 3: Assess your current state (Weeks 4–8)

You can’t improve what you don’t measure. Assess where your organization stands across the key governance areas: data quality and management; model documentation and versioning; bias detection and monitoring; record-keeping and audit trails; security and access controls; compliance mechanisms; and roles and responsibilities. Build a simple maturity model with three or four levels, such as Initial, Developing, Mature, and Leading. Score your organization on each dimension and write down the gaps. Those gaps are your to-do list.
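The scoring exercise is simple enough to keep in a script or spreadsheet. This Python sketch uses the four levels named above and the assessment areas from this step. It compares each dimension against a target level and lists the gaps, largest first. The scores in the usage example are made-up illustrations, not benchmark data, and setting the target to “Mature” everywhere is an assumption you would adjust.

```python
from enum import IntEnum


class Maturity(IntEnum):
    INITIAL = 1
    DEVELOPING = 2
    MATURE = 3
    LEADING = 4


def gap_report(current: dict, target: dict) -> list:
    """Return (dimension, levels_behind) for every dimension below its
    target, largest gap first (ties keep their original order)."""
    gaps = [(dim, target[dim] - level)
            for dim, level in current.items() if level < target[dim]]
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Illustrative self-assessment scores (hypothetical, not real data)
current = {
    "Data quality and management": Maturity.DEVELOPING,
    "Model documentation and versioning": Maturity.INITIAL,
    "Bias detection and monitoring": Maturity.INITIAL,
    "Records and audit trails": Maturity.DEVELOPING,
    "Security and access controls": Maturity.MATURE,
    "Compliance mechanisms": Maturity.DEVELOPING,
    "Roles and responsibilities": Maturity.INITIAL,
}
target = {dim: Maturity.MATURE for dim in current}

if __name__ == "__main__":
    for dim, gap in gap_report(current, target):
        print(f"{dim}: {gap} level(s) behind")
```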

Step 4: Define roles and responsibilities (Weeks 8–12)

Create a RACI matrix (Responsible, Accountable, Consulted, Informed) that shows who owns what across your AI lifecycle. Who approves models for production? Who checks for bias? Who acts when a problem is reported? Who escalates to leadership? Talk to people from data science, compliance, legal, risk, the business, and IT, and map how decisions on AI projects are actually made. Then document it, communicate it, and hold people accountable for it.
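A RACI matrix is easy to store as data and check automatically. The minimal Python sketch below applies two widely used RACI conventions: every activity has exactly one Accountable role and at least one Responsible role. A role can hold combined codes such as `"AR"`. The activities and role names are hypothetical. The third activity deliberately has no Accountable owner, to show what the check catches.

```python
# Hypothetical activities and roles; each code uses the letters R, A, C, I.
RACI_MATRIX = {
    "Approve model for production": {
        "Head of AI governance": "A",
        "Data science lead": "R",
        "Compliance": "C",
        "Legal": "C",
        "Business owner": "I",
    },
    "Run bias checks": {
        "Data science lead": "AR",  # one role can be Accountable and Responsible
        "Risk": "C",
        "Head of AI governance": "I",
    },
    "Respond to reported problems": {
        "Product owner": "R",
        "IT operations": "R",
        "Head of AI governance": "I",  # no Accountable role: flagged below
    },
}

VALID_CODES = set("RACI")


def validate_raci(matrix: dict) -> list:
    """Return a list of human-readable problems found in the matrix."""
    problems = []
    for activity, assignments in matrix.items():
        for role, code in assignments.items():
            if not code or set(code) - VALID_CODES:
                problems.append(f"{activity}: invalid code {code!r} for {role}")
        accountable = [r for r, c in assignments.items() if "A" in c]
        responsible = [r for r, c in assignments.items() if "R" in c]
        if len(accountable) != 1:
            problems.append(f"{activity}: expected exactly one Accountable, "
                            f"found {len(accountable)}")
        if not responsible:
            problems.append(f"{activity}: no Responsible role")
    return problems
```

Running `validate_raci(RACI_MATRIX)` returns a single problem, for “Respond to reported problems.” That is exactly the kind of ownership gap that lets an issue go unaddressed.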

Step 5: Pilot one use case (Weeks 12–16)

Don’t try to govern all of your AI at once. Pick one project as a pilot, ideally one with moderate risk and clear business value. This is your proof of concept for AI governance. Work through the governance processes carefully and document everything. Learn what works and what’s hard, then iterate. Once you’ve run one project successfully, you’ll have a playbook for the next.

Step 6: Build governance checkpoints into your development process (Weeks 16–24)

Governance shouldn’t be bolted onto AI after it’s built. It should be part of the process from the start. Create checkpoints:

Intake: A governance review before a project starts

Development: Regular governance reviews as the project progresses

Pre-deployment: A final compliance and bias audit before any system goes live

Post-deployment: Regular audits and continuous monitoring

To put this in place, add governance gates to your AI project workflow and train teams on the process. Then enforce it: a project can’t move forward until it passes its governance gate. That isn’t punishment; it’s risk management.
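Governance gates behave like a small state machine: a project advances only when every exit criterion for its current stage has been met. The Python sketch below shows that idea. The stage names follow the checkpoints above. The checklist items (`risk_tier_assigned`, `bias_audit_passed`, and so on) are hypothetical placeholders, not a standard. Production is treated as a terminal stage that is governed by ongoing monitoring and audits rather than by a further gate.

```python
STAGES = ["intake", "development", "pre_deployment", "production"]

# Hypothetical exit criteria: what must be done to LEAVE each stage.
EXIT_CRITERIA = {
    "intake": {"risk_tier_assigned", "owner_named"},
    "development": {"data_sources_documented", "interim_bias_review"},
    "pre_deployment": {"compliance_audit_passed", "bias_audit_passed",
                       "documentation_complete"},
}


class GateError(Exception):
    """Raised when a project tries to pass a gate it hasn't cleared."""


def advance(stage: str, completed: set) -> str:
    """Return the next stage if all exit criteria for `stage` are met."""
    if stage == STAGES[-1]:
        raise GateError("Already in production; governed by monitoring and audits")
    missing = EXIT_CRITERIA[stage] - completed
    if missing:
        raise GateError(f"Blocked at {stage}: missing {sorted(missing)}")
    return STAGES[STAGES.index(stage) + 1]
```

For example, `advance("intake", {"risk_tier_assigned", "owner_named"})` returns `"development"`. Calling `advance("pre_deployment", {"bias_audit_passed"})` raises `GateError` and names the missing items, so the block is explicit rather than silent.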

Step 7: Set up audit trail systems (Weeks 24–32)

You’ve defined the governance rules; now make them verifiable. Set up logging and documentation systems that record: all data used to build the model; model versions and parameters; training and testing results; performance metrics across demographic groups; who reviewed the model and when; any changes to the model; and the reasoning behind production decisions. Work with your data and IT teams to build the logging infrastructure, choosing tools that fit your existing technology stack. Train teams on documentation standards, and make sure they follow them.
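One common way to make an audit trail tamper-evident is hash chaining. Each entry stores a SHA-256 hash of the previous entry, so editing any past record breaks verification. The Python sketch below keeps entries in memory and uses illustrative event names and detail fields, all of which are assumptions. A real deployment would persist entries to write-once storage. Note that hash chaining only detects tampering; it does not prevent it.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log; each entry includes the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, actor: str, event: str, details: dict) -> dict:
        """Append a time-stamped entry. `details` must be JSON-serializable."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "event": event,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash a canonical JSON encoding of every field except "hash" itself
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check the links between entries."""
        prev = GENESIS_HASH
        for e in self.entries:
            if e["prev_hash"] != prev or e["hash"] != self._digest(e):
                return False
            prev = e["hash"]
        return True
```

Usage might look like `trail.record("alice", "model_trained", {"model_version": "1.2.0"})` followed by `trail.record("bob", "bias_review", {"result": "passed"})`. If anyone later edits the first entry’s `model_version`, `trail.verify()` returns `False`.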

Step 8: Build monitoring dashboards (Weeks 32–40)

Governance isn’t a one-time exercise; it’s ongoing. You need visibility into how your AI systems are performing. Build or buy monitoring dashboards that show: model accuracy over time; bias metrics across demographic groups; anomalies or unexpected behavior; regulatory compliance status; and system usage and access logs. Define KPIs for each AI system, choose a monitoring tool or build your own dashboards, set alert thresholds, and decide what happens when an alert fires.
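Alert thresholds can be written down as data and checked against each metrics snapshot. In this Python sketch, every threshold says whether the metric must stay above a floor (`"min"`) or below a ceiling (`"max"`). A metric that stops reporting is also treated as an alert. The metric names and limits are hypothetical examples, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "min": alert if value < limit; "max": alert if value > limit

    def __post_init__(self):
        if self.direction not in ("min", "max"):
            raise ValueError(f"direction must be 'min' or 'max', got {self.direction!r}")


def check_alerts(snapshot: dict, thresholds: list) -> list:
    """Return one alert message per breached or missing metric."""
    alerts = []
    for t in thresholds:
        value = snapshot.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data reported")  # silence is a problem too
            continue
        breached = value < t.limit if t.direction == "min" else value > t.limit
        if breached:
            alerts.append(f"{t.metric}: {value} breaches {t.direction} limit {t.limit}")
    return alerts


# Hypothetical KPIs for one AI system
THRESHOLDS = [
    Threshold("accuracy", 0.85, "min"),
    Threshold("adverse_impact_ratio", 0.8, "min"),
    Threshold("drift_score", 0.2, "max"),
]
```

With a snapshot of `{"accuracy": 0.88, "adverse_impact_ratio": 0.72, "drift_score": 0.05}`, only the adverse impact ratio triggers an alert. Routing that alert to a named owner is what turns the dashboard into governance.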

Step 9: Establish a governance council (Weeks 40–44)

Set up a standing group that meets regularly (monthly or quarterly, depending on how fast your AI adoption is moving) to discuss governance issues, approve new projects, and set governance policy. To do this, invite representatives from different departments and include the senior executive accountable for AI governance. Define the council’s scope, write a charter, meet on a regular schedule, and communicate decisions clearly.

Step 10: Train people and keep iterating (Ongoing)

Governance works when people understand and support it. Make sure everyone who works with AI, from data scientists to product managers to executives, knows the rules. Create training materials, run workshops, and make it easy for people to give feedback and suggest improvements. Keep refining your processes and your governance framework based on what you learn.

13. Seven Reasons AI Governance Efforts Stall, and How to Avoid Them

We’ve already covered how governance can fragment and break apart. But governance projects fail for other reasons too.

Starting too big: Companies try to govern all their AI at once and get overwhelmed. The scope feels unmanageable, and progress stalls.

Fix: Start with one or two use cases. Build momentum, then expand in small steps.

Separating governance from business value: Governance is framed as a “compliance burden” instead of a “path to better AI that drives business value.” Teams see it as an obstacle and work around it.

Fix: Center governance on business goals. “This will help us move into regulated markets because we’ll be compliant.” “This will help us deploy faster because we won’t have to redo work.” Make the business case explicit.

Lacking skilled people: Good governance requires expertise in regulation, data science, ethics, and project management. Many companies don’t have it in-house.

Fix: Buy or build. Bring in outside consultants at first, invest in training, and partner with universities or research centers.

Treating governance as an IT issue: Governance is an organization-wide responsibility. When IT keeps it to itself, business teams don’t take it seriously. When it gets stuck in compliance, innovation teams see it as a roadblock.

Fix: Make it clear who owns governance, and make it a shared responsibility. Involve business teams from the start.

Building one-size-fits-all governance: You create rules that worked for your first few AI projects and then apply them to every project. But not all AI carries the same risk. A hiring algorithm and a recommendation engine don’t need the same controls.

Fix: Make governance risk-based. Low-risk projects get lighter governance; high-risk projects get strict governance. Calibrate to actual risk, not blanket rules.

Policy and practice don’t match: You write governance policies, but people don’t follow them and leaders don’t enforce them. The policies stop being requirements and become mere advice.

Fix: Operationalize your policies. Don’t just write them down; build them into your systems and workflows. Enforce them, audit compliance, and hold leaders accountable.

Struggling to govern legacy AI systems that weren’t built with governance in mind: You have AI systems in production without clear ownership, audit trails, or proper documentation, and retrofitting governance onto them is hard.

Fix: Accept that some legacy systems will stay in place for now. But make sure new systems are governance-ready from the start, and gradually replace noncompliant systems with compliant ones.
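The risk-based fix for one-size-fits-all governance can be made concrete with a tiering rule that maps each use case to a set of required controls. This Python sketch is an illustration under stated assumptions. The high-stakes domains echo this guide’s examples (hiring, credit, healthcare), and the tier rules and control lists are hypothetical. It is loosely in the spirit of risk-based regulation such as the EU AI Act, but it is not a legal classification.

```python
from dataclasses import dataclass

# Hypothetical: domains this sketch treats as high-stakes
HIGH_STAKES_DOMAINS = {"hiring", "credit", "healthcare"}

# Hypothetical control sets; each tier includes everything in the tier below
CONTROLS = {"low": ["named owner", "basic documentation"]}
CONTROLS["medium"] = CONTROLS["low"] + ["pre-deployment bias check", "quarterly review"]
CONTROLS["high"] = CONTROLS["medium"] + [
    "human review of decisions",
    "full audit trail",
    "continuous bias monitoring",
    "governance council sign-off",
]


@dataclass
class UseCase:
    name: str
    domain: str
    decides_about_people: bool  # does the output drive consequential decisions about individuals?


def risk_tier(uc: UseCase) -> str:
    """High if a high-stakes domain AND consequential for individuals;
    medium if either condition holds; low otherwise."""
    high_stakes = uc.domain in HIGH_STAKES_DOMAINS
    if high_stakes and uc.decides_about_people:
        return "high"
    if high_stakes or uc.decides_about_people:
        return "medium"
    return "low"


def required_controls(uc: UseCase) -> list:
    return CONTROLS[risk_tier(uc)]
```

Under these rules, a résumé screener (`UseCase("resume screener", "hiring", True)`) lands in the high tier with all eight controls. A product recommendation engine that makes no consequential decisions about individuals lands in the low tier with two.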

14. Five Common Questions About AI Governance

Q1: What is the biggest consequence of assigning AI governance to too many owners?

A: The biggest consequence is diluted accountability. When several teams share responsibility for AI governance, decisions get harder to make and no one feels in charge. Problems don’t get escalated. The governance mandate weakens because different teams have competing goals. The result is slower decisions, inconsistent standards, and, eventually, governance failures. Studies show that companies with a single senior leader accountable for AI governance outperform those with multiple owners. The answer is clear ownership with cross-functional input: one person owns governance and makes the final call after consulting others.

Q2: What does IBM say is necessary for effective AI governance in a company?

A: IBM highlights three essentials. First, a clear mandate from senior leadership: the CEO and board must treat AI governance as important for it to work. Second, a governance team that brings together different skills, such as data science, compliance, law, ethics, business, and risk. Homogeneous teams have blind spots. Third, accountability structures in which someone is clearly responsible for governance decisions. IBM’s research shows that 60% of executives whose organizations have clear AI governance champions say their companies outperform those without them. Processes and tools matter, but so does the human element: clear leadership, diverse perspectives, and clear responsibility.

Q3: What is an AI audit trail, and why is it important?

A: An AI audit trail is a complete, time-stamped record of everything that happened while an AI system was built and used. It tracks input data, data transformations, model parameters, decision logic, outputs, who accessed the system, when it was changed, and what resulted. It matters because it creates accountability: if something goes wrong, regulators or auditors can trace exactly what happened and why. Audit trails also help detect bias. If a model starts making unfair decisions, the trail shows when the change began. Regulations such as the EU AI Act require record-keeping for high-risk systems, and stakeholders increasingly expect audit trails as a baseline for responsible AI. Companies without them can’t demonstrate that they have used AI responsibly, which exposes them to legal and regulatory risk.

Q4: Why do so many AI governance initiatives stall, and what is the main reason?

A: The biggest reason is a mismatch between governance and business value. When governance is framed as a “compliance burden” or “IT overhead,” teams see it as an obstacle and work around it. People don’t treat governance as important because it doesn’t seem to affect business results. The answer is to reframe governance around business value: “This helps us deploy faster.” “This helps us move into regulated markets.” “This helps us build customer trust.” When governance is tied to strategy and business results, it gets resources, leadership attention, and team buy-in. Without that alignment, governance remains an aspiration rather than a reality.

Q5: What are the five pillars of AI ethics, and how do they work together?

A: The five pillars are accountability, transparency, fairness, reliability, and privacy and security. They are interconnected. Accountability means being clear about who is responsible for AI outcomes. Transparency means making AI decisions understandable. Fairness means treating people equitably, without unjust discrimination. Reliability means performing consistently. Privacy and security mean protecting sensitive information. You can’t address one pillar in isolation. Fair AI that isn’t transparent won’t earn trust. Transparent AI without accountability explains its decisions but leaves no one answerable for them. AI that violates privacy may be a technical success, but it is a failure for society. Good AI ethics requires addressing all five pillars together and embedding them in the organization’s culture, policies, and governance structures.

15. Conclusion: The Era of “Trust Me” AI Is Over

We began with a scenario: your company deployed a biased hiring algorithm and had no way to show what went wrong or why.

That situation is becoming increasingly unacceptable.

Regulators are tightening the rules. Customers want transparency. Employees want to know whether their company’s AI is ethical. Investors are scrutinizing AI governance as a risk factor. The era of ungoverned AI in business is coming to an end.

But here’s the good news: organizations that get ahead on governance don’t just cope with this shift. They move faster. They avoid major failures. They expand into regulated markets more easily. They attract talent. They earn their customers’ trust.

Governance isn’t about limiting AI. It’s about building AI systems that are safe, fair, auditable, and aligned with human values. That’s good for innovation.

The frameworks exist: the EU AI Act, India’s guidelines, and the NIST AI Risk Management Framework. The methods are proven. The tools for auditing, bias detection, and governance are available. What’s needed is organizational commitment.

If you build AI, you need AI governance. Not to satisfy auditors, though it does that too, but because building AI without governance means running a system you can’t fully understand or control. That’s a risk worth avoiding.

Start now. Choose a framework. Assemble a team. Define roles. Pick a use case. Learn. Repeat. Make governance part of your organization’s DNA. Your future self will thank you when regulators ask questions and you can give clear, complete answers; when you find bias and can fix it quickly and thoroughly; and when someone asks how the AI made a decision and you can show them.