Edge AI: Using Phones and IoT Devices to Run Models

Your phone unlocks the moment it sees your face. Your smartwatch can tell when your heart is beating too fast and warn you before you even feel dizzy. A factory camera can spot a defective item on the assembly line in less than a second. These devices aren’t asking the cloud for help; they’re making decisions on their own, on the spot.

Welcome to the world of Edge AI, where AI isn’t just in a faraway data center; it’s also on your wrist, in your pocket, or watching your home. It’s quick, it’s private, and it changes how we use technology every day.

If you’ve ever wondered how your phone can understand you when you’re offline, or how a security camera can tell the difference between your cat and a burglar without sending video to the cloud, you’re in the right place. We’ll explain Edge AI in a way anyone can follow, no PhD required. You’ll learn what it is, why it matters, how businesses are using it now, and what the future holds. By the end, you’ll understand why this technology is quietly changing everything from healthcare to smart cities.

1. What is Edge AI, exactly? (And Why You Should Care)

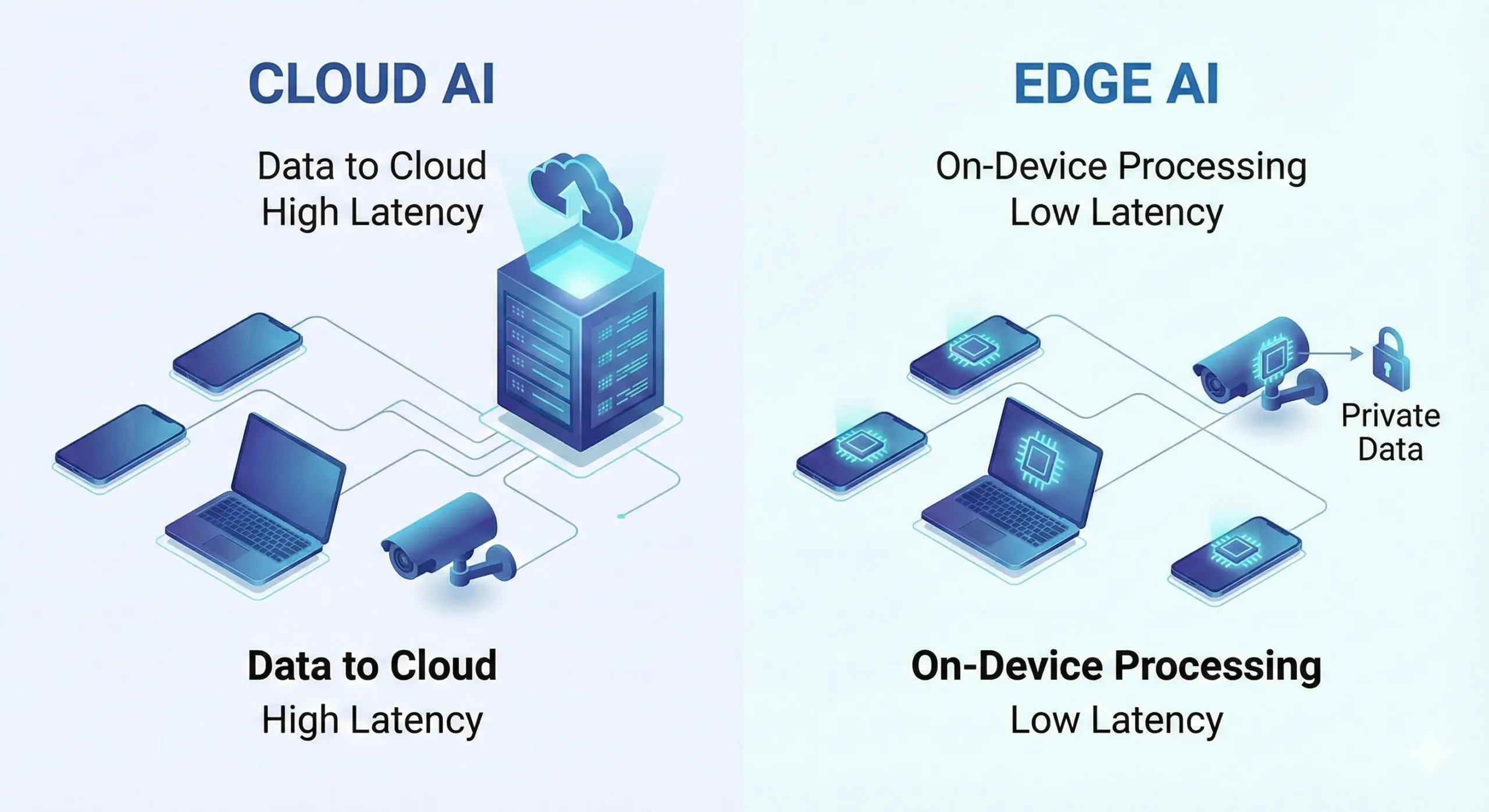

Let’s get started. Edge AI is a type of artificial intelligence that doesn’t use cloud servers to do the heavy lifting. Instead, it runs directly on devices like phones, smartwatches, security cameras, factory sensors, and cars.

Traditional cloud AI is like calling a very smart friend who lives far away whenever you need help. You explain the problem, wait for them to think it over, and then wait for their answer to come back. Edge AI is like having that smart friend live with you: always available, answering right away, and keeping the conversation private.

The “edge” refers to where data is created: the outer edge of the network, close to you. Your phone’s camera, your fitness tracker’s heart rate sensor, and your car’s radar are all edge devices. Edge AI means running AI on these devices instead of in the cloud.

Why This Is More Important Than You Think

The problem is that we have too much data. One widely cited forecast estimated that IoT devices would generate more than 79 zettabytes of data by 2025. That number is so big it barely means anything, but in practical terms it means we can’t send all that data to the cloud. It would take too long, cost too much, and, to be honest, most of it isn’t worth sending.

Edge AI fixes this by processing data on the spot. Your security camera doesn’t send hours of footage showing nothing. It only alerts you when it sees something suspicious. Your smartwatch doesn’t send every heartbeat to the cloud. Instead, it looks for patterns locally and only tells your doctor when something is wrong.
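The camera example boils down to a simple filtering rule: run detection locally on every frame, but only upload the frames around a detection. The sketch below assumes a hypothetical `is_suspicious` detector (stubbed with a lambda) and a one-frame context window on either side; real cameras buffer whole clips, but the bandwidth logic is the same.

```python
# Minimal sketch of edge-side filtering: a camera runs a (hypothetical) local
# detector on every frame but only queues frames for upload when it fires.
from typing import Callable, List, Sequence, Set


def frames_to_upload(frames: Sequence[str],
                     is_suspicious: Callable[[str], bool],
                     context: int = 1) -> List[int]:
    """Indices of frames to send: each detection plus `context` frames either side."""
    keep: Set[int] = set()
    for i, frame in enumerate(frames):
        if is_suspicious(frame):
            keep.update(range(max(0, i - context), min(len(frames), i + context + 1)))
    return sorted(keep)


frames = ["empty"] * 8 + ["person"] + ["empty"] * 11  # 20 frames, one event
sent = frames_to_upload(frames, lambda f: f == "person")
print(sent, f"{1 - len(sent) / len(frames):.0%} of frames never leave the camera")
# [7, 8, 9] 85% of frames never leave the camera
```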

2. The Secret Sauce: What Edge AI Really Does

Okay, let’s take a look under the hood. How does AI work on a device that fits in your pocket when traditional AI models need huge servers?

Models that are smaller and smarter

Shrinking AI models to fit on tiny chips was the first big step forward. Keep in mind that your phone has nowhere near the processing power of a data center. Large AI models can take up hundreds of gigabytes. Edge AI models? Some are only a few megabytes.

Researchers designed architectures such as MobileNet and other “lightweight” neural networks specifically for edge devices. These models are built for efficiency from the ground up; they aren’t just scaled-down versions of bigger ones.

Model Compression: The Magic Tricks

Even models that are already small need to get smaller. That’s where optimization techniques come in:

Quantization: Think of it as saving a high-resolution photo as a smaller file. Floating-point numbers are very precise but take up a lot of memory, so quantization converts a model’s 32-bit floating-point numbers into 8-bit integers. This makes the model four times smaller and can speed up inference by up to 69%. Most of the time, you won’t even notice a drop in accuracy.
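To make the 4x figure concrete, here is a minimal sketch of per-tensor affine int8 quantization in plain Python. It assumes a single scale and zero point for the whole tensor; the function names, sample weights, and parameter count are hypothetical, and real converters (such as TensorFlow Lite’s) also use calibration data and often per-channel scales.

```python
# Minimal sketch of post-training affine (asymmetric) int8 quantization.
# Real toolchains do this per tensor or per channel with calibration data;
# this only illustrates the arithmetic.
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map float weights to int8 values plus a (scale, zero_point) pair."""
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)  # range must include 0
    scale = (hi - lo) / 255.0 or 1.0  # 256 int8 levels span the float range
    zero_point = round(-128 - lo / scale)  # int8 value that represents 0.0
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from int8 values."""
    return [(v - zero_point) * scale for v in q]


weights = [-0.51, 0.0, 0.23, 0.77, -0.12]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(max_error, 4))  # here the error stays within half a quantization step

# Storage: 4 bytes per float32 weight vs 1 byte per int8 weight -> 4x smaller.
n_params = 3_500_000  # hypothetical model size
print(n_params * 4 / 1e6, "MB ->", n_params * 1 / 1e6, "MB")
```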

Pruning: This removes the “dead weight” in a neural network. Think of a tree with some branches that don’t bear fruit: you cut them off. Pruning applies the same idea, removing connections in the network that contribute little to the final result. You can often cut 30–50% of a model without hurting its performance, and in some cases as much as 90%.
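A minimal sketch of the simplest variant, unstructured magnitude pruning, in plain Python. It assumes that the smallest-magnitude weights matter least, which is a common heuristic rather than the only approach; in practice the pruned model is usually fine-tuned afterwards to recover accuracy. The function name and sample weights are illustrative.

```python
# Minimal sketch of unstructured magnitude pruning: zero out the fraction
# of weights with the smallest absolute values. Names are illustrative.
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Return a copy of `weights` with the smallest `sparsity` fraction set to 0."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices of weights sorted by magnitude, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


layer = [0.8, -0.02, 0.35, 0.01, -0.6, 0.05, -0.03, 0.4, 0.002, -0.9]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)
# [0.8, 0.0, 0.35, 0.0, -0.6, 0.0, 0.0, 0.4, 0.0, -0.9]
```

Zeroed weights only save space and time if the runtime stores and executes sparse tensors efficiently, which is why structured pruning (removing whole channels) is often preferred on edge hardware.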

Knowledge Distillation: A large, accurate “teacher” model trains a smaller “student” model to mimic its behavior. The student learns the important patterns without having to capture every detail. It’s like learning guitar from Jimi Hendrix: you won’t be as good, but you’ll get pretty close, and you won’t need decades of practice.
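One common way to train the student, sketched below under stated assumptions, is the Hinton-style soft-target loss: the student matches the teacher’s temperature-softened probabilities while still learning from the true labels. The temperature, the blending weight `alpha`, and the sample logits are illustrative values, not recommendations.

```python
# Minimal sketch of a knowledge-distillation loss (soft targets + hard labels).
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      true_label: int, temperature: float = 4.0,
                      alpha: float = 0.5) -> float:
    """Blend of (a) KL divergence to the teacher's softened output and
    (b) ordinary cross-entropy against the true label."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[true_label])
    # Multiplying by T^2 keeps the soft-target term's scale comparable across temperatures.
    return alpha * (temperature ** 2) * kl + (1 - alpha) * hard


teacher = [6.0, 2.0, 1.0]
print(distillation_loss([5.0, 2.0, 1.0], teacher, true_label=0))  # close to teacher: small loss
print(distillation_loss([1.0, 5.0, 2.0], teacher, true_label=0))  # disagrees: larger loss
```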

The Evolution of Hardware

The chips themselves are also getting smarter. Today’s smartphones include Neural Processing Units (NPUs), specialized processors that handle AI tasks without draining your battery. Examples of hardware built specifically for running AI at the edge include Google’s Edge TPU, Qualcomm’s AI Engine, and Apple’s Neural Engine.

These chips can perform trillions of operations per second while drawing only a few watts. That’s enough to run complex computer vision models that would have needed a desktop GPU just five years ago.

3. Edge AI Frameworks: The Tools That Make It Work

When you talk about Edge AI, you have to talk about the software frameworks that make it work. These are the tools that developers use to turn their trained models into something that can run on your phone or other smart device.

| Framework | Best For | Key Feature |
| --- | --- | --- |
| TensorFlow Lite | Mobile & embedded devices | Google’s standard; great optimization and hardware acceleration. |
| PyTorch Mobile | Research & rapid prototyping | Keeps PyTorch’s dynamic nature; easy transition from development to deployment. |
| AI Edge Torch | PyTorch users needing speed | Converts PyTorch models to TFLite format. |
| ONNX Runtime | Cross-platform flexibility | Works with models from various frameworks; balances speed and flexibility. |

TensorFlow Lite: The Standard in the Business

TensorFlow Lite, Google’s solution for mobile and embedded devices, has become the framework of choice for many developers. It works on both Android and iOS, offers strong optimization tools, and has excellent support for hardware acceleration. The downside? As one developer put it, converting models can sometimes feel like trying to push an elephant through a keyhole.

In one recent benchmark, TensorFlow Lite ran an image classification model on a Samsung Galaxy S21 in just 23 milliseconds. That’s fast enough for real-time apps.

The Researcher’s Choice: PyTorch Mobile

PyTorch Mobile appeals to the many research labs and startups that already use PyTorch. It keeps PyTorch’s dynamic nature, which smooths the path from development to deployment. On one AR project that needed weekly model updates, for example, researchers could refine the model and have it running on test devices the same day.

The trade-off? It runs a little slower than TensorFlow Lite and uses more memory.

The New Kid on the Block: AI Edge Torch

AI Edge Torch, released in 2024, is a big deal for PyTorch users. It converts PyTorch models directly into TensorFlow Lite format, giving you the best of both worlds: PyTorch’s flexibility during development and TFLite’s speed at deployment.

ONNX Runtime: The Tool for Everything

ONNX Runtime is the cross-platform option, running models exported from many different frameworks. It sits in the middle: not as fast as TensorFlow Lite, but more flexible and easier to use across platforms.

4. Real-World Edge AI Uses (That You Use Every Day)

Let’s get specific. Where is Edge AI being used right now?

Your Smartphone Is a Powerful AI Tool

Your phone probably runs dozens of AI models all day long without you even knowing it:

Face unlock uses deep learning models to detect your face, extract distinctive features (like the distance between your eyes), and compare them to a stored template, all within milliseconds. Apple’s Face ID even includes technology that prevents someone from unlocking your phone with a photo.
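The final comparison step can be sketched as a similarity check between two embedding vectors. This assumes a separate, hypothetical model has already turned each face image into a fixed-length embedding; the vectors and the 0.85 threshold are illustrative values, and the sketch does not cover anti-spoofing (Face ID, for example, also relies on depth sensing).

```python
# Minimal sketch of on-device template matching for face unlock.
# Assumes a (hypothetical) model has already produced the embeddings;
# only the comparison against the stored template is shown.
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def should_unlock(live: Sequence[float], enrolled: Sequence[float],
                  threshold: float = 0.85) -> bool:
    """Unlock only if the live embedding is close enough to the stored template.
    The threshold is illustrative, not a value any vendor publishes."""
    return cosine_similarity(live, enrolled) >= threshold


enrolled_template = [0.12, 0.88, 0.45, 0.10]  # stored on the device at enrollment
print(should_unlock([0.10, 0.90, 0.43, 0.12], enrolled_template))  # True
print(should_unlock([0.90, 0.05, 0.10, 0.40], enrolled_template))  # False
```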

Edge AI also powers camera filters and enhancements. Those portrait-mode effects? That’s an AI model estimating depth in real time and blurring the background. Night mode? AI combines multiple exposures and reduces noise right on the device.

Voice assistants use edge AI to detect wake words. Your phone listens for phrases like “Hey Siri” or “OK Google” locally and only sends audio to the cloud after it hears one. This protects your privacy and saves battery life.
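The privacy benefit comes from a simple gate: nothing is forwarded until the local detector fires. The sketch below assumes a hypothetical `wake_score` function standing in for a small keyword-spotting model, and uses text labels instead of audio frames to keep it readable. A real assistant would also stop forwarding once the request ends.

```python
# Minimal sketch of wake-word gating: audio frames stay on the device until a
# local detector fires; only then are frames forwarded for cloud processing.
from typing import Callable, Iterable, List


def gate_audio(frames: Iterable[str],
               wake_score: Callable[[str], float],
               threshold: float = 0.9) -> List[str]:
    """Return only the frames that would be uploaded.

    `wake_score` stands in for a small on-device keyword-spotting model; the
    threshold is illustrative."""
    uploaded: List[str] = []
    awake = False
    for frame in frames:
        if awake:
            uploaded.append(frame)  # after the wake word: send the request audio
        elif wake_score(frame) >= threshold:
            awake = True  # wake word detected locally; nothing before it is sent
    return uploaded


def toy_detector(frame: str) -> float:
    """Toy stand-in: treats a frame labelled "hey-assistant" as the wake word."""
    return 1.0 if frame == "hey-assistant" else 0.0


stream = ["tv noise", "chatter", "hey-assistant", "what's", "the", "weather"]
print(gate_audio(stream, toy_detector))  # ["what's", 'the', 'weather']
```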

Smart Homes: Smartness at the Edge

Edge AI is making smart home devices even smarter:

Security cameras can now tell the difference between a person, a pet, and a tree branch blowing in the wind without sending footage to the cloud. In commercial settings, this can cut false alarms by as much as 40%.

Some advanced systems can even recognize faces on the spot by comparing live video against a database stored on the camera itself, alerting you immediately if an unauthorized person shows up.

Smart thermostats and lights use edge AI to learn your habits and change settings automatically based on data about who is home and what the temperature is.

Healthcare: Saving Lives in a Matter of Milliseconds

Edge AI in healthcare is literally saving lives:

Wearables like the Apple Watch use edge AI to detect irregular heart rhythms, such as atrial fibrillation (AFib), by analyzing heart rate patterns in real time. These devices can also detect a fall the moment it happens and alert caregivers or emergency contacts.
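To illustrate what analyzing heart rate patterns on the device can mean, here is a deliberately simplified sketch that flags a window of beat-to-beat (RR) intervals as irregular when they vary a lot relative to their average. This is a hypothetical rule with an illustrative 0.15 cutoff; it is not how the Apple Watch or any medical device works and must not be used for diagnosis.

```python
# Illustrative-only sketch: flag an irregular rhythm from the intervals between
# heartbeats (RR intervals, in milliseconds). Real AFib detection uses validated,
# clinically tested models; this threshold rule is NOT one of them.
import statistics
from typing import List


def looks_irregular(rr_ms: List[float], cv_threshold: float = 0.15) -> bool:
    """Flag a window whose beat-to-beat intervals vary a lot relative to their mean.
    The coefficient-of-variation cutoff is a hypothetical value."""
    cv = statistics.pstdev(rr_ms) / statistics.mean(rr_ms)
    return cv > cv_threshold


steady = [800, 810, 795, 805, 800, 790, 805, 800]
erratic = [620, 980, 710, 1150, 560, 890, 1020, 640]
print(looks_irregular(steady), looks_irregular(erratic))  # False True
```

Only the flag (not the raw beat stream) would need to leave the watch, which is the privacy and bandwidth point made earlier.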

Now, medical imaging machines can run diagnostic AI right on the scanner. A portable ultrasound with edge AI can quickly find problems, which is very important in emergencies or in rural clinics where the internet isn’t always reliable.

One study found that edge AI in healthcare cut diagnosis time in half, allowing faster patient monitoring and better outcomes.

The Smart Factory in Manufacturing

Factories are using edge AI for quality control and predictive maintenance:

Vision systems use edge AI cameras to inspect thousands of products per hour on assembly lines, catching defects in real time. One car factory’s defect detection system inspects parts without sending image data to servers, keeping proprietary manufacturing data safe.

Sensors on equipment monitor vibration, temperature, and sound patterns to catch problems before they cause breakdowns. One industrial vibration-monitoring device used edge AI to detect anomalies on the device itself, running inference 40% faster while losing only 2% in accuracy.
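A deliberately simple statistical stand-in for on-device anomaly detection is a rolling z-score: compare each new reading to the recent baseline and flag large deviations. This is not the model from the example above; the window size, the threshold of 4 standard deviations, and the synthetic signal are hypothetical.

```python
# Minimal sketch of on-device anomaly detection for a vibration sensor using a
# rolling z-score. Window size and threshold are hypothetical tuning values.
from collections import deque
import statistics
from typing import Iterable, List


def detect_anomalies(readings: Iterable[float], window: int = 20,
                     z_threshold: float = 4.0) -> List[int]:
    """Return indices of readings that deviate strongly from recent history."""
    history: deque = deque(maxlen=window)
    anomalies: List[int] = []
    for i, x in enumerate(readings):
        if len(history) == window:
            mean = statistics.mean(history)
            std = statistics.pstdev(history) or 1e-9  # avoid division by zero
            if abs(x - mean) / std > z_threshold:
                anomalies.append(i)  # only these events need to leave the device
                continue  # keep the spike out of the baseline
        history.append(x)
    return anomalies


# Steady vibration around 1.0 g with small repeating noise, plus one spike.
signal = [1.0 + 0.01 * ((i * 7) % 5 - 2) for i in range(50)]
signal[30] = 1.8
print(detect_anomalies(signal))  # [30]
```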

The result? Edge AI in manufacturing has cut unplanned downtime by as much as 40%.

Smart Cities: Cities with Smart Minds

Cities are using edge AI to manage traffic, improve public safety, and cut emissions:

Traffic management systems use edge AI-powered cameras to adjust traffic lights in real time based on how busy the roads are. One city saw 20% less congestion and 15% better air quality after deploying an edge AI traffic solution.
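The core idea of adaptive signal timing can be sketched in a few lines: split a cycle’s green time according to the queues a roadside camera counts. This is a hypothetical proportional rule with an assumed 90-second cycle and 10-second minimum green; real systems such as SURTRAC use considerably more sophisticated optimization.

```python
# Minimal sketch of adaptive signal timing: split a fixed cycle's green time
# among approaches in proportion to the vehicle queues a roadside camera counts.
# Cycle length and minimum green are hypothetical parameters.
from typing import Dict


def allocate_green(queues: Dict[str, int], cycle_s: float = 90.0,
                   min_green_s: float = 10.0) -> Dict[str, float]:
    """Give every approach a minimum green, then share the rest by queue length."""
    spare = cycle_s - min_green_s * len(queues)
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(queues.values())
    return {
        approach: min_green_s + (spare * q / total if total else spare / len(queues))
        for approach, q in queues.items()
    }


print(allocate_green({"north-south": 24, "east-west": 8}))
# {'north-south': 62.5, 'east-west': 27.5}
```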

The SURTRAC system in Pittsburgh showed 20–40% improvements in travel time, wait time, and speed, as well as a reduction in emissions of more than 20%.

Surveillance systems use edge AI to flag unusual activity, which speeds up emergency response. During Hajj 2025, Saudi Arabia used edge-enabled CCTV and AI-powered crowd management for millions of pilgrims, helping crowds move more smoothly and medical help reach people faster.

The Road Ahead for Cars

The automotive industry is one of the fastest-growing areas for edge AI:

Edge AI lets Advanced Driver Assistance Systems (ADAS) make split-second decisions. Self-driving cars process terabytes of sensor data on board, because sending it all to the cloud would cause unacceptable delays.

The market for automotive edge AI accelerators was worth $2.1 billion in 2024 and is growing at 22.9% per year. If that rate holds, it would reach roughly $16.5 billion by 2034.

Farming with precision

Edge AI is changing farming with precision agriculture:

Drones and ground sensors keep an eye on the health of crops, the moisture in the soil, and the activity of pests in real time. Edge AI lets you analyze data on-site to help you decide how much fertilizer and water to use without having to be connected all the time.

This boosts productivity, reduces waste, and lessens the environmental impact.

5. The Benefits: Why Edge AI is Better than the Cloud

What is it about Edge AI that makes it worth all this work? It turns out, a lot.

- Speed: Every Millisecond Counts. Edge AI dramatically reduces latency. Cloud computing typically has a latency of 500 to 1,000 milliseconds, while edge computing has a latency of 100 to 200 milliseconds, roughly five times faster. For self-driving cars or factory safety systems, those milliseconds can mean the difference between avoiding an accident and a disaster. Research shows that edge AI can cut latency by as much as 45% in real-time analytics apps.

- Privacy: Your Data Stays Private. When you process data on your own device, it never leaves. Your face unlock data, voice commands, and health metrics all stay on your device unless you choose to share them. This matters a great deal for complying with regulations like GDPR and HIPAA: healthcare facilities can process patient data on medical devices while still following privacy rules.

- Dependability: Works Without the Internet. Edge AI doesn’t need an internet connection. You can still unlock your phone with your face in airplane mode. Security cameras keep watching even if the WiFi goes down. Industrial sensors keep monitoring equipment health in places with no internet access at all. This resilience is essential for mission-critical apps.

- Saving Money: Bandwidth Isn’t Free. Edge AI cuts bandwidth use dramatically. Cameras send only the clips that matter to the cloud, not hours of security footage. IoT devices filter sensor data locally and send summaries instead of every reading. Companies report that moving AI to the edge has cut data transmissions by 70% to 80%, which lowers cloud costs right away. One oilfield services company cut data transmissions by 80%, reducing its satellite communication costs. In extreme cases, such as high-definition computer vision, edge processing can cut infrastructure costs by more than 90%.

- Greener AI: Energy Efficiency. Cloud AI depends on huge, energy-hungry data centers, which consumed more than 4% of US electricity in 2023. Answering just a few questions with a large language model can use as much energy as charging a smartphone. Edge AI uses less energy because it avoids transmitting data and runs on specialized low-power chips; accessing the cloud uses 30–40% more energy than edge computing. Research has also shown that edge AI can substantially extend the battery life of low-power IoT devices.
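To see why the latency gap in the speed point matters for vehicles, a quick worked calculation helps: how far does a car travel while waiting for an answer? This sketch assumes a hypothetical speed of 100 km/h and uses the midpoints of the quoted ranges (about 750 ms for cloud, 150 ms for edge).

```python
# Worked arithmetic: how far a vehicle travels while waiting for an AI decision,
# using the midpoints of the latency ranges quoted above. Speed is hypothetical.
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled at `speed_kmh` during `latency_ms` of processing delay."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * latency_ms / 1000


for label, latency in [("cloud (~750 ms)", 750), ("edge (~150 ms)", 150)]:
    print(label, round(distance_during_latency(100, latency), 1), "m")
# cloud (~750 ms) 20.8 m
# edge (~150 ms) 4.2 m
```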

6. The Problems: It’s Not All Sunshine

Edge AI isn’t perfect, of course. There are real problems that developers and businesses have to deal with.

Hardware Limitations: Edge devices have far less processing power, memory, and storage than cloud servers. A microcontroller with 256 KB of RAM can’t run the same models as cloud infrastructure, which forces hard trade-offs between model complexity and accuracy. Developers have to optimize models carefully to get the best performance out of the hardware.
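A back-of-the-envelope check shows how quickly a 256 KB budget runs out. This sketch assumes a hypothetical model with 100,000 parameters and 40 KB of activation memory, and conservatively assumes weights live in RAM; many microcontroller deployments keep weights in flash, and real runtimes add overhead for buffers and alignment.

```python
# Back-of-the-envelope check of whether a model fits a microcontroller's RAM.
# Parameter count and activation size are hypothetical; real runtimes add
# overhead for the interpreter, buffers, and alignment.
def fits_in_ram(n_params: int, bytes_per_param: int,
                activation_bytes: int, ram_bytes: int = 256 * 1024) -> bool:
    needed = n_params * bytes_per_param + activation_bytes
    return needed <= ram_bytes


params, activations = 100_000, 40_000
print(fits_in_ram(params, 4, activations))  # float32: 440,000 bytes -> False
print(fits_in_ram(params, 1, activations))  # int8:    140,000 bytes -> True
```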

Model Size vs. Accuracy: Striking the right balance between models small enough to run on edge devices and accurate enough to be useful is a constant challenge. Prune or quantize too aggressively, and the model degrades to the point where it’s no longer reliable. Finding the sweet spot takes skill and repeated testing.

Power Consumption: Running AI models is computationally intensive and can drain batteries quickly. Designing low-power hardware and efficient models without sacrificing performance is an ongoing challenge. Dynamic Voltage and Frequency Scaling (DVFS) and adaptive power management help, but battery life remains a problem for battery-powered edge devices.
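The intuition behind DVFS can be sketched with the textbook dynamic-power model (power proportional to C·V²·f): running slower at a lower voltage uses less energy per inference, as long as the deadline is still met. The operating points, cycle count, and capacitance below are hypothetical, and the model ignores static (leakage) power.

```python
# Minimal sketch of a DVFS-style policy: pick the lowest CPU operating point that
# still finishes an inference before its deadline. Operating points and cycle
# count are hypothetical; dynamic power is modelled as C * V^2 * f.
from typing import List, Optional, Tuple

# (frequency in Hz, core voltage in V), lowest first: illustrative values only.
OPERATING_POINTS: List[Tuple[float, float]] = [
    (200e6, 0.70), (400e6, 0.80), (800e6, 0.95), (1.2e9, 1.10),
]


def choose_operating_point(cycles: float, deadline_s: float,
                           capacitance: float = 1e-9) -> Optional[Tuple[float, float, float]]:
    """Return (freq, voltage, energy_joules) for the slowest point meeting the deadline."""
    for freq, volt in OPERATING_POINTS:
        runtime = cycles / freq
        if runtime <= deadline_s:
            power = capacitance * volt ** 2 * freq  # dynamic power only
            return freq, volt, power * runtime
    return None  # no operating point is fast enough


print(choose_operating_point(cycles=50e6, deadline_s=0.1))  # picks 800 MHz
```

Because energy per inference works out to C·V²·cycles, the savings come mainly from the lower voltage that a lower frequency allows.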

Updates and Maintenance: Edge AI systems are often spread across thousands or even millions of devices, which makes updating models at that scale a logistical challenge. How do you push a new model to a million security cameras without taking them offline? It takes strong MLOps practices and deployment tooling: businesses need systems for version control, over-the-air updates, and rollbacks.
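One common pattern for this is a staged (canary) rollout: update a small slice of the fleet first, check health, then widen. The sketch below assumes hypothetical stage sizes, a 2% failure threshold, and a `deploy_and_check` callback standing in for a real fleet-management service.

```python
# Minimal sketch of a staged over-the-air (OTA) model rollout with automatic
# rollback. Stage sizes, the failure threshold, and `deploy_and_check` are
# hypothetical stand-ins for a real fleet-management service.
from typing import Callable, List, Sequence

STAGES = [0.01, 0.10, 0.50, 1.00]  # fraction of the fleet updated by each stage


def staged_rollout(devices: Sequence[str],
                   deploy_and_check: Callable[[str], bool],
                   max_failure_rate: float = 0.02) -> str:
    """Update devices in growing waves; roll back everything if a wave misbehaves."""
    updated: List[str] = []
    for fraction in STAGES:
        target = int(len(devices) * fraction)
        wave = devices[len(updated):target]
        failures = sum(1 for d in wave if not deploy_and_check(d))
        updated.extend(wave)
        if wave and failures / len(wave) > max_failure_rate:
            # A real system would reinstall the previous model version on each device.
            return f"rolled back {len(updated)} devices at {fraction:.0%} stage"
    return f"rolled out to all {len(updated)} devices"


fleet = [f"cam-{i}" for i in range(1000)]
print(staged_rollout(fleet, lambda d: True))  # rolled out to all 1000 devices
print(staged_rollout(fleet, lambda d: not d.endswith("7")))  # rolled back 10 devices at 1% stage
```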

Security Concerns: Edge devices are far more physically accessible than cloud servers in secure data centers, so they can be tampered with and attacked on-site. To protect against these threats, modern edge AI systems use secure enclaves, tamper-detection mechanisms, and secure boot processes. Even so, security remains a major concern as the number of deployed devices grows.
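A small piece of this defense, verifying a model file before loading it, can be sketched with Python’s standard library. Note the substitution: real secure-boot chains use asymmetric signatures rooted in hardware, while this sketch uses HMAC-SHA256 with a shared device key purely for illustration. The key and model bytes are hypothetical.

```python
# Minimal sketch of verifying a model file before loading it, so a tampered
# file is rejected. HMAC-SHA256 stands in for the asymmetric signatures that
# real secure-boot chains use. The key and file contents are hypothetical.
import hashlib
import hmac

DEVICE_KEY = b"hypothetical-key-provisioned-in-secure-storage"


def sign_model(model_bytes: bytes, key: bytes = DEVICE_KEY) -> str:
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, expected_tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Constant-time comparison avoids leaking how many characters matched."""
    return hmac.compare_digest(sign_model(model_bytes, key), expected_tag)


model = b"\x00\x01fake-model-weights"
tag = sign_model(model)  # produced by the vendor's build pipeline
print(verify_model(model, tag))            # True
print(verify_model(model + b"\xff", tag))  # False: file was modified
```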

7. Case Study: Successful Traffic Management in a Smart City

Let’s look at a real-world example of how Edge AI is used and what it does.

A large city was struggling with poor air quality, heavy traffic, and long commutes. Its traffic lights ran on fixed timers that couldn’t adapt to real-time conditions.

The Answer

The city deployed a real-time traffic analysis system built on edge AI. Instead of sending everything to a central server, it analyzed traffic images and sensor data right at the intersections, running advanced machine learning techniques (computer vision, time-series analysis, and predictive modeling) directly on edge devices at the traffic lights.

The Outcomes

The impact was significant and measurable:

20% less traffic congestion in the areas being watched

15% better air quality because there is less idling and traffic flows more smoothly

Real-time responsiveness that wasn’t possible with processing in the cloud

Less money spent on infrastructure because raw video footage didn’t have to be sent

The solution worked well and is now being considered for other cities around the world. Edge AI’s ability to process data locally was key to these results: had the data needed to travel through the cloud, real-time traffic optimization would not have been possible.

This case study shows how edge AI can help in real ways, such as by improving results, saving money, and helping the environment.

8. The Future: What’s Next for Edge AI

Edge AI is evolving quickly. Here’s what’s coming next.

Neuromorphic Computing: Chips That Work Like Brains

Neuromorphic chips mimic the human brain, processing information as discrete events (spikes) instead of continuously. These chips promise big gains in speed for real-time pattern recognition and decision-making. Companies like BrainChip are building neuromorphic edge processors with unprecedented power efficiency. Gartner has predicted that traditional computing will hit a “digital wall” in 2025, forcing a shift to neuromorphic and other new architectures.

The connection between 5G and Edge AI

Low-latency 5G networks make new edge AI architectures possible. With multi-access edge computing (MEC), cell towers gain cloud-like resources with edge-like latency. This combination lets smart city apps coordinate thousands of sensors in real time and lets industrial IoT deployments scale without being held back by infrastructure. Looking further ahead, 6G is expected to build AI directly into the network architecture.

TinyML: AI on the Smallest Gadgets

TinyML brings machine learning to microcontrollers and other ultra-low-power devices, many of which run for years on a single coin-cell battery. Key applications include wearable health devices, smart agriculture sensors, and environmental monitoring. TinyML is accelerating the adoption of secure, scalable, and responsive edge AI solutions across industries.
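The “years on a coin cell” claim follows from duty cycling: the device sleeps almost all the time and wakes briefly to run inference. This worked calculation uses hypothetical figures (a roughly 225 mAh coin cell, 5 mA for 10 ms per inference, 2 µA asleep, one inference every 10 seconds) and ignores self-discharge and sensor power.

```python
# Back-of-the-envelope battery life for a duty-cycled TinyML sensor.
# All figures are hypothetical: ~225 mAh coin cell, 5 mA while running
# inference for 10 ms, 2 uA (0.002 mA) asleep, one inference every 10 seconds.
def battery_life_years(capacity_mah: float, active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    duty = active_s / period_s
    avg_ma = active_ma * duty + sleep_ma * (1 - duty)  # time-weighted average current
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)


print(round(battery_life_years(225, 5.0, 0.010, 0.002, 10.0), 1))  # 3.7
```

Running inference once per second instead of once every ten cuts the estimate to roughly six months, which is why wake-up frequency is one of the first knobs TinyML designers tune.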

Systems that combine edge and cloud

The future isn’t edge or cloud; it’s a mix of the two. Simple, routine tasks run on the edge for speed and privacy, while complex or occasional tasks tap the cloud’s computing power. For instance, a voice assistant processes wake words on the device but sends complicated natural language questions to the cloud. A medical device performs initial screening on site and sends data to a central location for in-depth analysis when necessary. Studies show that hybrid systems can cut latency by as much as 84% without losing accuracy.
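One simple hybrid policy is confidence-based routing: answer on the device when the small local model is confident, and escalate to the cloud otherwise. Both model functions and the 0.8 threshold below are hypothetical placeholders; production systems may also route on input type, connectivity, or cost.

```python
# Minimal sketch of confidence-based edge/cloud routing: answer locally when the
# small on-device model is confident, otherwise escalate. Both model functions
# and the 0.8 threshold are hypothetical placeholders.
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence)


def hybrid_infer(x: str,
                 edge_model: Callable[[str], Prediction],
                 cloud_model: Callable[[str], Prediction],
                 threshold: float = 0.8) -> Tuple[str, str]:
    label, confidence = edge_model(x)
    if confidence >= threshold:
        return label, "edge"  # fast path: data never leaves the device
    cloud_label, _ = cloud_model(x)  # slow path: needs connectivity
    return cloud_label, "cloud"


def toy_edge(x: str) -> Prediction:
    return ("timer", 0.95) if "timer" in x else ("unknown", 0.3)


def toy_cloud(x: str) -> Prediction:
    return ("complex-query", 0.9)


print(hybrid_infer("set a timer for 5 minutes", toy_edge, toy_cloud))   # ('timer', 'edge')
print(hybrid_infer("plan a 3-day trip to Kyoto", toy_edge, toy_cloud))  # ('complex-query', 'cloud')
```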

Growth of the market

The numbers speak for themselves. The global edge AI market was worth $21.19 billion in 2024 and is expected to reach $143.06 billion by 2034, which implies growth of about 21% per year. Edge AI software is expected to grow from $2.40 billion in 2025 to $8.89 billion by 2031, and the automotive edge AI market is growing at 22.9% per year. Meanwhile, 91% of companies see local data processing as a competitive advantage, yet 74% struggle to scale AI effectively. Companies that get edge AI right have a huge opportunity to fill that gap.
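Market figures like these are compound growth rates, so they are easy to sanity-check. This sketch (function names are illustrative) recomputes the annual rate implied by each start and end value quoted in this article and projects the automotive accelerator figure forward, assuming steady compounding from 2024.

```python
# Worked arithmetic: compound annual growth rate (CAGR) implied by a start and
# end value, and the value reached by compounding a rate forward.
def cagr(start: float, end: float, years: float) -> float:
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: float) -> float:
    return start * (1 + rate) ** years


print(f"{cagr(21.19, 143.06, 10):.1%}")  # 21.0%: edge AI market, 2024 -> 2034
print(f"{cagr(2.40, 8.89, 6):.1%}")      # 24.4%: edge AI software, 2025 -> 2031
print(f"{project(2.1, 0.229, 10):.1f}")  # 16.5: automotive accelerators, $bn in 2034
```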

9. How to Get Started with Edge AI: Useful Tips

Want to learn how to use Edge AI? This is how to start.

Pick the Right Framework: Start with the skills your team already has. If you use TensorFlow, TensorFlow Lite is the natural choice. PyTorch users should look at PyTorch Mobile or AI Edge Torch. For cross-platform flexibility, consider ONNX Runtime.

Optimize Your Models: Use quantization, pruning, and knowledge distillation to shrink your model. Start with post-training quantization, since it’s the easiest. If accuracy drops too much, try quantization-aware training. A good rule of thumb for pruning is to start at 30% and adjust gradually.
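The “start at 30% and adjust gradually” advice can be expressed as a small search loop: raise sparsity step by step and keep the last level whose accuracy drop stays within a tolerance. This is a sketch under assumptions: `evaluate_at` is a hypothetical callback that would prune (and ideally fine-tune) the model and return validation accuracy, and the toy accuracy curve is made up for illustration.

```python
# Minimal sketch of an incremental pruning search: raise sparsity in small steps
# and keep the last level whose accuracy drop stays within a tolerance.
# `evaluate_at` is a hypothetical callback that would prune (and ideally
# fine-tune) the model, then measure validation accuracy.
from typing import Callable


def find_sparsity(evaluate_at: Callable[[float], float], baseline_acc: float,
                  start: float = 0.30, step: float = 0.10, max_sparsity: float = 0.90,
                  max_drop: float = 0.01) -> float:
    best = 0.0  # 0.0 means even the starting level was too aggressive
    sparsity = start
    while sparsity <= max_sparsity + 1e-9:
        if baseline_acc - evaluate_at(sparsity) > max_drop:
            break  # accuracy fell too far; keep the previous level
        best = sparsity
        sparsity = round(sparsity + step, 2)
    return best


def toy_curve(s: float) -> float:
    """Toy accuracy curve: flat until 60% sparsity, then degrading quickly."""
    return 0.92 if s <= 0.6 else 0.92 - (s - 0.6) * 0.5


print(find_sparsity(toy_curve, baseline_acc=0.92))  # 0.6
```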

Choose the Right Hardware: Make sure your hardware fits your needs:

Microcontrollers (STM32, NXP) for simple tasks like recognizing gestures

Mobile processors (Snapdragon, Apple Silicon) for phones and tablets

Edge AI accelerators (Jetson Nano, Edge TPU, Intel Movidius) for computer vision and complex inference

FPGAs and ASICs for custom, high-efficiency installations

Test in Real Life: Don’t rely on simulation alone. Run your models on the actual target hardware with real-world data. Test edge cases such as changing lighting, noisy sensor data, and sensor readings at the extremes of their range. Profile performance metrics like latency, throughput, memory usage, and power consumption.
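A minimal latency profiler illustrates the profiling step: warm up, time many runs, and report percentiles, since tail latency matters more than the average for real-time apps. The `run_inference` callable is a hypothetical stand-in for your runtime’s invoke call; on a real target you would run this on the device itself, alongside memory and power measurements from platform tools.

```python
# Minimal sketch of on-target latency profiling: time many runs of an inference
# call and report percentiles. `run_inference` is a hypothetical stand-in for
# your runtime's invoke call.
import statistics
import time
from typing import Callable, Dict


def profile_latency(run_inference: Callable[[], object], warmup: int = 5,
                    runs: int = 100) -> Dict[str, float]:
    for _ in range(warmup):
        run_inference()  # warm caches and lazy initialisation before measuring
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples_ms.append((time.perf_counter() - start) * 1000)
    samples_ms.sort()
    return {
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[int(0.95 * (len(samples_ms) - 1))],
        "max_ms": samples_ms[-1],
    }


print(profile_latency(lambda: sum(i * i for i in range(10_000))))
```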

Set up MLOps for the Edge: Build repeatable pipelines for data preprocessing, training, compression, and deployment. Tools such as Amazon SageMaker Neo, the TensorFlow Lite converter, or Edge Impulse can help. Plan your deployment strategy ahead of time: fully on-device, cloud offloading, or a hybrid of the two.

Start Small: Begin with a simple proof of concept. Choose a well-defined use case with clear success metrics, and learn from that first project before taking on more. Microsoft’s EdgeAI for Beginners course covers everything from the basics to deploying a production-ready application in 36 to 45 hours.

10. The Bottom Line: Return on Investment and Business Impact

Let’s talk about money. Does Edge AI really help businesses?

The answer is a resounding yes. Research shows that AI can cut costs by up to 40% in many industries by automating tasks and improving efficiency. For edge AI specifically, the estimated gains look like this:

Estimated Cost Reduction and Revenue Gains

| Category | Reduction/Gain |
| --- | --- |
| Running costs | 20% less |
| Labor costs | 30% less |
| Revenue | 5–10% more |
| Cloud data costs | 70–80% less |

Manufacturing shows some of the clearest returns on investment: edge AI-powered predictive maintenance cuts unplanned downtime by up to 40%, saving millions in lost productivity. Retailers can manage inventory better and get real-time analytics without paying heavily for cloud services. Healthcare gets faster diagnoses and better patient monitoring while staying compliant with privacy rules.

Many businesses report that edge AI solutions pay for themselves by lowering operating costs (through reduced bandwidth use, more efficient labor, and longer asset life) and by preventing incidents. The competitive advantages of faster decision-making, better privacy, and operational resilience add further value that is hard to measure.

Conclusion: The Edge AI Revolution Has Begun

Edge AI isn’t coming; it’s already here. It’s unlocking your phone, monitoring your heart, inspecting products in factories, managing traffic in cities, and keeping your home safe. It’s making our gadgets smarter, faster, safer, and more private.

The technology has matured beyond the experimental stage. The frameworks are in place, the hardware is ready, and the use cases are proven. The global market is growing by more than 20% a year because companies are seeing real results.

But we’re still in the early innings. Neuromorphic computing promises even greater efficiency. 5G, and eventually 6G, will enable edge AI apps we can’t yet imagine. And TinyML will bring intelligence to billions of battery-powered sensors.

In the next ten years, Edge AI will change many industries. Companies and developers who learn how to use it now will have a big edge.

Edge AI is worth learning about whether you’re a developer optimizing your first model, a business leader evaluating edge solutions, or just someone curious about the technology in your pocket. It’s not just the future of computing; it’s the present, and it’s only getting stronger.

FAQs

1. What is the main difference between Edge AI and Cloud AI?

Edge AI processes data on the device itself (like your phone or a security camera), while Cloud AI sends data to remote servers for processing. Edge AI is faster (100–200 ms vs. 500–1000 ms latency), more private (data never leaves your device), and works without an internet connection. Cloud AI has more processing power and is easier to update, but it requires a constant internet connection and raises privacy concerns.

2. Do I need special hardware to use Edge AI models?

It depends on how you plan to use it. Neural Processing Units (NPUs) are already built into modern smartphones to run edge AI quickly. If you want to make your own applications, you could use Google’s Edge TPU, NVIDIA Jetson devices, or Qualcomm’s AI Engine as dedicated edge AI accelerators. Microcontrollers can handle simple tasks, but more powerful processors are needed for more complex computer vision tasks.

3. How do you make an AI model smaller for edge deployment without losing accuracy?

There are three main methods: quantization (converting 32-bit floats to 8-bit integers, which makes the model 4x smaller), pruning (removing unimportant neural network connections, often 30–50% of the model), and knowledge distillation (training a smaller “student” model to mimic a larger “teacher”). Used carefully, these methods can shrink a model dramatically with only a small loss in accuracy, usually just 1% to 3%.

4. Is Edge AI safe? What about keeping data safe?

Edge AI is often safer than cloud AI because data stays on your device instead of traveling over networks where it could be intercepted. However, edge devices are physically accessible, so attackers can tamper with them. To defend against attacks, modern edge AI systems use secure enclaves, encryption, tamper detection, and secure boot processes. Because data never leaves the local environment, edge processing is often the best choice for privacy-sensitive apps in fields like healthcare and finance.

5. Which industries get the most out of Edge AI, and why?

Healthcare gains real-time diagnostics and patient monitoring while staying HIPAA-compliant. Manufacturers use edge AI for quality control and predictive maintenance, cutting downtime by up to 40%. ADAS and self-driving cars need edge processing to make split-second decisions. Smart cities use it to keep traffic moving (20% less congestion) and to keep people safe. Retailers use edge AI to track inventory and learn more about their customers. In short, any field that needs real-time processing, data privacy, or offline operation benefits greatly.