Implementing Responsible AI from Day One: A Comprehensive Framework for Building Trustworthy AI Systems

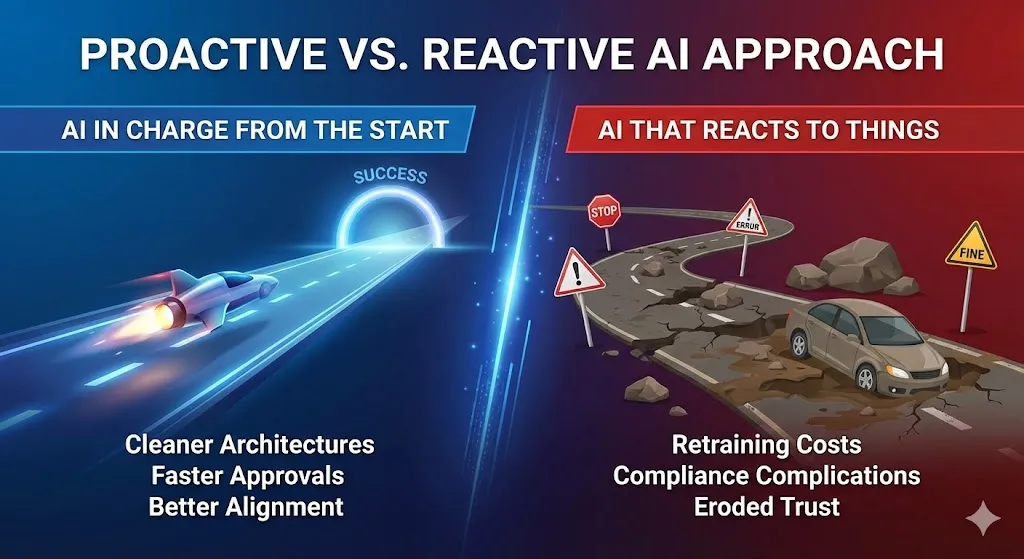

Many companies want to deploy AI solutions quickly, but they go about it in a way that creates risk: they build AI systems first and decide on oversight and controls later. Experience shows this approach is costly. It wastes money, erodes customer trust, and creates legal and regulatory exposure. The alternative is to implement AI responsibly from the beginning. This is the right thing to do, and it is also a competitive advantage that speeds up deployment, reduces risk, and creates real business value.

This shift marks a major step forward in how we build AI. Smart organizations understand that responsibility does not stop them from moving forward; they see it as the foundation for AI that lasts and scales. Building responsible AI from the ground up brings cleaner architectures, faster approvals, better stakeholder alignment, and solutions that perform better in the real world.

What Is Responsible AI? More Than Compliance

Responsible AI means designing, deploying, and operating AI systems in ways that conform to laws, ethical principles, and social norms, while minimizing the risk of harm or accidents. It is not the same as compliance, although compliance is one of its outcomes.

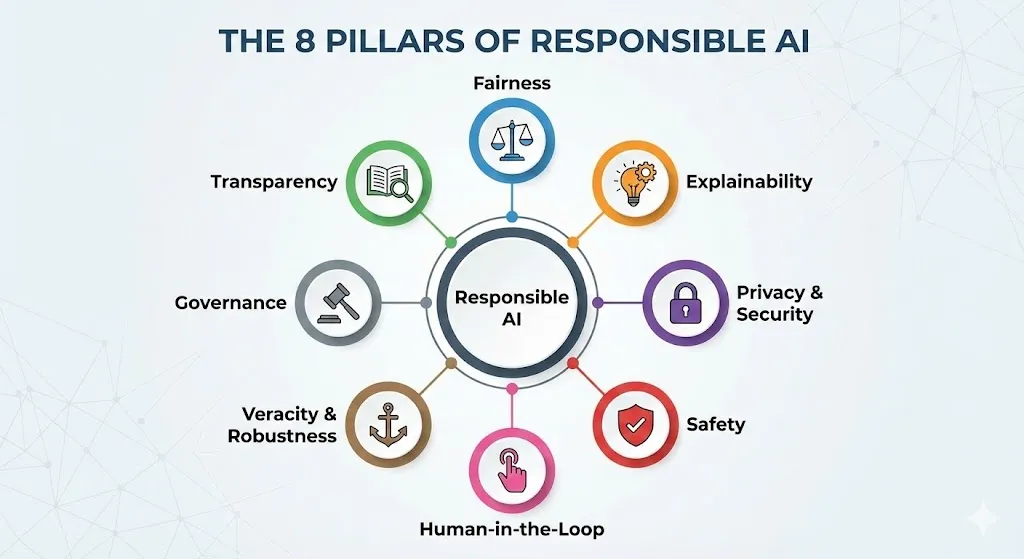

Responsible AI rests on eight core dimensions that work together to produce systems people can trust:

1. Fairness

Fairness ensures that AI decisions do not disproportionately disadvantage one group relative to another. This involves checking that the training data represents people from a healthy mix of backgrounds, applying statistical fairness criteria such as demographic parity and equal opportunity, and regularly evaluating models across demographic groups to detect discrimination.
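Both fairness criteria can be computed directly from a model's predictions. The sketch below assumes binary predictions and labels and a single sensitive attribute; the function names and toy data are illustrative and not taken from any particular library (maintained implementations exist in libraries such as Fairlearn). Demographic parity compares the share of positive predictions across groups; equal opportunity compares true positive rates, that is, how often truly positive cases are recognized in each group.

```python
def selection_rate(preds, groups, group):
    """Share of positive (1) predictions among members of `group`."""
    members = [p for p, g in zip(preds, groups) if g == group]
    return sum(members) / len(members)


def true_positive_rate(preds, labels, groups, group):
    """Share of truly positive members of `group` that the model predicted positive."""
    hits = [p for p, y, g in zip(preds, labels, groups) if g == group and y == 1]
    return sum(hits) / len(hits)


def demographic_parity_difference(preds, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [selection_rate(preds, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def equal_opportunity_difference(preds, labels, groups):
    """Largest gap in true positive rate between any two groups (0 means equal opportunity)."""
    tprs = [true_positive_rate(preds, labels, groups, g) for g in set(groups)]
    return max(tprs) - min(tprs)


# Hypothetical toy data: 8 applicants in two groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
labels = [1, 0, 1, 0, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(demographic_parity_difference(preds, groups))         # 0.5
print(equal_opportunity_difference(preds, labels, groups))  # about 0.667
```

Here group A is selected 75% of the time versus 25% for group B, and qualified members of group B are recognized only a third of the time, so both metrics flag a gap. Deciding which gap is acceptable is a policy choice, not something the metric settles.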

2. Explainability

Explainability means understanding how an AI system arrives at its decisions. Techniques such as Shapley values and LIME (Local Interpretable Model-agnostic Explanations) show people which inputs drove a given prediction. This gives teams the opportunity to review, correct, and improve models before they are deployed.
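Shapley values attribute a prediction to its input features by averaging each feature's marginal contribution over every possible coalition of the other features. The brute-force sketch below makes that definition concrete for a hypothetical linear scoring model. Replacing "absent" features with a baseline value is an assumption of this sketch (one common convention among several), and the cost grows exponentially with the number of features, which is why libraries such as SHAP rely on approximations and model-specific shortcuts.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for a single prediction.

    Features outside a coalition take their `baseline` value
    (an assumed convention; other choices exist).
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical linear scoring model with three features.
def score(z):
    return 2 * z[0] + 3 * z[1] - z[2] + 1


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(score, x, baseline)
print(phi)  # approximately [2.0, 6.0, -3.0]
```

For a linear model each attribution reduces to the feature's weight times its distance from the baseline, and the attributions always sum to the difference between the prediction and the baseline prediction, which is a useful sanity check on any explanation tool.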

3. Privacy and Security

Privacy and security ensure that AI models and data are stored, used, and managed appropriately. This goes beyond writing code to protect data: it also means defining who can access data and models, storing models securely, and defending against attacks that could compromise the system's integrity.
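Access rules are easiest to audit when they are explicit. This minimal sketch shows role-based access control with an audit trail using only the Python standard library. The roles, actions, and user names are hypothetical, and a real deployment would rely on the organization's identity and access management system rather than an in-memory dictionary.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission policy; in practice this lives in your IAM system.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_training_data", "train_model"},
    "ml_engineer": {"read_model", "deploy_model"},
    "auditor": {"read_audit_log"},
}

audit_log = []  # every access decision is recorded, whether allowed or denied


def authorize(user: str, role: str, action: str) -> bool:
    """Allow `action` only if `role` grants it, and log the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


can_train = authorize("dana", "data_scientist", "train_model")    # True
can_deploy = authorize("dana", "data_scientist", "deploy_model")  # False: not granted to this role
```

Logging denied requests as well as allowed ones is what makes the trail useful for later security reviews.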

4. Safety

Safety protects people, communities, and the environment from unintended harm. It requires strong safeguards, thorough testing, incident-response procedures, and mechanisms that keep humans in charge of critical decisions.

5. Human-in-the-Loop

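AI systems can make decisions that diverge sharply from what people intend. Human-in-the-loop design lets people review, approve, or override those decisions, especially high-stakes ones. This keeps people in charge and prevents AI from acting in ways humans cannot understand or control.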
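A common way to put human-in-the-loop into practice is an escalation rule: the system acts on its own only when it is confident and the stakes are low, and otherwise hands the case to a person. The sketch below assumes the model exposes a confidence score; the threshold, the topic list, and the `ModelDecision` type are hypothetical policy choices, not recommended values.

```python
from dataclasses import dataclass

# Hypothetical policy values; set them according to your own risk appetite.
CONFIDENCE_THRESHOLD = 0.80
SENSITIVE_TOPICS = {"medical", "legal", "billing_dispute", "account_closure"}


@dataclass
class ModelDecision:
    answer: str
    confidence: float  # model's own confidence score in [0, 1]
    topic: str


def route(decision: ModelDecision) -> str:
    """Return 'human' if a person must review the decision, else 'auto'."""
    if decision.topic in SENSITIVE_TOPICS:
        return "human"  # high-stakes topics always get human review
    if decision.confidence < CONFIDENCE_THRESHOLD:
        return "human"  # the model is unsure, so escalate
    return "auto"


print(route(ModelDecision("Your parcel ships tomorrow.", 0.95, "shipping")))   # auto
print(route(ModelDecision("You may be entitled to a refund.", 0.97, "legal")))  # human
print(route(ModelDecision("Try restarting the app.", 0.55, "tech_support")))   # human
```

Checking the topic before the confidence score means a sensitive case is escalated even when the model is very sure of itself.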

6. Veracity and Robustness

Veracity and robustness describe how accurate, reliable, and resilient a system is. This involves validating that the model is correct, handling edge cases, watching for model drift in production, and monitoring performance to confirm that it stays in line with what was planned.
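One widely used drift check is the Population Stability Index (PSI), which compares how a feature is distributed in production against its distribution in the training data. The sketch below is a plain-Python illustration of that technique, not the API of any monitoring product; the binning scheme and the 0.2 alert level are assumptions (0.2 is a common rule of thumb, not a standard).

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a live sample.

    Bin edges come from the reference (`expected`) sample's range; live values
    outside that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


PSI_ALERT = 0.2  # rule-of-thumb threshold; tune per feature


def drift_detected(train_values, live_values):
    return psi(train_values, live_values) > PSI_ALERT


train = [i / 100 for i in range(100)]                # roughly uniform on [0, 1)
live_same = list(train)
live_shifted = [0.9 + i / 1000 for i in range(100)]  # all values land in the top bin

print(drift_detected(train, live_same))     # False
print(drift_detected(train, live_shifted))  # True
```

In practice a check like this runs on a schedule per feature, and a positive result raises an alert or opens a retraining ticket rather than silently retraining.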

7. Governance

Governance ensures that AI is developed and used legally and ethically by establishing policies, standards, and oversight. It covers record-keeping, decision rights, and how problems are escalated and resolved.
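Governance becomes checkable when responsibilities are written down. As a minimal sketch, assuming a simple registry that maps each model to owners for a set of hypothetical required roles, the check below lists every model that lacks an accountable owner. The model IDs, team names, and role names are illustrative.

```python
# Hypothetical roles every production model must have an owner for.
REQUIRED_ROLES = ("model_owner", "fairness_reviewer", "security_owner")

registry = {
    "loan-scoring-v3": {
        "model_owner": "credit-risk-team",
        "fairness_reviewer": "responsible-ai-office",
        "security_owner": "appsec-team",
    },
    "support-bot-v1": {
        "model_owner": "customer-care-team",
        "fairness_reviewer": "",  # role listed but nobody assigned
    },
}


def missing_owners(registry):
    """Map each model ID to the required roles with no assigned owner."""
    gaps = {}
    for model_id, owners in registry.items():
        missing = [role for role in REQUIRED_ROLES if not owners.get(role)]
        if missing:
            gaps[model_id] = missing
    return gaps


print(missing_owners(registry))
# {'support-bot-v1': ['fairness_reviewer', 'security_owner']}
```

Running a check like this in a deployment pipeline turns an ownership policy into a gate rather than a document that can drift out of date.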

8. Transparency

AI systems should be open about how they were built, where their data came from, how they work, and what they can and cannot do. This openness lets stakeholders make informed decisions about whether and how to use them.
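A lightweight way to practice transparency is to keep structured documentation, often called a model card, alongside each model. The dataclass below is a hypothetical minimal schema covering the points listed here (how the system was built, where its data came from, and what it can and cannot do); the field names and example values are assumptions, and real templates usually add more, such as evaluation results.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    name: str
    intended_use: str
    training_data: str
    how_it_works: str
    limitations: list[str] = field(default_factory=list)

    def to_text(self) -> str:
        """Render the card as plain text for publishing alongside the model."""
        lines = [
            f"Model card: {self.name}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            f"How it works: {self.how_it_works}",
            "Known limitations:",
        ]
        lines += [f"- {item}" for item in self.limitations]
        return "\n".join(lines)


# Hypothetical example values.
card = ModelCard(
    name="loan-scoring-v3",
    intended_use="Rank consumer loan applications for human review; not for automatic rejection.",
    training_data="Anonymized applications from 2019-2023.",
    how_it_works="Gradient-boosted trees over 40 financial features.",
    limitations=["Not validated for small-business loans", "Less accurate for thin credit files"],
)
print(card.to_text())
```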

Key Takeaway: The responsible AI framework rests on eight core dimensions.

The Business Case for Responsibility: Why It Matters Now

Speed matters. Getting products to market quickly is critical, and every day of delay can cost revenue and hand competitors an advantage. Yet companies that apply responsible AI practices from the beginning report that they actually move faster, not slower: they make better decisions and build production-ready models that scale more easily.

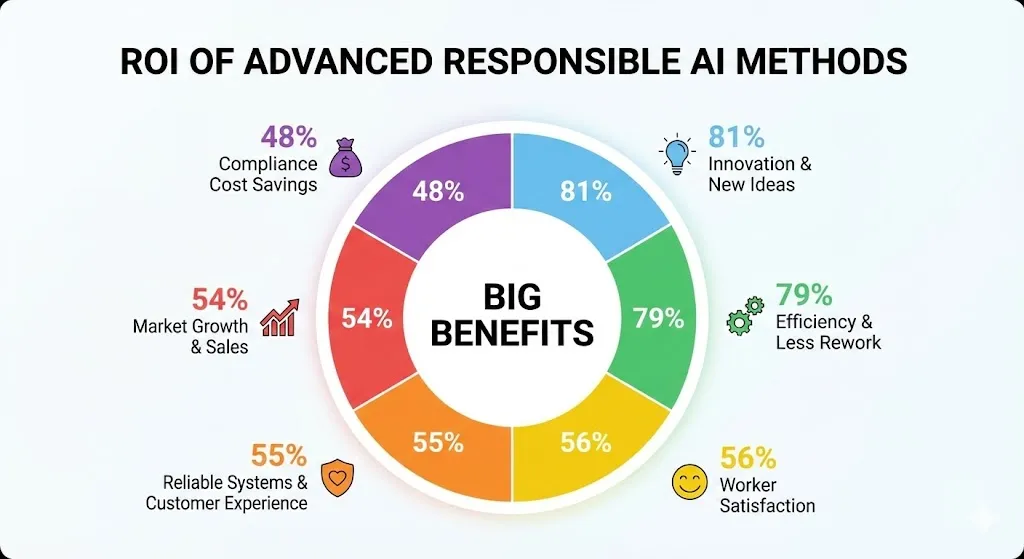

ROI and Performance Statistics

The numbers tell a compelling story. Companies that adopt mature responsible AI practices report significant benefits.

The return on investment is easy to trace:

Avoiding legal trouble avoids penalties. Under the EU AI Act, fines for the most serious violations can reach €35 million or 7% of a company's worldwide annual turnover, whichever is higher. Responsible practices also help you avoid model recalls, litigation, and operational disruption.

Audit-ready systems are easier to adjust quickly. This speeds up delivery and reduces rework, failures, and technical debt.

Time-to-Market Acceleration comes from fewer approval loops, faster stakeholder alignment, and systems that are governance-ready from the start.

Brand and Trust Capital keeps your business resilient and credible even when an AI system fails, helping you retain customers and market position.

Innovation Leverage gives departments a secure, compliant framework for responsible experimentation, which helps them collaborate and gives boards confidence in AI projects.
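The EU AI Act fine ceiling mentioned above is the larger of two amounts. The sketch below computes it for a given worldwide annual turnover. It is an arithmetic illustration, not legal advice: it covers only the top penalty tier and ignores the lower caps for other types of infringement and the separate treatment of SMEs. The function name is hypothetical.

```python
def max_eu_ai_act_fine(worldwide_turnover_eur: int) -> int:
    """Upper limit of the most severe EU AI Act fine tier, in euros.

    The ceiling is EUR 35 million or 7% of worldwide annual turnover,
    whichever is higher. Integer arithmetic keeps the result exact.
    """
    percentage_cap = worldwide_turnover_eur * 7 // 100
    return max(35_000_000, percentage_cap)


print(max_eu_ai_act_fine(100_000_000))    # 35000000: the fixed amount dominates
print(max_eu_ai_act_fine(2_000_000_000))  # 140000000: 7% of EUR 2 billion
```

The two caps cross at €500 million of turnover, where 7% equals exactly €35 million; above that, exposure grows with company size.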

A Warning: Why the Reactive Approach Fails

Even though business leaders recognize how important responsible AI is, most believe their organizations are not ready to put it into practice. Only 6% describe their responsible AI practices as fully operational, and fewer than 1% are innovators. The gap between intention and execution shows that responsibility programs added late in the process fail because of how they are structured.

Retrofitting responsibility onto systems already in production is much harder. Retraining models built on biased data is expensive. Rushing governance mechanisms into place creates complications. Hardening security after the fact can make systems harder to use. Records may not show how earlier decisions were made. And once trust is broken, it can take a long time to rebuild.

The Eight Main Dimensions in the Real World

To turn these eight dimensions into real processes rather than abstract ideas, you need to build responsibility in from the start.

Fairness: A healthcare company building AI for diagnostic recommendations does not simply train its model on historical data. It also checks for fairness by ensuring the training data includes patients from diverse backgrounds and by testing the recommendations across age, gender, and socioeconomic groups.

Explainability: An AWS customer using AI to support loan approvals adopts Amazon SageMaker Clarify from the start, so data scientists can examine how changes to features affect predictions before the model is used in production.

Privacy and Security: A financial services organization encrypts its training data, enforces role-based access controls, and reviews model access logs.

Safety: A company that builds customer-support chatbots automatically escalates difficult cases to human agents, without customers having to ask. This keeps people involved in important decisions.

Veracity: Teams using Amazon SageMaker Model Monitor set up continuous performance tracking from the first day of deployment, so model drift is detected automatically and can trigger retraining workflows.

Governance: Clear governance makes explicit who is responsible for keeping models running correctly, conducting fairness assessments, and enforcing security requirements.

Transparency: Documentation for each AI system describes its training data, model architecture decisions, and known limitations.

Data Minimization: To respect people's privacy, businesses collect only the information needed for a specific task.
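Data minimization can be enforced in code by declaring, for each purpose, the fields that task is allowed to see and dropping everything else before the data reaches a model. The purposes, field names, and applicant record below are hypothetical.

```python
# Hypothetical allowlist: each processing purpose sees only the fields it needs.
ALLOWED_FIELDS = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
    "fraud_detection": {"transaction_amount", "merchant_category", "timestamp"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields declared for `purpose`.

    Raises KeyError for undeclared purposes, so every new use must be approved explicitly.
    """
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


applicant = {
    "full_name": "Jane Doe",
    "email": "jane@example.com",
    "date_of_birth": "1990-04-12",
    "income": 52000,
    "existing_debt": 8000,
    "payment_history": "no missed payments",
}
print(minimize(applicant, "credit_scoring"))
# {'income': 52000, 'existing_debt': 8000, 'payment_history': 'no missed payments'}
```

Using an allowlist rather than a blocklist means a newly added sensitive field is excluded by default instead of leaking through.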

Five Practical Steps: From Planning to Execution

Making responsible AI a reality takes both planning and execution. Here are five steps to deliver on that commitment:

Step 1: Inventory the AI systems you already have

Start by cataloging every AI system that is already in production or under development. For each one, record what it does, what data it uses, whom it affects, what decisions it makes, and what risks it may pose.
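An inventory is most useful when every entry records the same fields. The sketch below captures the attributes this step lists in a dataclass and picks which systems to review first. The example systems are hypothetical, and the prioritization rule (live systems with at least one known risk) is an assumed starting point rather than a standard.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    name: str
    purpose: str
    data_sources: list[str]
    affected_people: list[str]
    decisions: str
    risks: list[str] = field(default_factory=list)
    in_production: bool = False


# Hypothetical inventory entries.
inventory = [
    AISystem(
        name="resume-screener",
        purpose="Rank job applicants",
        data_sources=["applicant CVs"],
        affected_people=["job applicants"],
        decisions="Shortlists candidates for interviews",
        risks=["possible bias against protected groups"],
        in_production=True,
    ),
    AISystem(
        name="report-summarizer",
        purpose="Summarize internal reports",
        data_sources=["internal documents"],
        affected_people=["employees"],
        decisions="None; output is advisory",
    ),
]


def review_first(systems):
    """Names of live systems with at least one recorded risk."""
    return [s.name for s in systems if s.in_production and s.risks]


print(review_first(inventory))  # ['resume-screener']
```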

Step 2: Define governance structures and accountability

Without accountability, governance is just theater. Establish an AI governance committee with clearly defined responsibilities, but don't let the committee become a bottleneck for development.

Step 3: Establish policies and ethical standards

Principles you can't apply aren't useful. Write guidelines that turn high-level commitments into practical guidance.

Step 4: Plan for monitoring and measurement

Governance requires ongoing monitoring. Companies that don't monitor their data in real time are 34% less likely to see revenue growth and 65% less likely to achieve cost savings.
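A monitoring plan is easier to enforce when its limits are written down as data. The sketch below checks observed metrics against a hypothetical plan and reports anything that is out of bounds or missing. The metric names and thresholds are assumptions; in production, the observed values would come from your monitoring stack.

```python
# Hypothetical plan: metric name -> ("min" or "max", limit).
MONITORING_PLAN = {
    "accuracy": ("min", 0.85),
    "demographic_parity_difference": ("max", 0.10),
    "p95_latency_ms": ("max", 300),
}


def check_metrics(observed: dict) -> list[str]:
    """Return an alert for every metric that is missing or outside its limit."""
    alerts = []
    for metric, (kind, limit) in MONITORING_PLAN.items():
        value = observed.get(metric)
        if value is None:
            alerts.append(f"{metric}: no data reported")
        elif kind == "min" and value < limit:
            alerts.append(f"{metric}: {value} is below the minimum of {limit}")
        elif kind == "max" and value > limit:
            alerts.append(f"{metric}: {value} is above the maximum of {limit}")
    return alerts


healthy = {"accuracy": 0.91, "demographic_parity_difference": 0.04, "p95_latency_ms": 180}
degraded = {"accuracy": 0.80, "demographic_parity_difference": 0.04}

print(check_metrics(healthy))  # []
print(check_metrics(degraded))
# ['accuracy: 0.8 is below the minimum of 0.85', 'p95_latency_ms: no data reported']
```

Treating a missing metric as an alert matters: a silent dashboard is itself a monitoring failure.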

Step 5: Commit to continuous improvement

An AI program is most dangerous when it stops evolving. AI systems operate amid regulations, data, and social norms that are constantly changing.

Responsibility in Practice: Real-World Case Studies

Case Study 1: Microsoft’s AI for Clinical Documentation and Stanford Medicine

Stanford Medicine rolled out Nuance Dragon Ambient eXperience Copilot (DAX Copilot) across its organization. The system listens to clinical encounters and drafts clinical notes automatically.

Case Study 2: Using AI Responsibly to Improve Healthcare

The askNivi team built a retrieval-augmented chatbot to help people in low-resource settings find healthcare information. Rather than treating responsibility as a task for after deployment, they built it into the entire development process.

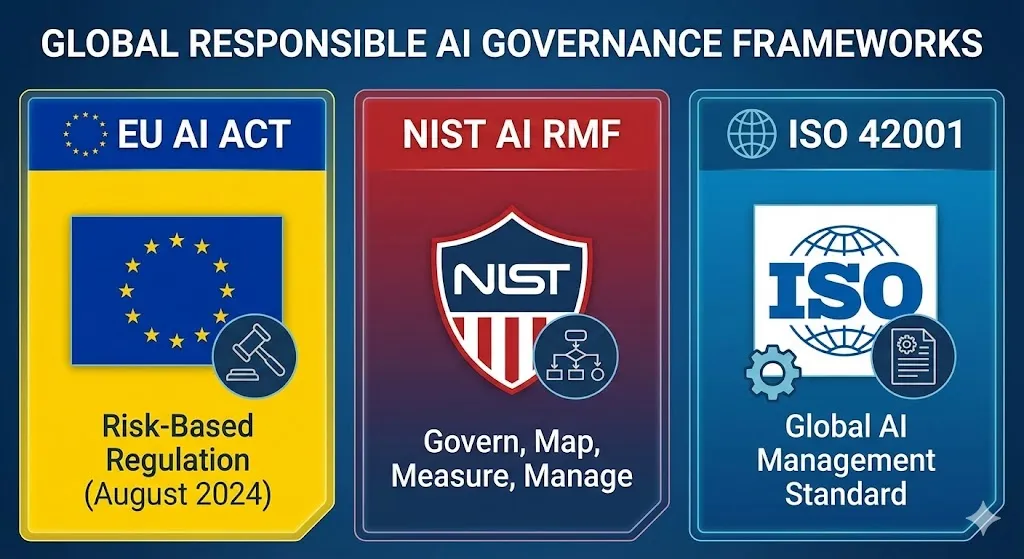

Global Rules That Shape Practice: Frameworks for Responsible AI Governance

The EU AI Act

The EU AI Act, which entered into force on August 1, 2024, sorts AI systems into four risk tiers, each with its own legal obligations:

Unacceptable Risk: Prohibited outright (for example, social scoring).

High-Risk AI: Requires risk assessments and human oversight.

Limited Risk: Requires transparency (for example, telling users they are interacting with AI).

Minimal Risk: No specific obligations; covers most general-purpose uses.
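Teams often record each use case's risk tier in code or configuration so that the matching obligations travel with the system. The sketch below encodes the four tiers and the simplified obligations summarized above. The example use cases and their classifications are illustrative only; classifying a real system under the Act requires legal review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"  # the transparency tier
    MINIMAL = "minimal"


# Simplified obligations per tier, paraphrasing the summary above (not legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not develop or deploy"],
    RiskTier.HIGH: ["risk assessment", "human oversight"],
    RiskTier.LIMITED: ["tell users they are interacting with AI"],
    RiskTier.MINIMAL: [],
}

# Illustrative, hypothetical classifications.
USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> list[str]:
    """Look up the obligations attached to a use case's risk tier."""
    return OBLIGATIONS[USE_CASES[use_case]]


for name in USE_CASES:
    print(f"{name}: {USE_CASES[name].value} -> {obligations_for(name) or 'no specific obligations'}")
```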

The NIST AI Risk Management Framework

The AI Risk Management Framework from the U.S. National Institute of Standards and Technology (NIST) is a voluntary U.S. framework that organizations around the world also use. It structures AI risk management into four functions: Govern, Map, Measure, and Manage.

ISO/IEC 42001: The Global Standard for AI Management

ISO/IEC 42001 is the internationally recognized standard for AI management systems. Certification demonstrates that an organization applies it to how it manages data, models, and performance evaluation.

The Next Step: Overcoming Implementation Challenges

Most companies know they should act responsibly, but their practices don't always match their commitments. Identifying the common obstacles is the first step toward addressing them strategically:

Operational silos and governance gaps.

Insufficient knowledge and skills.

Shadow AI (AI tools adopted without official approval or oversight).

Problems with data access and use.

Five Simple Principles for Everyday Practice

Fairness: AI systems shouldn't favor one group over another.

Transparency: People need to understand how AI systems work.

Accountability: Companies need to know who is responsible.

Security and privacy: Protect sensitive information at every step.

Safety and reliability: AI systems must work correctly and must not cause unintended harm.

Frequently Asked Questions

Q-1: Does responsibility slow down AI adoption? A: This is probably the most harmful myth about AI. In practice, building responsibility in from the start speeds up deployment.

Q-2: What should companies do first when building trustworthy AI systems? A: Make sure everyone knows their role, and establish clear policies.

Q-3: Is responsible AI only for large enterprises, or can small businesses use it too? A: Responsible AI practices benefit organizations of every size.

Q-4: How do we balance responsibility with rapid innovation? A: This is a false trade-off. Responsibility doesn't impede new ideas; it makes them sustainable over the long term.

Q-5: What is the difference between compliance and responsible AI? A: Following the rules does not by itself make AI responsible. Responsibility goes beyond compliance.

Conclusion: The Need for Responsibility from the Start

We need to change how we approach AI now. “Move fast and break things” no longer works. When AI systems fail, they erode trust, harm communities, and can break the law. Companies that build accountability into their culture from the start create systems that run faster, scale with greater confidence, and deliver real value to everyone involved.

Responsible AI doesn't mean slowing progress or blocking new ideas. It means building AI systems that people can trust, that reflect the company's values, that operate safely and fairly, and that give the company a lasting competitive edge. Every company that builds or uses AI must choose between adding responsibility after problems arise and designing it into the system from the start.

The evidence is clear. Companies with mature responsible AI practices see greater benefits, such as faster revenue growth, more efficient operations, more satisfied customers and employees, and lower operational risk. This is the right thing to do, and it is also a smart business decision at a time when trust matters more than ever.

Leaders in technology, business, and every level of the organization should take ownership from the beginning. Make policies explicit, hold people accountable, build operational processes, monitor continuously, and foster a culture of continuous improvement. Companies that do this will lead their sectors, attract the best talent, earn their customers' trust, and use AI to make things better.