Introduction to the Generative AI Revolution in 3D Worlds

If you are a developer, game creator, or just curious about how virtual worlds are built, you are in the right place. This article covers what you need to know about AI-driven 3D asset generation, from the technology behind it to the tools you can start using today. We will discuss how companies such as NVIDIA, Meta, and Shutterstock are changing the game and what this implies for the future of the metaverse.

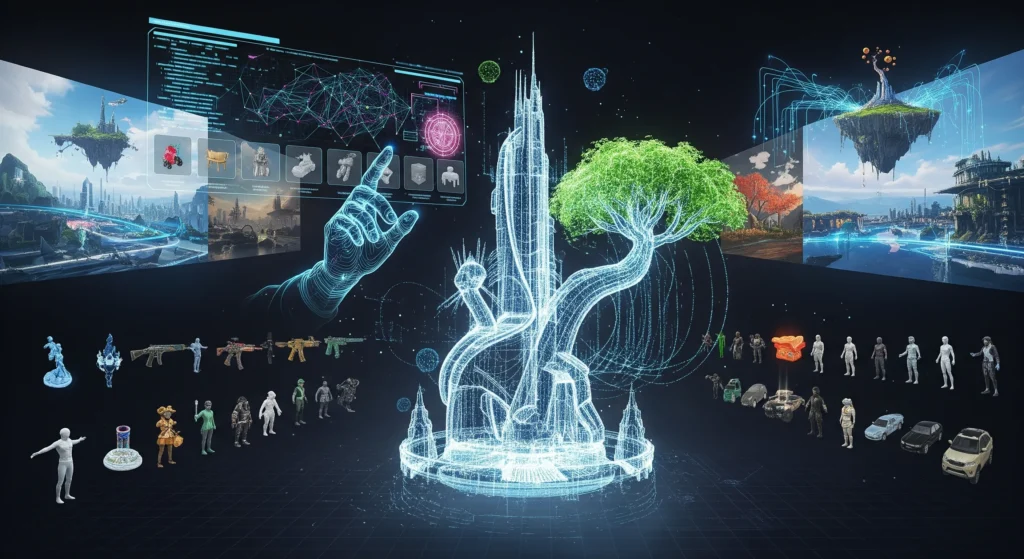

What Is Generative AI for 3D Worlds?

Let’s start simple. Generative AI for 3D worlds refers to AI systems that can automatically generate three-dimensional (3D) digital objects, characters, environments, and whole virtual spaces. Instead of employing a team of 3D artists to hand-sculpt each tree, building, or character, you can tell the AI what you want in plain English, or even provide a picture, and have it created on your behalf.

Think of it this way: remember when pictures could only be made by hand? Then came cameras. Then photo editing software. We now have AI that can create novel images on its own. The same evolution is taking place in 3D.

The metaverse (the interconnected virtual worlds in which people socialize, play games, work, and shop) requires large volumes of content. Making all of that content the old-fashioned way is no longer viable. This is where generative AI comes in as the ultimate productivity tool.

Why This Matters Right Now

The timing couldn’t be better. The global market for generative AI in the metaverse is booming. It was estimated to be worth approximately $40 million in 2023. Analysts estimate it will reach $611 million by 2033, a compound growth rate of more than 31 percent per year.

Projected Growth of the Metaverse Generative AI Market

| Year | Market Value (Millions USD) | Annual Growth Rate (%) |
| --- | --- | --- |
| 2023 | 40 | – |
| 2033 | 611 | 31+ |
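The growth rate in the table can be checked with the standard compound annual growth rate formula. The sketch below uses only the two market figures quoted above; the function name is just an illustrative choice.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.31 means 31%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article: $40M in 2023 and $611M in 2033.
cagr = compound_annual_growth_rate(40, 611, 2033 - 2023)
print(f"Implied annual growth: {cagr:.1%}")
```

The result lands a little above 31 percent, which matches the "31+" entry in the table.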

Yet, here is where it gets really interesting: conventional 3D modeling is hard. Software such as Blender or Maya takes years to learn. You need to understand intricate concepts such as UV mapping, polygon topology, and physically-based rendering. With AI tools, a person with no previous 3D experience can produce production-quality assets in only a few minutes.

That is not only convenient, it is democratizing. It means that indie game makers, small companies, and individual creators can compete with large studios.

The Technology Behind the Magic

So how does this work? Let’s lift the hood without getting overly technical.

Text-to-3D Generation

Text-to-3D is the most popular approach at the moment. You enter a prompt such as “a medieval wooden table with intricate carvings” and the AI generates a 3D model of that table.

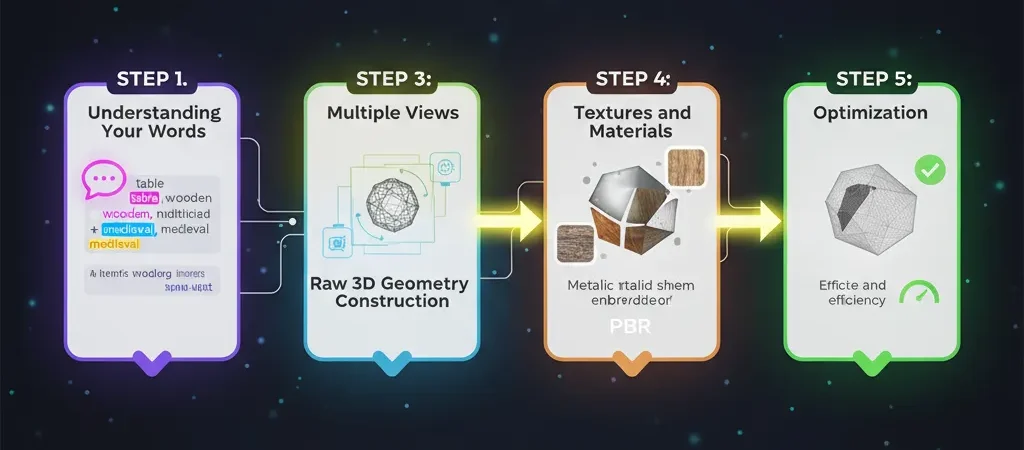

The simplified process is as follows:

Step 1: Understanding Your Words

The AI uses natural language processing (NLP) to interpret the prompt. It identifies the key object (table), material (wooden), style (medieval), and features (intricate carvings).

Step 2: Generating Multiple Views

Diffusion models create many 2D images of your object from various angles. This helps the final 3D model look right from every direction.

Step 3: 3D Geometry Construction

The AI uses representations such as signed distance functions (SDFs) or neural radiance fields (NeRFs) to build the actual 3D shape. Think of it as sculpting digital clay, except that it happens automatically.
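To give a feel for what a signed distance function is, here is a minimal sketch for the simplest possible shape, a sphere. It is only an illustration of the representation, not how any of the tools in this article build geometry: generative systems learn far more complex functions, and the grid sampling helper is a hypothetical stand-in for a real surface extraction step.

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from `point` to the surface of a sphere.

    Negative inside the sphere, zero on the surface, positive outside.
    """
    return math.dist(point, center) - radius


def occupancy_grid(sdf, resolution=8, extent=1.5):
    """Sample an SDF on a regular grid and mark the samples that fall inside the shape."""
    step = 2 * extent / (resolution - 1)
    coords = [-extent + i * step for i in range(resolution)]
    return [[[sdf((x, y, z)) <= 0.0 for z in coords] for y in coords] for x in coords]


grid = occupancy_grid(sphere_sdf)
inside = sum(cell for plane in grid for row in plane for cell in row)
print(f"{inside} of {8 ** 3} grid samples lie inside the unit sphere")
```

The sign of the function is what makes it useful: the surface of the object is simply the set of points where the value is zero.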

Step 4: Textures and Materials

The system applies realistic textures, colors, and material properties, such as whether a surface looks metallic or matte. These are described using physically-based rendering (PBR) materials.
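To make the metallic-versus-matte idea concrete, here is a small sketch of how PBR material parameters are often represented. The field names follow the metallic-roughness convention used by formats such as glTF 2.0; the `PBRMaterial` class and the sample values are hypothetical and are not taken from any of the tools discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PBRMaterial:
    """Hypothetical container for metallic-roughness PBR parameters."""
    name: str
    base_color: tuple  # (r, g, b), each between 0.0 and 1.0
    metallic: float    # 0.0 = non-metal, 1.0 = metal
    roughness: float   # 0.0 = mirror-smooth, 1.0 = fully matte

    def __post_init__(self):
        # Reject values outside the normalized range expected by the convention.
        for label, value in (("metallic", self.metallic), ("roughness", self.roughness)):
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{label} must be between 0 and 1, got {value}")
        if len(self.base_color) != 3 or not all(0.0 <= c <= 1.0 for c in self.base_color):
            raise ValueError("base_color must be three values between 0 and 1")


polished_steel = PBRMaterial("polished_steel", (0.8, 0.8, 0.85), metallic=1.0, roughness=0.2)
matte_oak = PBRMaterial("matte_oak", (0.45, 0.3, 0.15), metallic=0.0, roughness=0.9)
print(polished_steel)
print(matte_oak)
```

A renderer uses these few numbers to decide how light bounces off the surface, which is why the same model can look right under very different lighting.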

Step 5: Optimization

Finally, the model is optimized so that it performs well in game engines and runs smoothly on a range of hardware.

The AI Architectures Driving This Revolution

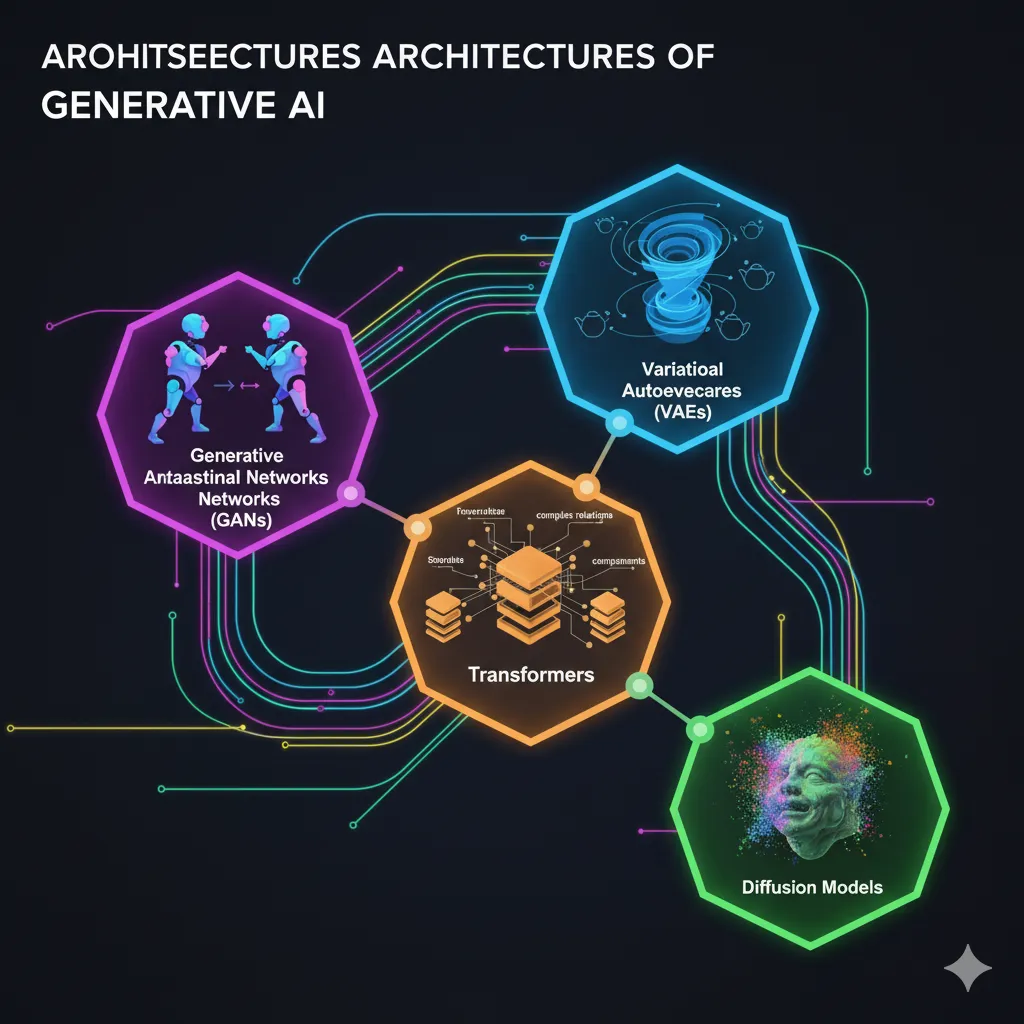

This is made possible by a number of AI architectures:

Generative Adversarial Networks (GANs): These consist of two competing networks. One generates 3D models and the other evaluates them. This competition is what keeps pushing quality up.

Variational Autoencoders (VAEs): These are trained to compress 3D objects into compact codes and then re-create them with variations.

Diffusion Models: The most popular at the moment. They start from random noise, which is gradually refined into a detailed 3D object. Think of an artist beginning with a rough sketch and refining it step by step.

Transformers: The same architecture that powers ChatGPT is now applied to 3D generation, helping the AI understand complex relationships between the parts of an object.
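The noise idea behind the diffusion models described above can be sketched in a few lines. This toy example applies the standard forward noising formula to the corner points of a cube and then inverts it using the exact noise that was added. In a real system a trained neural network has to predict that noise, and the data is usually a richer 3D representation than eight points, so treat the function names and the linear schedule values as illustrative assumptions.

```python
import math
import random


def alpha_bar_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(points, alpha_bar, rng):
    """Forward step: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    signal, noise_scale = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    noise = [[rng.gauss(0.0, 1.0) for _ in p] for p in points]
    noisy = [[signal * c + noise_scale * n for c, n in zip(p, ns)]
             for p, ns in zip(points, noise)]
    return noisy, noise


def remove_noise(noisy, noise, alpha_bar):
    """Invert the forward step given a noise estimate (a trained network would supply it)."""
    signal, noise_scale = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[(c - noise_scale * n) / signal for c, n in zip(p, ns)]
            for p, ns in zip(noisy, noise)]


rng = random.Random(0)
alpha_bars = alpha_bar_schedule()
cube_corners = [[x, y, z] for x in (-1.0, 1.0) for y in (-1.0, 1.0) for z in (-1.0, 1.0)]
noisy, noise = add_noise(cube_corners, alpha_bars[500], rng)
recovered = remove_noise(noisy, noise, alpha_bars[500])
print("noisy corner:    ", [round(c, 3) for c in noisy[0]])
print("recovered corner:", [round(c, 3) for c in recovered[0]])
```

Generation runs this logic in reverse over many steps: start from pure noise and repeatedly subtract the network's noise estimate until a clean shape remains.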

The Major Players and Their Tools

NVIDIA: Leading the Charge

NVIDIA has been leading with a number of ground-breaking technologies in this space.

Their first major splash was GET3D. Published in 2022, GET3D learns from 2D images to generate textured 3D shapes. What makes it special? It generates meshes with proper topology, i.e., the 3D models are not only nice to look at but are actually usable in applications and games.

On a single NVIDIA GPU, GET3D can generate about 20 shapes per second. That is remarkable considering that a single asset could take hours with traditional modeling.

Their more recent and more capable system is NVIDIA Edify 3D. Announced in 2024 and refined in 2025, Edify 3D can produce production-ready 3D assets in less than 2 minutes. It generates clean, easy-to-edit quad meshes and 4K textures, with built-in physically-based rendering materials.

What is even more interesting is that Edify is based on a multi-view diffusion method. It generates views of the requested object from several angles and then uses a transformer-based reconstruction model to combine those views into a complete 3D object. This keeps the result consistent, with no strange artifacts where the back fails to match the front.

Shutterstock/NVIDIA Partnership

In mid-2024, Shutterstock partnered with NVIDIA to introduce what was billed as the first ethical generative 3D API. The service is built on NVIDIA Edify and trained solely on Shutterstock’s licensed library, which includes more than 500,000 3D models and 650 million images.

Why does this matter? Copyright and ethics. Many AI models are trained on data scraped from the internet without the consent of its creators. Shutterstock’s approach compensates the original creators through its Contributor Fund.

The service offers text-to-3D and image-to-3D generation. You can generate a preview in about 10 seconds and then turn it into a high-quality model with PBR materials. Generative credit packs start at $25, which keeps it affordable for businesses of all sizes.

They also provide a 360-degree HDRi background generator, which synthesizes natural lighting environments for 3D scenes and is ideal for making your assets look realistic in whatever environment you choose.

Meta’s 3D AssetGen

Meta (previously Facebook) has been working on its own system, 3D AssetGen. The most recent release, AssetGen 2.0, arrived in May 2025.

AssetGen 2.0 uses a two-model approach:

Model 1: Geometry Generation: This model uses single-stage 3D diffusion to generate the 3D mesh structure. This produces shapes with fine geometric detail and consistency.

Model 2: Texture Generation (TextureGen): Adds high-quality textures with better view consistency. It addresses typical issues such as texture seams and the so-called Janus effect (where features such as faces are incorrectly duplicated).

Material quality is what sets Meta’s approach apart. AssetGen generates PBR materials that support realistic relighting. When the lighting environment changes, the object responds accordingly; for example, cloth and metallic surfaces reflect light differently.

Meta reported a 17 percent improvement in geometric accuracy and a 40 percent improvement in perceptual quality over earlier techniques. In user tests, people preferred AssetGen outputs 72 percent of the time over competing services of comparable speed.

Other Notable Tools

The market is filling up with options, which is great news for users:

Meshy AI: Known for quick generation and photogrammetry texturing. Supports text-to-3D and image-to-3D (PBR). Starting at $20/month.

Tripo AI: Fast platform with skeleton export for animation and smart low-poly capabilities. Great for game developers. Plans from $29.99 to $199.99/month.

3D AI Studio: Fairly easy to use with options to customize. Good for beginners. Free plan with premium at approximately $20/month.

Luma AI Genie: Best for photorealistic texturing and for experimenting with textures on AI-generated 3D models. Starting at $29.99/month.

Sloyd: Focuses on game-ready assets that are procedurally generated with AI. Well suited to props and architecture. $15/month.

Real-World Applications

This is not purely theoretical technology; it is already being used in various industries.

Gaming and Entertainment

Game developers are embracing AI 3D generation to fill huge open worlds. Rather than hand-crafting hundreds of unique trees, rocks, or buildings, they can create variations in bulk.

Sunny Valley Studio demonstrated using text prompts to create game assets in real time. This accelerates prototyping and allows small teams to compete with bigger studios.

For characters, these tools can create rigged avatars (with the skeletal framework required to make them move) that are ready to animate. What once took days now takes minutes.

Architecture and Construction

Architects are feeding in floor plans or sketches and receiving 3D visualizations almost immediately. This speeds up both design and client presentations.

One architectural firm demonstrated AI creating the entire exterior of a building from a basic text description, complete with appropriate materials and architectural style.

E-commerce and Product Visualization

Retailers are adopting image-to-3D workflows to create realistic product models for virtual showrooms and augmented reality shopping experiences.

Think about photographing a product once and then letting AI create a 3D model that allows customers to see every detail or place it in their home using AR. That’s happening today.

Film and Virtual Production

Productions such as The Mandalorian pioneered real-time virtual production, and AI is building on that foundation. Directors can now request a background or set piece and see it generated on the fly, which can cut post-production time considerably.

Metaverse Development

Platforms such as Decentraland and The Sandbox require enormous amounts of content. Generative AI allows varied 3D assets to be produced in large numbers.

Play-to-earn games use AI to produce rare, one-of-a-kind items and collectibles in the form of NFTs, giving players ownership of these digital items.

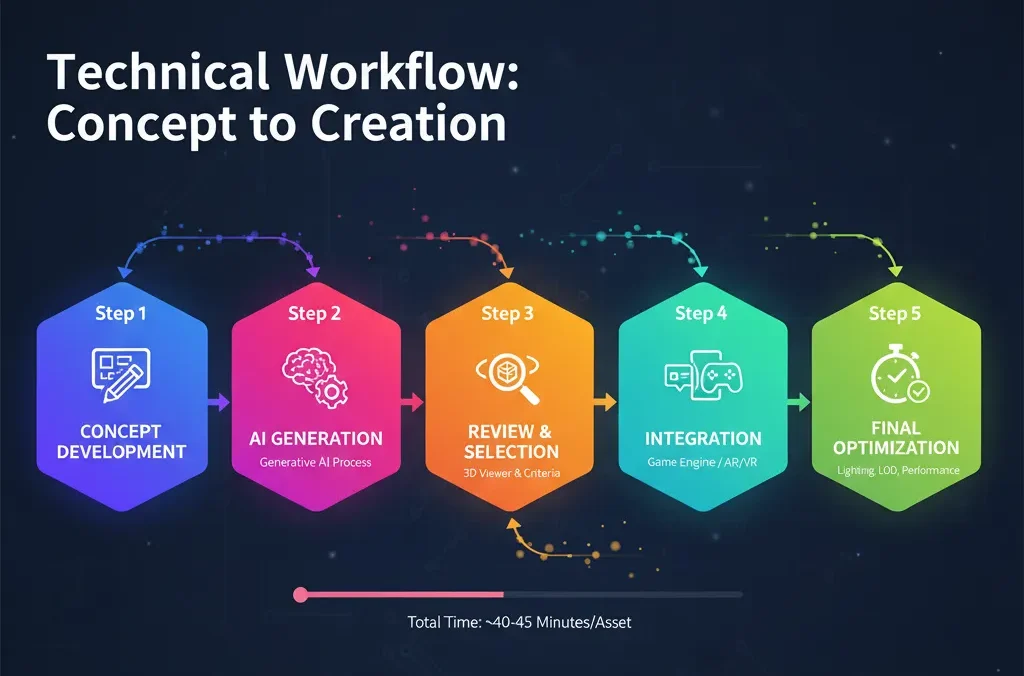

The Technical Workflow: From Concept to Creation

To make this practical, let’s walk through a typical AI 3D generation workflow.

Step-by-Step AI 3D Generation Workflow

Concept Development (5 minutes): Define what you need. Design mood boards or reference images. Establish technical requirements such as polygon count (for game performance) and art style.

AI Generation (10 minutes): Generate 3-4 variations using your chosen tool. Enable PBR textures. Export in your desired format (GLB, OBJ, FBX, USD).

Review and Selection (5 minutes): Preview models in a 3D viewer. Make sure they fit your project’s style. Confirm technical requirements. Choose the most appropriate version or regenerate if required.

Integration (15 minutes): Import into your game engine (Unity, Unreal). Adjust materials. Configure LOD (Level of Detail) to optimize performance.

Final Optimization (5-10 minutes): Fine-tune for the scene’s lighting. Add any special effects. Set up collision meshes where necessary.

Total time: approximately 40-45 minutes per asset, compared with the hours or days that traditional methods take.
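The step durations listed above add up as follows. The sketch also estimates how many assets one person could finish in a day; the 8-hour working day is an assumption made for the example, not a figure from the workflow itself.

```python
# (minimum, maximum) minutes per step, taken from the workflow above.
WORKFLOW_MINUTES = {
    "Concept Development": (5, 5),
    "AI Generation": (10, 10),
    "Review and Selection": (5, 5),
    "Integration": (15, 15),
    "Final Optimization": (5, 10),
}


def total_minutes(steps):
    """Return the (fastest, slowest) total time for one asset."""
    fastest = sum(low for low, _ in steps.values())
    slowest = sum(high for _, high in steps.values())
    return fastest, slowest


def assets_per_day(steps, working_hours=8):
    """Return the (pessimistic, optimistic) number of whole assets per working day."""
    fastest, slowest = total_minutes(steps)
    minutes_available = working_hours * 60
    return minutes_available // slowest, minutes_available // fastest


print("Minutes per asset:", total_minutes(WORKFLOW_MINUTES))
print("Assets per 8-hour day:", assets_per_day(WORKFLOW_MINUTES))
```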

Training AI for 3D Models

Have you ever wondered how these AI systems learn to create 3D content? The process is fascinating.

Data Collection

To begin with, you need huge quantities of 3D models. Popular sources include:

ShapeNet: Thousands of categorized 3D models.

ModelNet: Another widely used training dataset.

Premium proprietary data: Shutterstock and other companies have their own licensed libraries.

The final results depend on the quality and quantity of the training data. Most professional systems are trained on hundreds of thousands or millions of 3D models.

Data Preparation

Models must be standardized:

Uniform file formats (OBJ, STL, etc.).

Normalized scale and orientation.

Well-labeled metadata.

Data augmentation (making variations).
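As an example of the "normalized scale" step in the list above, here is a minimal sketch that centers a mesh at the origin and rescales it so that its longest side has length 1. Real pipelines typically also fix orientation and work on full mesh files; the function name and the sample vertices are illustrative.

```python
def normalize_vertices(vertices):
    """Center a mesh at the origin and scale it so its longest side has length 1."""
    if not vertices:
        raise ValueError("mesh has no vertices")
    mins = [min(v[axis] for v in vertices) for axis in range(3)]
    maxs = [max(v[axis] for v in vertices) for axis in range(3)]
    center = [(low + high) / 2 for low, high in zip(mins, maxs)]
    longest_side = max(high - low for low, high in zip(mins, maxs))
    # Guard against a degenerate mesh where every vertex is the same point.
    scale = 1.0 / longest_side if longest_side > 0 else 1.0
    return [tuple((v[axis] - center[axis]) * scale for axis in range(3)) for v in vertices]


raw = [(10.0, 0.0, 0.0), (14.0, 2.0, 0.0), (10.0, 2.0, 1.0)]
normalized = normalize_vertices(raw)
for vertex in normalized:
    print(vertex)
```

After this step every model in the dataset occupies the same region of space, so the network does not have to learn that a table modeled in millimeters and one modeled in meters are the same kind of object.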

Model Architecture Selection

Developers select the appropriate AI model:

GANs for high-quality, realistic outputs.

VAEs for generating variations.

Diffusion models for state-of-the-art quality.

Transformers for understanding complex relationships.

Training Process

Training is very computationally intensive. Modern diffusion models may require:

Hundreds of thousands to millions of 3D models as training data.

Weeks of computation time on powerful GPUs.

Specialized software frameworks (e.g., PyTorch, TensorFlow).

The Power of Hybrid Approaches: AI + Procedural Generation

Procedural generation means creating content algorithmically from rules. Games such as Minecraft have used it to create effectively infinite worlds. Combine it with AI and you get the best of both: the diversity and scale of procedural systems along with the quality and creativity of AI.

For example, an AI could produce individual building styles while procedural algorithms arrange them into complete cities. Or AI could generate individual character faces while procedural systems handle body variations and clothing combinations.

This hybrid approach is well suited to metaverse development.
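The city example can be sketched with a simple rule-based layout. Here the building style names stand in for assets an AI tool would have generated, and the grid rules (a fixed chance of a park on each block) are invented for the illustration.

```python
import random


def layout_city(building_styles, blocks_x, blocks_y, seed=0, park_chance=0.15):
    """Procedurally assign a building style (or a park) to each block of a grid."""
    rng = random.Random(seed)  # seeded so the same city can be regenerated later
    city = []
    for _ in range(blocks_y):
        row = []
        for _ in range(blocks_x):
            if rng.random() < park_chance:
                row.append("park")
            else:
                row.append(rng.choice(building_styles))
        city.append(row)
    return city


# Placeholder names for AI-generated building assets.
ai_generated_styles = ["art_deco_tower", "brick_townhouse", "glass_office"]
city = layout_city(ai_generated_styles, blocks_x=6, blocks_y=4, seed=42)
for row in city:
    print(" | ".join(row))
```

Because the layout is driven by a seed, a platform only needs to store the seed and the handful of generated styles to reproduce an entire district on demand.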

Challenges and Limitations

This technology may be amazing, but it is not flawless. Let’s be honest about the current issues.

Technical Challenges

Balancing quality and performance: Detailed models look beautiful but they slow down games and virtual worlds. Striking the right balance is difficult.

Cross-platform compatibility: Models must be compatible with VR headsets, smartphones, PCs, and web browsers. Each has different capabilities.

Consistency and accuracy: AI can produce strange artifacts or fail to deliver exactly what you requested. Several attempts may be needed to get it right.

Processing requirements: Although faster than manual modeling, AI generation requires powerful hardware. Cloud services help, but they come with recurring costs.

Ethical and Legal Issues

Copyright issues: Who owns AI-generated content? What about the training data used? These questions are still being settled legally.

Bias in AI models: If the training data is not diverse, the generated content may reflect those biases. This can perpetuate stereotypes in virtual worlds.

Job displacement: Will AI displace 3D artists? The reality is more nuanced. AI handles monotonous tasks and frees artists to do creative work. However, the industry is certainly evolving.

Data privacy: Metaverse platforms collect vast amounts of user data. AI systems should have privacy safeguards in place.

Cost Considerations

Although AI generation is generally more cost-effective than conventional approaches, it has some hidden costs:

Training needs: Employees will need to be trained on new tools (costs range from $10,000 to $50,000 per model).

Hardware requirements: High-end GPUs can cost up to $10,000 each.

Maintenance and updates: An ongoing 10-20 percent of the original investment.

Scalability costs: Additional users require additional computing power.

Cloud-based services such as AWS or Google Cloud can be used to spread these expenses.

The Future: What Will It Be Like in 2026 and Beyond?

The pace of technological change is extraordinary. Here is what experts forecast for the near future.

Real-Time Generation

Imagine being in a virtual world where new content is generated around you as you explore. No waiting and no loading screens. Just unique, effectively infinite environments created on the fly.

NVIDIA and other companies are working toward this; NVIDIA’s AI Blueprint for 3D object generation is one example. The goal? Automatically populating whole 3D worlds.

Video to 3D

Text and images are great, but what about video? Several research teams are working on systems that can watch a video of an object and produce a 3D model of the entire object.

This would be huge for capturing things in the real world, such as creating digital twins of real-life spaces.

AI-Powered Entire Worlds

Rather than producing discrete objects, future AI will produce entire, coherent worlds. Meta’s vision for AssetGen 2.0 includes autoregressive generation of complete 3D scenes.

Imagine describing a cyberpunk street at night, with neon lights and rain, and having a complete, populated, explorable environment within minutes.

Improved Physics and Animations

Existing tools are largely concerned with static objects. The next frontier is AI that understands how things move and interact: automated rigging, physics simulation, and dynamic behavior.

Multimodal Integration

AI systems will combine several kinds of input seamlessly. You can begin with a drawing, refine it by typing, add a reference picture, and get exactly what you have in mind.

Edge Computing and Mobile

At present, most AI 3D generation relies on powerful cloud servers. The technology will become more accessible and responsive as future developments allow more processing on local devices.

Best Practices for Using AI 3D Generation

Want to get the most out of these tools? Here are some pro tips:

Prompt Engineering

Be neither too broad nor too detailed: “A medieval wooden dining table with carved legs” works better than “table” or a long list of detailed specifications.

Mention materials: For example, wood, metal, plastic, or fabric. This helps the AI apply the right textures.

Include style keywords: Art deco, minimalist, baroque, cyberpunk, and similar keywords steer the aesthetics.

Give context: “For a fantasy game” or “product visualization” helps the AI produce something more useful to you.
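One way to apply these tips consistently is to assemble prompts from named parts instead of typing them freehand. The helper below is a hypothetical sketch; the function and its parameters are not part of any tool's API, and the resulting string would simply be pasted or sent to whichever generator you use.

```python
def build_prompt(subject, materials=(), style=None, context=None):
    """Assemble a text-to-3D prompt from subject, materials, style, and context."""
    if not subject.strip():
        raise ValueError("subject is required")
    parts = []
    if style:
        parts.append(style)
    if materials:
        parts.append(" and ".join(materials))
    parts.append(subject.strip())
    description = " ".join(parts)
    # Pick "A" or "An" based on the first letter of the description.
    article = "An" if description[0].lower() in "aeiou" else "A"
    prompt = f"{article} {description}"
    if context:
        prompt += f", {context}"
    return prompt


prompt = build_prompt(
    "dining table with carved legs",
    materials=["wooden"],
    style="medieval",
    context="for a fantasy game",
)
print(prompt)
```

Keeping the parts separate also makes it easy to generate controlled variations, for example the same subject in several styles.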

Quality Control

Create several variants: Most tools generate 3-4 options for each prompt. Pick the best.

Check all sides: Rotate your model in a 3D viewer to spot problems before it is integrated.

Test in the target environment: Import into your actual game engine or application early to discover compatibility issues.

Iterate and refine: Treat the output as a starting point. Manual touch-ups may still be needed.
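Part of quality control can be automated. As a minimal sketch, the code below counts vertices and faces in a Wavefront OBJ file (one of the export formats mentioned earlier) and compares the face count with a polygon budget. The budget and the tiny sample mesh are made up for the example.

```python
def count_obj_geometry(obj_text):
    """Count vertex ("v") and face ("f") records in Wavefront OBJ text."""
    vertices = faces = 0
    for line in obj_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "v":      # geometric vertex; "vn" and "vt" are not counted
            vertices += 1
        elif fields[0] == "f":    # one polygon face
            faces += 1
    return vertices, faces


def within_budget(obj_text, max_faces):
    """Return True if the model's face count fits the polygon budget."""
    _, faces = count_obj_geometry(obj_text)
    return faces <= max_faces


SAMPLE_OBJ = """\
# a single quad split into two triangles
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
vn 0 0 1
f 1 2 3
f 1 3 4
"""

print(count_obj_geometry(SAMPLE_OBJ))
print(within_budget(SAMPLE_OBJ, max_faces=5000))
```

A check like this will not catch visual problems, but it quickly filters out generations that are too heavy for the target platform.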

Optimization Strategies

Start simple: Build low-poly models first, then add fine detail.

Use LOD systems: Keep more than one version of the same asset at varying levels of detail. Show the detailed version up close and simplified versions at a distance.

Generate in batches: When you need lots of similar items, generate them together for consistency.

Maintain asset libraries: Archive successful generations for future use and reference.
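The LOD idea from the list above comes down to picking a version of the asset based on how far away it is. Game engines ship their own LOD systems, often driven by on-screen size rather than raw distance, so the following is only a simplified sketch with hypothetical thresholds and file names.

```python
from bisect import bisect_right

# Hypothetical distance thresholds (in scene units) and matching asset files.
LOD_DISTANCES = [10.0, 30.0, 80.0]
LOD_FILES = ["table_lod0.glb", "table_lod1.glb", "table_lod2.glb", "table_lod3.glb"]


def select_lod(distance, thresholds=LOD_DISTANCES):
    """Return the LOD index for a camera distance; 0 is the most detailed version."""
    if distance < 0:
        raise ValueError("distance cannot be negative")
    # Each threshold that the distance has reached moves us one level coarser.
    return bisect_right(thresholds, distance)


for distance in (5.0, 25.0, 50.0, 500.0):
    print(f"{distance:>6} units -> {LOD_FILES[select_lod(distance)]}")
```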

Integrating with Existing Workflows

AI does not replace conventional 3D pipelines; it enhances them.

For Game Development

Use AI for rapid prototyping and concept exploration.

Create background props and environmental assets in large numbers.

Generate base models that artists then refine and customize.

Populate huge open worlds quickly with diverse content.

For Product Design

Create concept models from sketches or descriptions.

Produce numerous options quickly for client review.

Create photorealistic renders for product marketing.

Create 3D models for AR try-before-you-buy experiences.

For Architecture

Create 3D visualizations from 2D floor plans almost immediately.

Generate furniture and interior design elements.

Add neighborhood and landscape context around buildings.

Generate multiple design options to present to the client.

For Film and Animation

Produce set pieces and backgrounds.

Design concept models of creatures and characters.

Build virtual spaces for previsualization.

Create props for real-time virtual production.

The Economics: ROI and Cost Savings

Let’s talk money, since that is what counts in the end for businesses.

Traditional vs. AI Modeling Costs

Traditional 3D modeling:

Junior 3D artist: $25-50/hour

Senior 3D artist: $75-150/hour

Time per asset: 4-20 hours of work

Cost per asset: $100-3,000 (4 hours at $25/hour up to 20 hours at $150/hour)

AI 3D generation:

Subscription costs: $15-200/month

Time per asset: 30 minutes to 1 hour

Effective cost per asset: $1-10

That is at least 10x cheaper, and often 100x or more. For a game with 1,000 unique assets, that can mean savings of hundreds of thousands of dollars.
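The comparison can be reproduced from the hourly rates, hours per asset, and subscription prices listed above. The number of assets generated per month is an assumption chosen for the example, so the per-asset AI figure will shift with your actual volume.

```python
def traditional_cost_range(hours=(4, 20), hourly_rate=(25, 150)):
    """Cheapest and most expensive cost for one hand-modeled asset, in dollars."""
    return hours[0] * hourly_rate[0], hours[1] * hourly_rate[1]


def ai_cost_per_asset(monthly_subscription, assets_per_month):
    """Effective subscription cost per generated asset, in dollars."""
    if assets_per_month <= 0:
        raise ValueError("assets_per_month must be positive")
    return monthly_subscription / assets_per_month


low, high = traditional_cost_range()
# Assumption for illustration: a $200/month plan used to generate 100 assets a month.
ai_cost = ai_cost_per_asset(monthly_subscription=200, assets_per_month=100)

print(f"Traditional: ${low:,} to ${high:,} per asset")
print(f"AI (assumed volume): ${ai_cost:.2f} per asset")
print(f"1,000 assets by hand: ${low * 1000:,} to ${high * 1000:,}")
```

Note that this counts only subscription fees. Time spent reviewing, integrating, and touching up each asset still has a labor cost.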

Real-World ROI Examples

A small indie game studio using AI 3D generation reported:

Produced 100+ magic objects.

9x faster production time.

Over $7,000 in cost savings.

Artists freed up to focus on hero assets and creative direction.

An architectural firm saw:

40% reduction in design time.

30% increase in productivity.

Ability to present multiple options to every client.

Faster project turnaround.

Scalability Benefits

The real magic is in the scale:

Small projects (1-50 assets): Modest savings, quicker turnaround.

Medium projects (50-500 assets): Large cost savings, enables smaller teams.

Large projects (500 or more assets): Transformative savings, competitive edge.

For metaverse platforms that require millions of assets, AI generation becomes a necessity rather than an option.

Final Verdict: The Future Is Being Built Now

We are in a disruptive era of digital content creation. Generative AI for 3D worlds is not only making things faster or cheaper; it is a paradigm shift in what can be done.

Small teams can now create experiences that once demanded huge studios. Individual designers can populate entire virtual worlds. The barrier to entry for 3D creation has dropped significantly.

However, this is not about replacing human creativity. The best results come from combining AI efficiency with human vision. AI handles the routine work of creating hundreds of background props, base models, and environments. Humans focus on the artistic direction, the hero assets, and the personal touches that make an experience memorable.

Looking ahead to 2026 and beyond, these tools can be expected to become even more powerful, accessible, and integrated into creative workflows. On-the-fly generation, video-to-3D, and full-world generation are not far off. They are being developed right now.

Whether you are a game developer, architect, filmmaker, or metaverse entrepreneur, generative AI for 3D assets is no longer optional. It’s becoming essential.

The metaverse is being built. AI is providing the means to do it faster, better, and more creatively than ever.

5 Unique FAQs

1. Can generative AI replace professional 3D artists?

No, but it changes their role. Think of the invention of the camera: photographers did not replace painters, but the nature of both occupations changed. AI handles repetitive, time-intensive tasks such as generating hundreds of background assets or initial concepts.

Professional artists focus on creative direction, refining AI output, producing hero assets that require a human touch, and maintaining artistic consistency. Research suggests that AI makes artists 30-40% more productive and frees them for higher-value creative work. Human-AI collaboration, rather than replacement, yields the best results.

2. How much does it cost to get started with AI 3D generation tools?

Way less than you’d think. Most platforms have free tiers, so you can begin experimenting without spending a penny. For serious work, entry-level subscriptions typically cost $15-30/month (such as Sloyd at $15/month or Tripo AI Pro at $29.99/month). Mid-range plans cost $50-120/month, and enterprise solutions with API access start at around $200/month.

Compare this with hiring a 3D artist at $25-150/hour. For a game that requires 1,000 assets, conventional costs could reach $100,000 or more. With AI, the same work might cost around $1,000-5,000. That’s a cost reduction of 95% or more. The investment practically pays for itself.

3. Which AI tool is best for 3D design in 2025?

It depends on your needs. NVIDIA Edify 3D and Sloyd are useful for game developers who need game-ready, optimized assets. Architects and product designers favor Meshy AI for its photorealistic textures and fast iteration. Shutterstock’s generative 3D service is ideal if you need commercially safe, ethically sourced assets.

Meta’s AssetGen 2.0 leads in material quality and realistic lighting. For beginners, 3D AI Studio has the simplest interface. And when money is tight, several tools have solid free options. Frankly, try a few; most of them have free plans. Your workflow and project type will determine which one feels right.

4. How do you train generative AI for 3D models?

Training from scratch is resource-intensive: it requires hundreds of thousands of 3D models as training data, expensive NVIDIA GPUs (costing up to $10,000 apiece), and weeks of computation time. Most people do not train their own models. Instead, they use ready-made models from companies such as NVIDIA, Meta, or Shutterstock, which have already done the heavy lifting.

If you do require custom training (perhaps you need a specific art style), the process consists of gathering or generating thousands of 3D assets, converting them into a form usable by the chosen AI architecture (GANs, VAEs, or diffusion models), running the training process on large-scale hardware, and refining the output. This is more accessible through cloud services such as AWS or Google Cloud and can range in price from

5. What other uses does AI have in the metaverse besides 3D asset creation?

AI drives the whole metaverse experience. Beyond creating 3D assets, AI enables NPCs (non-player characters) to talk and act. It creates dynamic missions and storylines that adapt to your preferences. AI provides real-time translation between users around the world. It generates personalized experiences based on your choices and behavior. Procedural content generation produces enormous virtual worlds full of natural terrain, architecture, and ecosystems.

AI helps manage virtual economies, detecting fraud and balancing supply and demand. It drives avatars that can mirror your expressions and movements. And it can optimize real-time rendering, adjusting detail based on what is visible to keep performance smooth. The metaverse as we envision it, a persistent, interactive, effectively infinite digital universe, would simply not exist without AI doing the heavy computational work behind the scenes.