Introduction of The Dark Side of AI Tools: Privacy and Data Processing

Imagine the following: you are talking to an AI assistant regarding a health issue, asking her to tell you what to do as you are experiencing certain symptoms. It is as if we were having a personal discussion. However, here is the point—that apparently harmless conversation may be feeding data to huge training programs, capable of seeing through contractors transcribing conversations, or even keeping it forever in servers that you are completely unaware of. And you’re not alone in this. We are all in this brave new world of AI offering convenience but in some cases, a nightmare of privacy instead.

Let’s be real for a second. The use of AI tools has been increasing in our daily lives more than we can keep pace. ChatGPT, Google Gemini, Meta AI, and dozens of others assure us that our life is going to be easier, as we are able to write an email, answer some questions, even do our homework. However, there is the black side of this tale that is not well discussed. The assistive replies are in the background which is a massive data harvesting activity that would be dizzying to your head.

Today, we are going to be in-depth exploring what is actually happening to your information when you are using AI tools. You will be informed of the outrageous ways in which these systems can invade your privacy, actual cases of things that have gone wrong, the statistics that will make you think thrice before entering sensitive information in a chatbot, and, what can be done to you above all, what you can personally do.

At the conclusion of this, you will have a good understanding of what you are becoming vulnerable to as well as the information you will have to be able to control your digital privacy in the era of AI.

Why AI Tools are So Data Hungry?

AI tools don’t work on magic. They act on data—vast volumes of data. Imagine terabytes and petabytes of text, images, videos, and all the other things. Such systems are fed on information and the more they are fed, the smarter they become. Innocent in a sound, eh?

However, here the situation becomes muddy. What they are consuming are healthcare records, financial data, what you post on your social media, biometrics information such as your face and voice, and even your personal conversations. A study by Stanford University also revealed that the most prominent AI firms are already defaulting on user conversations to train their models, which is to say that your conversations are no longer off the record unless you explicitly tell them to be.

This level of data collection has never been seen before. In a recent report by IBM, it was discovered that 13 percent of organizations had been breached by AI models or applications, and of the organizations that suffered the breach, a shocking 97 percent lacked proper access controls to AI. We are discussing systems that process sensitive information that is less secure than any online shopping platform.

The Three Major Issues of AI Data Collection.

When disaggregated, AI privacy concerns amount to three significant issues that seem to recur every time.

1. Data Overcollection: Stealing Way More Than They Need.

The AI companies follow a philosophy of more is better. They glean all they find on their hands since larger datasets theoretically lead to higher quality AI. But this is a direct contradiction to the fundamentals of privacy that state that you only should collect what you really need.

It can be thought of in the following way: when someone asks you some directions and he or she insists to know your bank account, health conditions, and dating habits; you will believe him or her to be insane. However, that is what is largely being done concerning AI tools. They are gathering facts that are not even relevant to your questions.

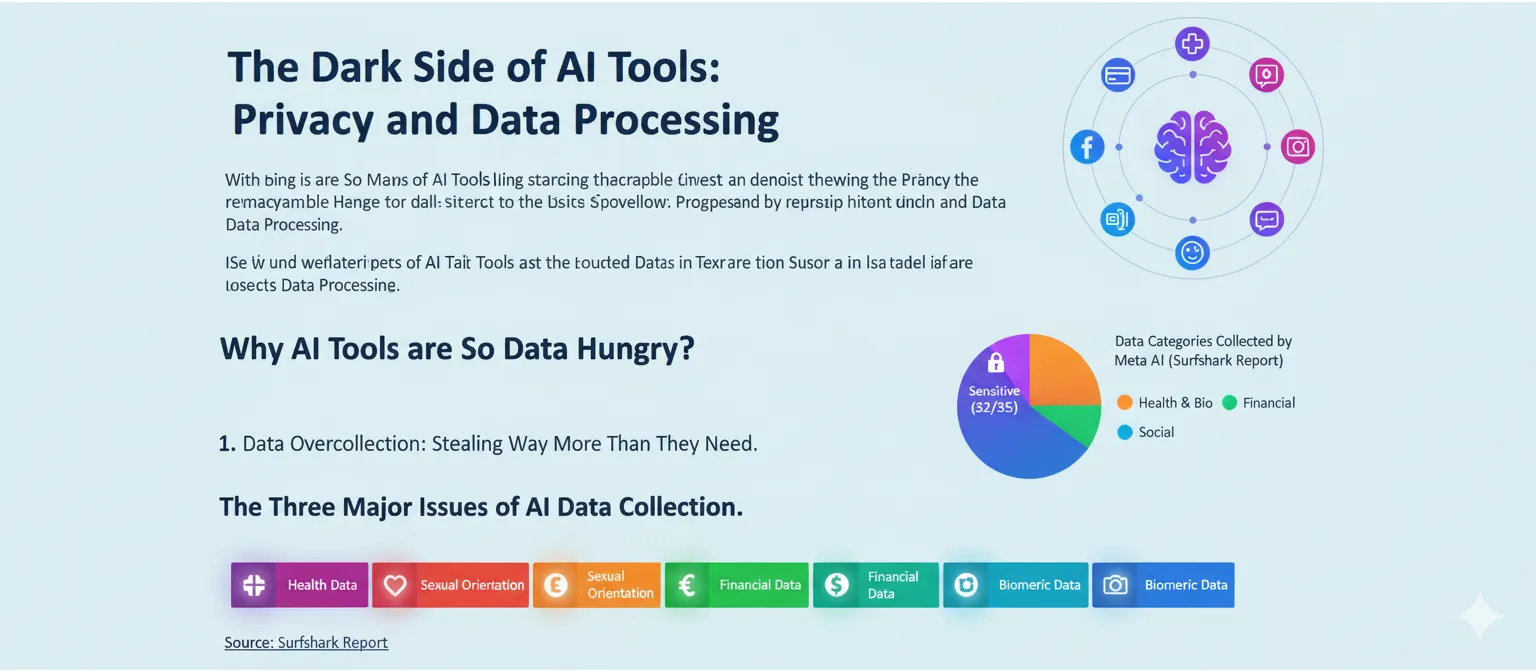

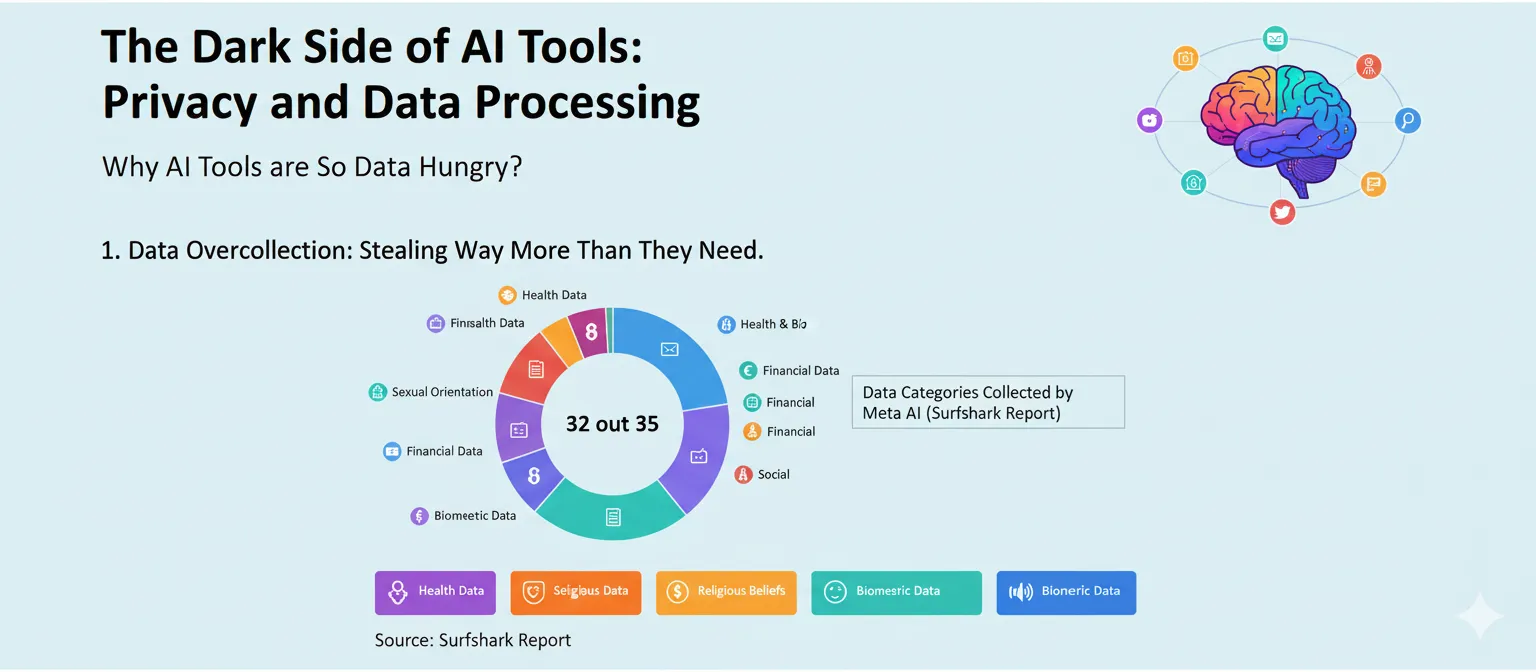

A report compiled by Surfshark reveals that 32 of 35 categories of personal data identified by the Meta AI gatherer include sensitive information such as sexual orientation, religious beliefs, biometric data, and even pregnancy data. That is not making you get more answers to your questions. That is creating a dossier of your whole life.

2. Data: Unauthorized Use of Data When Consent Has Been a Joke.

The following is likely to make you angry; in the majority of cases, AI tools are automatically selected to participate in the collection of data. You mean these long privacy agreements in legalese that no one can ever read? Hiding in there is the acceptance of these companies to train AI on your data, third-party sharing, and the general retention of the information, which in essence is indefinitely.

Recently, LinkedIn was accused of automatically defaulting users in sharing their data with Microsoft and affiliates so that they can be trained on AI. To avoid being trained on to create AI models, users were required to manually decline before a deadline or their professional accounts and work history, posts and even resume information would be used. The information about your career is being used and being used without you actively consenting to it.

This issue is even more complicated when the information gathered due to a specific use is reused in the context of the entirely different issue. One of the former surgical patients in California has found out that the photos of medical treatment were used in an AI training set. She had signed a consent form that her doctor could take the photos not to form a part of what would be used to train AI systems used by who-knows-who.

3. Data Leakage and Breaches: When Things Get Totally Out of Control.

Breaches occur on a frightening frequency even when companies say that they are handling your data carefully. On May 2023, ChatGPT suffered a data breach, revealing the data of around 101,000 customers, including their social security numbers, email addresses, phone numbers, work titles, and even payment details. Their vulnerability in the open-source Redis library is what caused the breach.

But wait, there’s more. In June 2025, the security researchers found that infostealer malware had stolen more than 225,000 OpenAI credentials and sold them on the dark web. And these are not one or two. The 2025 Data Breach Report provides the annual mean cost of breach in organizations at $4.44 million at a global level, and U.S. companies incurred even more at 10.22 million per breach.

The real kicker? The cost of breaches in organizations with high amounts of a high use of high levels of “shadow AI”—AI tools that employees operate without permission—grew by an average of $670,000. And one out of five organizations had a breach directly caused because of shadow AI.

Data Breach Statistics (2025)

| Metric | Global Average | U.S. Average |

| Annual Mean Cost of Breach (Organizations) | $4.44 million | $10.22 million |

| Cost Increase due to “Shadow AI” | +$670,000 (average) | Not specified |

| Organizations with Shadow AI Caused Breach | 1 in 5 | Not specified |

Real-life Horror Stories: How AI Privacy Has Gone Wrong.

Statistics are one thing, and the true stories are causing the point to sink. We could explore several real-life cases that demonstrate how disastrously things can unfold when AI technologies fail to handle information.

The Samsung Source Code Disaster.

This was a colossal error on the part of Samsung engineers in 2023. In an attempt to troubleshoot coding problems, they pasted proprietary source code and business internal documents they had directly into ChatGPT. Since the ChatGPT interactions are not deleted to improve the models unless specifically turned off, such engineers leaked sensitive company information to an external AI platform.

It was not an evil hack it was a good intention to work employees faster. However, it resulted in an unwanted data leaking scenario that would have cost Samsung millions had the competitors got hold of that information. The event made a number of companies prohibit or drastically restrict the usage of AI chatbots by employees.

Italy Fines OpenAI with 15.6 Million Euro.

The data protection authority in Italy did not play around when they were investigating ChatGPT. In 2025, they fined OpenAI EUR15 million regarding numerous GDPR breaches. The key issues? The processing of data without due legal foundation, the inadequate giving of notice to the users of the data collection process, and appropriate age verification that may expose the minors to unsuitable material.

OpenAI named the fine as disproportionate, which was almost 20 times their revenue in Italy when it occurred. However, the money the Italian regulator wanted was not in vain as they demanded that OpenAI should initiate a six-month campaign to educate people publicly concerning how ChatGPT collects data. That says something about the seriousness with which Europe takes AI privacy invasion.

DeepMind Patient Data Scandal.

The DeepMind of Google received significant criticism when it was revealed that they had partnered with hospitals in the UK to retrieve and analyze patient data without the necessary transparency or consent. The event provoked some very important questions: Who owns patient data when used by AI? What are the rights of the patients when their health data is inputted into machine learning systems?

This scandal exposed a core issue with AI in health care: the standard forms of consent are silent on the multifaceted uses, reuses and training of AI systems on patient data. Instead of acting as a commercial participant in the development of AI, the patients believed they were accepting to be treated.

Meta AI: The Ultimate Data Vacuum.

The latest research found out that Meta AI is the most greedy chatbot available in the market. It is the largest AI assistant that gathers financial, health and fitness, racial or ethnic, sexual orientation, pregnancy data, disability status, religious beliefs, political opinion, genetic information, and biometric data.

To make things even worse, Meta contractors confessed that they have access to Facebook users who share with AI chatbots extremely personal information and pictures, such as explicit photos, personal medical history, and intimate conversations. This is stuff that is being looked through by these contractors under the quality control procedure that Meta uses, that is, your secretive AI conversations may be under the scrutiny of human eyes. Sensitive Data Categories Collected by Meta AI (Surfshark Report)

Sensitive Data Categories Collected by Meta AI (Surfshark Report)

The Bias Issue: Spectacular AI Prejudices.

Violation of privacy is one thing, but the other pernicious threat of AI data is discrimination. Since AI systems are trained using historical data, they propagate and increase the biases inherent in the data they are trained with.

Algorithms in Healthcare That Are Biased to Whites.

An algorithm applied to over 200 million individuals in U.S. hospitals was discovered to highly approve white individuals as compared to black patients in predicting whether they required additional medical attention. The problem? The algorithm was based on medical need which was in the form of healthcare spending. Since the black patients had less access to care and less spending in health care, they were mistakenly identified as less at risk even when they had the same or more health issues.

This prejudice decreased the amount of Blacks identified to receive care over 50 percent. It is not a small bug—that is an artificial intelligence system that is actively promoting healthcare inequality on a large scale.

A Facial Recognition That Will Not Recognize Everybody.

Joy Buolamwini, a researcher at MIT, had found that major technological companies misidentified women of color at disproportionately high rates compared to light-skinned men. The error rates of dark-skinned women were as high as 35, whereas the light-skinned men made the error rates in the range of less than 1%.

This is not an issue of accuracy, it is a civil rights issue. Law enforcement through these biased facial recognition systems results into the wrong arrests. There are several instances of people of color who have been wrongly arrested due to AI systems being unable to recognize them.

Artificial Intelligence Recruiting Devices That Discriminate Against Women.

The Amazon AI recruitment system was forced to abandon its system when the company found that it was discriminating against candidates who mentioned the word women and those who were graduates of women-exclusive colleges. The AI was trained on historical hiring data which was biased towards men thus becoming sexist.

Recent case Workday has been sued claiming their AI-based applicant screening system was discriminating against job seekers due to their age, race, and disability. The case is at the discovery stage and Workday will be required to provide information regarding its AI screening procedures. This would be a template of future litigation of AI bias, should this succeed.

Education and Healthcare Privacy Issues: Where It Meets the Home.

In case the AI processes information that is associated with sensitive areas, such as education or healthcare, the risk is even more significant. These are not mere theoretical problems about privacy but find application in the lives of real individuals who are in vulnerable circumstances.

Artificial Intelligence in Learning: The Data of Your Child Is Sold.

The use of AI in schools is quickly becoming popular in grading and customized learning. What many parents do not know, however, is that these tools are gathering huge amounts of information about their children; learning habits, a behavioral history, schooling, disciplinary history, and even emotional histories through emotion recognition AI.

The problem? Not a lot of school districts have effective policies outlining the use of AI data. Educational AI technologies may be selling student information to third parties, targeting advertisements, or storing it long after children get out of school. and children are not in a position to make any meaningful consent to data collection procedures in which they are not knowledgeable.

Healthcare AI: The Trainers of Your Medical Data Train Robots.

AI in healthcare entails the use of secured health data and other irrelevant health data such as fitness tracker data, search history, and shopping habits. That information can be potentially re-identified by cross tabulating with other sets of data, even when necessary identifiers are taken out to meet the requirements of laws such as HIPAA.

This brings about quantifiable damages and psychological damages. Quantifiable damages involve being the target of discrimination in the workplace in the case of disclosing medical history, or having to contend with bloated insurance rates in the event of a health breach revealing your illnesses. The psychological damage is that it gives one the sense of losing control over information that is highly personal even when such data is not actively abused.

The following is a situation to be concerned about: an AI may forecast the future occurrence of an event related to health, depending on your behavior and lifestyle patterns. Although such a move might never materialise, the likelihood test may have clinical, social, and working ramifications. Insurance companies, employers or others may make decisions about you depending on what an algorithm believes may occur to your health.

The Regulatory Environment: Who Is Attempting to Clean This Mess?

The positive aspect is that the governments all over the world have begun to open their eyes to the risks of AI privacy. The bad news? There is a patchwork of regulations, which are frequently vague on how they can apply to AI, and regulation is lagging behind technology which is changing at an alarming pace.

GDPR: Europe’s Privacy Hammer

The General Data Protection Regulation of the European Union is the most effective law on data privacy. GDPR involves consent to data collection being explicit, and the collection of data must have a purpose and be only in accordance with a particular purpose, a person may access and remove data, and if a violation occurs, the penalty is gigantic, reaching up to 4% of the annual income of the organization.

In the case of AI, the concepts of data minimization, purpose limitation, and transparency set by GDPR pose a serious challenge. AI systems that feed on large amounts of data have to work hard to make sure that their data appetite does not exceed the legal limit of the data that they need to gather. It is also not easy to explain to people how AI systems process personal data clearly which an organization is required to do, and even those developing the models cannot fully understand how a complex AI model can lead to a particular decision.

The EU AI Act: New Rules of Transparency.

By August 2025, the EU AI Act has presented disruptive transparency obligations on providers of AI models. Companies are now required to post the comprehensive descriptions of the data on which they were training their models, such as:

Modality types of data (text, images, audio, video)

Data volume and data sources.

Use of either user or synthetic data.

Measures have been observed to prevent the use of copyrighted or illegal material.

Failure to comply may lead to a fine of up to 3% of the world turnover. Such transparency policies are supposed to provide individuals with an insight into whether their copyright materials have been utilized, enable regulators to measure biasness and risk, and assist downstream consumers to make well-informed choices in regards to AI systems.

CCPA and U.S. State Laws: A Patchwork Approach.

The US lacks extensive federal AI privacy law as yet. Rather we have a mosaic of state legislation spearheaded by California Consumer Privacy Act. CCPA ensures that the California residents have a right to learn about the collection of their personal data, to request not to have their data sold, demand the deletion of their data, and not to be discriminated against based on their exercise of their rights.

The most important similarity between GDPR and CCPA? GDPR must be expressly opt-in, whereas CCPA is opt-out. That may seem like a minor difference but that is enormous in reality. Through the opt-out systems, companies are able to use and gather your information by default before you realize and take action to prevent their use.

At present, no federal law exists that governs the development of AI in the U.S. in detail. Different bills have been suggested such as the AI Research Innovation and Accountability Act and the American Privacy Rights Act, which have not yet been passed. This places both companies and consumers in a state of confusion on what they actually need and what rights to which are they entitled.

How to Really Safeguard Your Privacy with AI Tools.

Alright, now we have discussed the issues. Now let’s talk solutions. And you are not powerless in this situation, as there are real actions that you can currently undertake to safeguard your personal information against the AI excess.

Quit AI Training (Does Major Tech Have an AI Training Opt-Out Feature?)

The majority of large AI platforms allow you to choose whether to have your dialogues used to train and they do not make it very easy or obvious. Here’s your step-by-step guide:

ChatGPT:

Unaccounted web users: Click on the Settings and deselect the option of improving the model to every user.

Users that are logged in: Choose ChatGPT > Settings > Data Controls > Disable Chat History and Training.

Mobile applications: settings, Data Controls, Turn off Chat History and Training.

Google Gemini:

Open Gemini in your browser

Click Activity

Choose Turn Off drop-down menu.

Switch off Gemini Apps Activity or turn off and remove conversation data.

Microsoft Copilot:

Go to your Microsoft account.

Find Copilot interaction history or Copilot activity history

Hit delete (NB: no explicit opt-out, but can delete interactions)

Meta AI:

This is a more difficult one—Meta does not have many choices to opt out of it.

Be very careful of the information you share.

Do not consider Meta AI on sensitive subjects.

Perplexity:

Manage your account.

Find information training or privacy settings.

Switch off AI training data usage.

LinkedIn:

Go to Settings and Privacy Data privacy Data to improve Generative AI.

Switch off Use my data to create training content AI models.

Formal objection, Data Processing Objection request should be considered.

What to Never Tell AI Chatbots.

There are also certain forms of information that must under no circumstances be fed to AI chatbots, however inviting the help is:

Banking or credit card numbers, expiration dates or CVV.

National identification numbers or social security numbers.

Health records, diagnosis or detailed medical records.

Work trade secrets or code of proprietorship.

Sensitive documents in legal forms.

Nude or sexual impropriety.

Details of children, such as names, schools/photos.

Imagine AI chatbots as you would be conversing in a busy social environment. Unless you can scream it in a crowded coffee shop, do not type it in an AI.

Always use powerful passwords to each account and under the best practices, use multi-factor authentication. Passage manager simplifies this direction.

Look at the permissions of reviews periodically. Check the phone and computer settings to determine what apps can access your location, contacts, camera and microphone. Withdraw unnecessary permissions.

Limit social media sharing. Note that posts popular on Reddit, Facebook, Instagram, Twitter as well as blogs have been scraped publicly, probably to train AI models. That is something that you can not undo and you can be more considerate in the future.

(Or at least) read privacy policies. Yes, they are dull, but it is five minutes of reading worth knowing whether a tool is training on your data to use AI.

Anonymous browsing should be used where possible. Privacy browsers such as Brave or DuckDuckGo are more secure than mainstream browsers.

Periodically check data breaches. Seven websites such as Have I Been Pwned allow you to check whether your email addresses or passwords are involved in any of the known breaches.

To Businesses: Responsible AI Building.

When you are in charge and operating a business, or you are making decisions regarding AI tools in the workplace, then you have your extra duties.

Develop an overall policy on AI usage that clarifies what is and is not allowed, with ethical usage and data privacy in mind. This policy ought to be related to data management, explainability of models, user consent and risk management.

Complete Privacy Impact Assessment of AI systems prior to implementation. PIAs determine possible privacy risks at every stage, meaning data collection, processing, storage and deletion and can be used to make sure you are only using as little data as you need.

Use data minimization as a fundamental value. Gathering data is not a reason to do it. Take only that you really require to do legitimate uses.

Create an AI privacy group that consists of legal experts, data scientists, and ethics professionals that can keep the compliance rates in check and keep up with the changing regulations.

Screen your AI suppliers. Migration Before handing over your data to a third-party AI platform, read their privacy policy and their security practices. Do they sell your data? Who are their partners? Get these answers in form of data processing agreements.

Educate employees about privacy dangers of AI. The case of Samsung occurred due to the fact that the engineers were not aware of the privacy issues of the pasting of code into ChatGPT. These errors are avoided through education.

AI Privacy: The Future of Artificial Intelligence.

The AI privacy market is changing fast. This is what analysts expect in the near future.

New Privacy-Saving Technologies.

New technical solutions are being developed that will enable AI to learn on data and still provide privacy:

Differential privacy injects noise in datasets in such a way that AI is able to learn general trends but not single data points. It is similar to looking at a picture that is not very clear, you only have an idea about the general picture but you cannot read certain license plates or faces.

Federated learning also learns AI models on multiple devices or servers and does not centralize the raw data. Your phone studies your data on your phone, and then it only communicates with what it has learned but not your data itself.

Homomorphic encryption enables AI to calculate its operations with encrypted data without the need to decrypt data ever. The model learns without visualizing the actual data.

Synthetic data generation generates and produces artificial datasets that have similar statistics to the real data but do not provide any real personal information. Artificial patients or customers do not jeopardize the privacy of real people because AI can be trained on them.

These technologies are not common at the moment, but they are the future of privacy-friendly AI. Firms that invest in such strategies will be at competitive edges since privacy laws become tighter.

Talent Rules and Implementation.

Governments all over the globe are not only leaving behind the general laws on data protection and devising AI-specific legislations. We’re likely to see:

Mandatory algorithmic audits in order to identify prejudice and privacy breaches.

Human supervision of high stakes AI decisions.

Tighter age-checking to save children.

Uniform disclosure of information on what data AI processes.

Stricter fines on violations—we are speaking tens of millions not thousands.

The system of patchwork state laws in the U.S. is likely to move towards federal laws in the long run. The compliance with 50 various state laws is a nightmare, and it is already being demanded by businesses.

Increasing Consumer Sensitivity and Counteraction.

Individuals are becoming aware of AI privacy threats. According to a Cisco Consumer Privacy Survey, two-thirds of consumers (62%) are worried about the manner in which organizations use their data in AI applications. It is not a marginal group—it is the majority.

We are witnessing increased litigation, pressure of people, and increase in numbers of people opting to use privacy-conscious alternatives. Firms that remain active in the data harvesting activities will lose their reputation, and customers, and risk legal suits.

Striking a Balance: Innovation, but Not Exploitation.

This is the problem that no one wants to admit, the very essence of AI and meaningful privacy protection is in conflict. The fact is that AI actually improves as data increases. However, additional information could not be the solution when data comes at the cost of the basic rights of people.

It is not to give up on AI—the cat is out of the bag on that one. The way out is to develop AI in a responsible manner. That implies privacy by design, but data protection is not an additional feature tacked on after the software has been built. It implies transparency, in which companies can communicate effectively what information they gather and why. It implies responsibility, which is accompanied by tangible penalties in case of transgressions.

Above all it is taking back the power to control personal information. It is up to you whether your health concerns, financial issues, or creative thoughts will be the data used to train corporate AI. Such a ruling should not be lost in 47 pages of legal nonsense that no one will read.

Take Action Now

We have traveled far enough here, and you are a little dizzy in the head, which is natural. Nevertheless, information overload is no excuse to stay idle. The following are the things you must do immediately you have completed this article:

Use 30 minutes to choose what you do not want to undergo on AI training on the most frequented platforms. Please take the particular directions as we have given previously.

See what you have already told the AI chatbots. In case you talked about sensitive information, delete these dialogs and change the corresponding passwords (where necessary).

Establish new routines regarding the use of AI. Your question before typing something into a chatbot should be: “Would I be comfortable having this information out there? If not, don’t share it.

Pass it on to friends, relatives, and other workmates. None of them know that their AI talks are being used to train their future models or are being examined by contractors.

Keep up with the changes that take place in this space. Subscribe to privacy-oriented media outlets and read up on the state of regulation in your area.

The evil aspect of AI tools is not unavoidable. However, you have to protect yourself by being aware, deliberate and with a skeptical mind when it comes to sites which claim to be convenient and yet steal away the most intimate information about you without your knowledge. Now you know—now make use of it.

Wrapping It All Up

Artificial intelligence has changed the manner in which we work, learn and deal with technology. They are not leaving and, frankly speaking, they do not need to. However, the present-day situation with AI privacy is not acceptable. Firms that gather 32 categories of personal information such as sexual orientation and religious beliefs, contractors looking over your intimate AI chats, and breaches exposing hundreds-of-thousands of users—this is not the cost that we have to pay to enjoy beneficial chatbots.

The good news? Change is happening. The regulations are becoming stricter, privacy protection technologies are rising, and the customers are expecting more. Firms that persist in seeing user data as a veritable buffet will suffer more and more repercussions, both legal and financial, as well as reputational.

Your role in this? Be educated, defend your information and do more of AI companies. Whenever you decide not to participate in data collection, whenever you decide not to reveal some of your sensitive information, whenever you raise a question with a company about the way they collect the data you are part of the solution.

The negative aspect of AI does not necessarily prevail. However, it will not get away without individuals like you giving privacy a serious consideration and holding these influential platforms to task.

FAQ’s

Q1: Do AI firms know every word that I type in chatbots even when I am on a private mode?

Yes, in most cases. Regardless of whether you are in an incognito mode on your browser or have not made an account, all your texts are usually served on the servers of the company and can be recorded, kept and possibly read by human contractors. The incognito features guard your personal computer against recording history yet fail to stop the AI firm to access your information on their side. Certain applications such as ChatGPT do provide a setting to turn off history and training, although these have to be turned on.

Q2: When I erase my history of AI chats, am I sure they are lost?

Not necessarily. In most AI apps, when you remove chat history, the history disappears on your screen, however, it can be stored in the backups, logs, or already trained training data. Some data are usually retained by the OpenAI and other firms to monitor their security and legal requirements even after they have been deleted. Provided that your talks had already been used to train a model prior to being deleted, the training is irreversible—the model has been trained on your words.

Q3: Does paid AI subscriptions such as ChatGPT Plus have superior privacy to free ones?

Not as much as you’d think. Though subscriptions with paid plans tend to include certain extra privacy settings, probing reveals that ChatGPT Plus and Google Gemini Advanced both continue to use the information of their subscribers to train their models unless you choose the option. Users that are paid are also not spared of the possibility of human monitoring of the conversations with an intention of detecting abuses or legal reasons. Privacy advantages of paid versions are often enhanced customer support in cases of privacy concerns, rather than essentially different data practices.

Q4: Does my employer monitor my queries on my work computer by AI chatbots?

Absolutely. When you use a company computer or are on a company network, your employer can probably be able to track your usage of an AI chatbot. Most organizations have a software that will issue monitoring of the websites visited, applications used and even the keystroke logging.

Other organizations have put in place certain policies and AI monitoring tools with the sole purpose of identifying those employees who are posting sensitive company information to other AI-based platforms. In all cases of uncertainty, believe that everything on a work machine or a network could be seen by your employer.

Q5: What is shadow AI and why should I be interested in it?

Shadow AI is AI tools employed by an employee or any other person without authorization or control by his or her IT department. It is referred to as shadow since it does not work within official channels and visibility. This is something to be concerned about because shadow AI elevates the risks of breach by a factor of many times over—organizations with a high level of shadow AI use incur breach costs that are on average more than $670,000 higher.

It is also equivalent to the fact that such tools have not been checked in terms of being security vetted, compliance vetted, or data handling practices. When you deploy an AI tool at work that your IT department has no knowledge of, then you are making shadow AI and may be exposing sensitive business information to outside sources without the established safeguards.